add cpp inference for windows

parent a3494c66e9
commit d9905dd19d

@ -1,8 +1,16 @@

|

|||

project(ocr_system CXX C)

|

||||

option(WITH_MKL "Compile demo with MKL/OpenBlas support, default use MKL." ON)

|

||||

|

||||

option(WITH_MKL "Compile demo with MKL/OpenBlas support, default use MKL." OFF)

|

||||

option(WITH_GPU "Compile demo with GPU/CPU, default use CPU." OFF)

|

||||

option(WITH_STATIC_LIB "Compile demo with static/shared library, default use static." ON)

|

||||

option(USE_TENSORRT "Compile demo with TensorRT." OFF)

|

||||

|

||||

SET(PADDLE_LIB "" CACHE PATH "Location of libraries")

|

||||

SET(OPENCV_DIR "" CACHE PATH "Location of libraries")

|

||||

SET(CUDA_LIB "" CACHE PATH "Location of libraries")

|

||||

SET(CUDNN_LIB "" CACHE PATH "Location of libraries")

|

||||

SET(TENSORRT_DIR "" CACHE PATH "Compile demo with TensorRT")

|

||||

|

||||

set(DEMO_NAME "ocr_system")

|

||||

|

||||

|

||||

macro(safe_set_static_flag)

|

||||

|

|

@ -15,24 +23,60 @@ macro(safe_set_static_flag)

|

|||

endforeach(flag_var)

|

||||

endmacro()

|

||||

|

||||

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11 -g -fpermissive")

|

||||

set(CMAKE_STATIC_LIBRARY_PREFIX "")

|

||||

message("flags" ${CMAKE_CXX_FLAGS})

|

||||

set(CMAKE_CXX_FLAGS_RELEASE "-O3")

|

||||

if (WITH_MKL)

|

||||

ADD_DEFINITIONS(-DUSE_MKL)

|

||||

endif()

|

||||

|

||||

if(NOT DEFINED PADDLE_LIB)

|

||||

message(FATAL_ERROR "please set PADDLE_LIB with -DPADDLE_LIB=/path/paddle/lib")

|

||||

endif()

|

||||

if(NOT DEFINED DEMO_NAME)

|

||||

message(FATAL_ERROR "please set DEMO_NAME with -DDEMO_NAME=demo_name")

|

||||

|

||||

if(NOT DEFINED OPENCV_DIR)

|

||||

message(FATAL_ERROR "please set OPENCV_DIR with -DOPENCV_DIR=/path/opencv")

|

||||

endif()

|

||||

|

||||

|

||||

set(OPENCV_DIR ${OPENCV_DIR})

|

||||

find_package(OpenCV REQUIRED PATHS ${OPENCV_DIR}/share/OpenCV NO_DEFAULT_PATH)

|

||||

if (WIN32)

|

||||

include_directories("${PADDLE_LIB}/paddle/fluid/inference")

|

||||

include_directories("${PADDLE_LIB}/paddle/include")

|

||||

link_directories("${PADDLE_LIB}/paddle/fluid/inference")

|

||||

find_package(OpenCV REQUIRED PATHS ${OPENCV_DIR}/build/ NO_DEFAULT_PATH)

|

||||

|

||||

else ()

|

||||

find_package(OpenCV REQUIRED PATHS ${OPENCV_DIR}/share/OpenCV NO_DEFAULT_PATH)

|

||||

include_directories("${PADDLE_LIB}/paddle/include")

|

||||

link_directories("${PADDLE_LIB}/paddle/lib")

|

||||

endif ()

|

||||

include_directories(${OpenCV_INCLUDE_DIRS})

|

||||

|

||||

include_directories("${PADDLE_LIB}/paddle/include")

|

||||

if (WIN32)

|

||||

add_definitions("/DGOOGLE_GLOG_DLL_DECL=")

|

||||

set(CMAKE_C_FLAGS_DEBUG "${CMAKE_C_FLAGS_DEBUG} /bigobj /MTd")

|

||||

set(CMAKE_C_FLAGS_RELEASE "${CMAKE_C_FLAGS_RELEASE} /bigobj /MT")

|

||||

set(CMAKE_CXX_FLAGS_DEBUG "${CMAKE_CXX_FLAGS_DEBUG} /bigobj /MTd")

|

||||

set(CMAKE_CXX_FLAGS_RELEASE "${CMAKE_CXX_FLAGS_RELEASE} /bigobj /MT")

|

||||

if (WITH_STATIC_LIB)

|

||||

safe_set_static_flag()

|

||||

add_definitions(-DSTATIC_LIB)

|

||||

endif()

|

||||

else()

|

||||

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -g -o3 -std=c++11")

|

||||

set(CMAKE_STATIC_LIBRARY_PREFIX "")

|

||||

endif()

|

||||

message("flags" ${CMAKE_CXX_FLAGS})

|

||||

|

||||

|

||||

if (WITH_GPU)

|

||||

if (NOT DEFINED CUDA_LIB OR ${CUDA_LIB} STREQUAL "")

|

||||

message(FATAL_ERROR "please set CUDA_LIB with -DCUDA_LIB=/path/cuda-8.0/lib64")

|

||||

endif()

|

||||

if (NOT WIN32)

|

||||

if (NOT DEFINED CUDNN_LIB)

|

||||

message(FATAL_ERROR "please set CUDNN_LIB with -DCUDNN_LIB=/path/cudnn_v7.4/cuda/lib64")

|

||||

endif()

|

||||

endif(NOT WIN32)

|

||||

endif()

|

||||

|

||||

include_directories("${PADDLE_LIB}/third_party/install/protobuf/include")

|

||||

include_directories("${PADDLE_LIB}/third_party/install/glog/include")

|

||||

include_directories("${PADDLE_LIB}/third_party/install/gflags/include")

|

||||

|

|

@ -43,10 +87,12 @@ include_directories("${PADDLE_LIB}/third_party/eigen3")

|

|||

|

||||

include_directories("${CMAKE_SOURCE_DIR}/")

|

||||

|

||||

if (USE_TENSORRT AND WITH_GPU)

|

||||

include_directories("${TENSORRT_ROOT}/include")

|

||||

link_directories("${TENSORRT_ROOT}/lib")

|

||||

endif()

|

||||

if (NOT WIN32)

|

||||

if (WITH_TENSORRT AND WITH_GPU)

|

||||

include_directories("${TENSORRT_DIR}/include")

|

||||

link_directories("${TENSORRT_DIR}/lib")

|

||||

endif()

|

||||

endif(NOT WIN32)

|

||||

|

||||

link_directories("${PADDLE_LIB}/third_party/install/zlib/lib")

|

||||

|

||||

|

|

@ -57,17 +103,24 @@ link_directories("${PADDLE_LIB}/third_party/install/xxhash/lib")

|

|||

link_directories("${PADDLE_LIB}/paddle/lib")

|

||||

|

||||

|

||||

AUX_SOURCE_DIRECTORY(./src SRCS)

|

||||

add_executable(${DEMO_NAME} ${SRCS})

|

||||

|

||||

if(WITH_MKL)

|

||||

include_directories("${PADDLE_LIB}/third_party/install/mklml/include")

|

||||

set(MATH_LIB ${PADDLE_LIB}/third_party/install/mklml/lib/libmklml_intel${CMAKE_SHARED_LIBRARY_SUFFIX}

|

||||

${PADDLE_LIB}/third_party/install/mklml/lib/libiomp5${CMAKE_SHARED_LIBRARY_SUFFIX})

|

||||

if (WIN32)

|

||||

set(MATH_LIB ${PADDLE_LIB}/third_party/install/mklml/lib/mklml.lib

|

||||

${PADDLE_LIB}/third_party/install/mklml/lib/libiomp5md.lib)

|

||||

else ()

|

||||

set(MATH_LIB ${PADDLE_LIB}/third_party/install/mklml/lib/libmklml_intel${CMAKE_SHARED_LIBRARY_SUFFIX}

|

||||

${PADDLE_LIB}/third_party/install/mklml/lib/libiomp5${CMAKE_SHARED_LIBRARY_SUFFIX})

|

||||

execute_process(COMMAND cp -r ${PADDLE_LIB}/third_party/install/mklml/lib/libmklml_intel${CMAKE_SHARED_LIBRARY_SUFFIX} /usr/lib)

|

||||

endif ()

|

||||

set(MKLDNN_PATH "${PADDLE_LIB}/third_party/install/mkldnn")

|

||||

if(EXISTS ${MKLDNN_PATH})

|

||||

include_directories("${MKLDNN_PATH}/include")

|

||||

set(MKLDNN_LIB ${MKLDNN_PATH}/lib/libmkldnn.so.0)

|

||||

if (WIN32)

|

||||

set(MKLDNN_LIB ${MKLDNN_PATH}/lib/mkldnn.lib)

|

||||

else ()

|

||||

set(MKLDNN_LIB ${MKLDNN_PATH}/lib/libmkldnn.so.0)

|

||||

endif ()

|

||||

endif()

|

||||

else()

|

||||

set(MATH_LIB ${PADDLE_LIB}/third_party/install/openblas/lib/libopenblas${CMAKE_STATIC_LIBRARY_SUFFIX})

|

||||

|

|

@ -82,24 +135,66 @@ else()

|

|||

${PADDLE_LIB}/paddle/lib/libpaddle_fluid${CMAKE_SHARED_LIBRARY_SUFFIX})

|

||||

endif()

|

||||

|

||||

set(EXTERNAL_LIB "-lrt -ldl -lpthread -lm")

|

||||

if (NOT WIN32)

|

||||

set(DEPS ${DEPS}

|

||||

${MATH_LIB} ${MKLDNN_LIB}

|

||||

glog gflags protobuf z xxhash

|

||||

)

|

||||

if(EXISTS "${PADDLE_LIB}/third_party/install/snappystream/lib")

|

||||

set(DEPS ${DEPS} snappystream)

|

||||

endif()

|

||||

if (EXISTS "${PADDLE_LIB}/third_party/install/snappy/lib")

|

||||

set(DEPS ${DEPS} snappy)

|

||||

endif()

|

||||

else()

|

||||

set(DEPS ${DEPS}

|

||||

${MATH_LIB} ${MKLDNN_LIB}

|

||||

glog gflags_static libprotobuf xxhash)

|

||||

set(DEPS ${DEPS} libcmt shlwapi)

|

||||

if (EXISTS "${PADDLE_LIB}/third_party/install/snappy/lib")

|

||||

set(DEPS ${DEPS} snappy)

|

||||

endif()

|

||||

if(EXISTS "${PADDLE_LIB}/third_party/install/snappystream/lib")

|

||||

set(DEPS ${DEPS} snappystream)

|

||||

endif()

|

||||

endif(NOT WIN32)

|

||||

|

||||

set(DEPS ${DEPS}

|

||||

${MATH_LIB} ${MKLDNN_LIB}

|

||||

glog gflags protobuf z xxhash

|

||||

${EXTERNAL_LIB} ${OpenCV_LIBS})

|

||||

|

||||

if(WITH_GPU)

|

||||

if (USE_TENSORRT)

|

||||

set(DEPS ${DEPS}

|

||||

${TENSORRT_ROOT}/lib/libnvinfer${CMAKE_SHARED_LIBRARY_SUFFIX})

|

||||

set(DEPS ${DEPS}

|

||||

${TENSORRT_ROOT}/lib/libnvinfer_plugin${CMAKE_SHARED_LIBRARY_SUFFIX})

|

||||

if(NOT WIN32)

|

||||

if (WITH_TENSORRT)

|

||||

set(DEPS ${DEPS} ${TENSORRT_DIR}/lib/libnvinfer${CMAKE_SHARED_LIBRARY_SUFFIX})

|

||||

set(DEPS ${DEPS} ${TENSORRT_DIR}/lib/libnvinfer_plugin${CMAKE_SHARED_LIBRARY_SUFFIX})

|

||||

endif()

|

||||

set(DEPS ${DEPS} ${CUDA_LIB}/libcudart${CMAKE_SHARED_LIBRARY_SUFFIX})

|

||||

set(DEPS ${DEPS} ${CUDNN_LIB}/libcudnn${CMAKE_SHARED_LIBRARY_SUFFIX})

|

||||

else()

|

||||

set(DEPS ${DEPS} ${CUDA_LIB}/cudart${CMAKE_STATIC_LIBRARY_SUFFIX} )

|

||||

set(DEPS ${DEPS} ${CUDA_LIB}/cublas${CMAKE_STATIC_LIBRARY_SUFFIX} )

|

||||

set(DEPS ${DEPS} ${CUDNN_LIB}/cudnn${CMAKE_STATIC_LIBRARY_SUFFIX})

|

||||

endif()

|

||||

set(DEPS ${DEPS} ${CUDA_LIB}/libcudart${CMAKE_SHARED_LIBRARY_SUFFIX})

|

||||

set(DEPS ${DEPS} ${CUDA_LIB}/libcudart${CMAKE_SHARED_LIBRARY_SUFFIX} )

|

||||

set(DEPS ${DEPS} ${CUDA_LIB}/libcublas${CMAKE_SHARED_LIBRARY_SUFFIX} )

|

||||

set(DEPS ${DEPS} ${CUDNN_LIB}/libcudnn${CMAKE_SHARED_LIBRARY_SUFFIX} )

|

||||

endif()

|

||||

|

||||

|

||||

if (NOT WIN32)

|

||||

set(EXTERNAL_LIB "-ldl -lrt -lgomp -lz -lm -lpthread")

|

||||

set(DEPS ${DEPS} ${EXTERNAL_LIB})

|

||||

endif()

|

||||

|

||||

set(DEPS ${DEPS} ${OpenCV_LIBS})

|

||||

|

||||

AUX_SOURCE_DIRECTORY(./src SRCS)

|

||||

add_executable(${DEMO_NAME} ${SRCS})

|

||||

|

||||

target_link_libraries(${DEMO_NAME} ${DEPS})

|

||||

|

||||

if (WIN32 AND WITH_MKL)

|

||||

add_custom_command(TARGET ${DEMO_NAME} POST_BUILD

|

||||

COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_LIB}/third_party/install/mklml/lib/mklml.dll ./mklml.dll

|

||||

COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_LIB}/third_party/install/mklml/lib/libiomp5md.dll ./libiomp5md.dll

|

||||

COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_LIB}/third_party/install/mkldnn/lib/mkldnn.dll ./mkldnn.dll

|

||||

COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_LIB}/third_party/install/mklml/lib/mklml.dll ./release/mklml.dll

|

||||

COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_LIB}/third_party/install/mklml/lib/libiomp5md.dll ./release/libiomp5md.dll

|

||||

COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_LIB}/third_party/install/mkldnn/lib/mkldnn.dll ./release/mkldnn.dll

|

||||

)

|

||||

endif()

|

||||

|

|

@ -0,0 +1,95 @@

# Visual Studio 2019 Community CMake Build Guide

PaddleOCR has been tested on Windows with `Visual Studio 2019 Community`. Microsoft has supported managing cross-platform `CMake` projects directly since `Visual Studio 2017`, but stable and complete support only arrived in `2019`. If you want to use CMake to manage the build, we recommend building with `Visual Studio 2019`.

## Prerequisites

* Visual Studio 2019
* CUDA 9.0 / CUDA 10.0 and cuDNN 7+ (only required when using the GPU version of the inference library)
* CMake 3.0+

Make sure the software above is installed. We used the Community edition of `VS2019`.

**All examples below use `D:\projects` as the working directory.**

### Step 1: Download the PaddlePaddle C++ inference library fluid_inference

Prebuilt versions of the PaddlePaddle C++ inference library are provided for different `CPU` and `CUDA` versions. Download the one that matches your setup: [C++ inference library download list](https://www.paddlepaddle.org.cn/documentation/docs/zh/develop/advanced_guide/inference_deployment/inference/windows_cpp_inference.html)

After extraction, the `D:\projects\fluid_inference` directory contains:

```
fluid_inference
├── paddle # Paddle core libraries and headers
|
├── third_party # third-party dependency libraries and headers
|
└── version.txt # version and build information
```

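### Step 2: Install and configure OpenCV

1. Download OpenCV 3.4.6 for Windows from the OpenCV website: [download link](https://sourceforge.net/projects/opencvlibrary/files/3.4.6/opencv-3.4.6-vc14_vc15.exe/download)
2. Run the downloaded executable and extract OpenCV to a directory of your choice, such as `D:\projects\opencv`
3. Configure the environment variable as follows:
    - My Computer -> Properties -> Advanced system settings -> Environment Variables
    - Find `Path` under System variables (create it if it does not exist) and double-click to edit it
    - Click New, enter the OpenCV path, such as `D:\projects\opencv\build\x64\vc14\bin`, and save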

### Step3: 使用Visual Studio 2019直接编译CMake

|

||||

|

||||

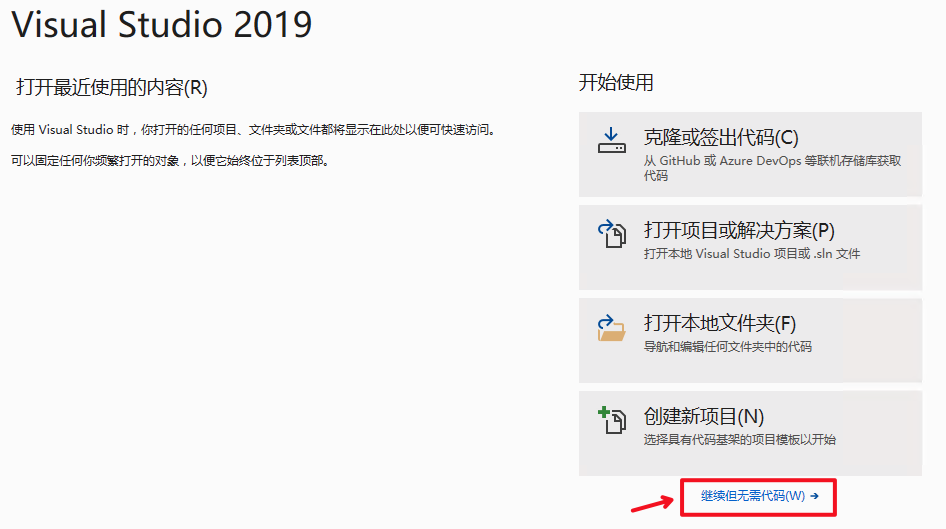

1. 打开Visual Studio 2019 Community,点击`继续但无需代码`

|

||||

|

||||

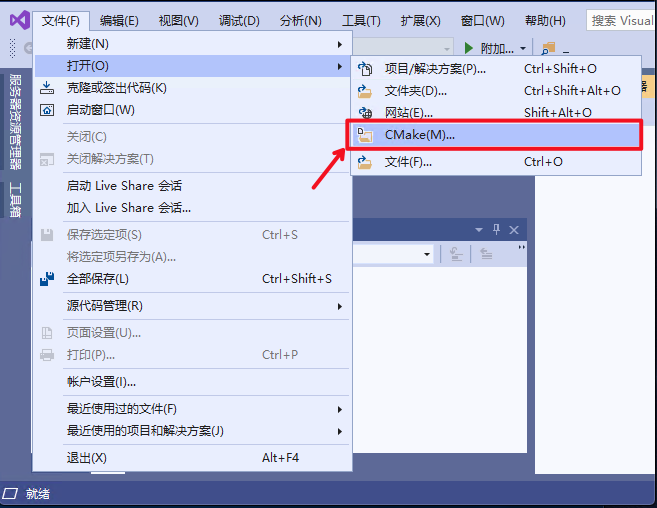

2. 点击: `文件`->`打开`->`CMake`

|

||||

|

||||

|

||||

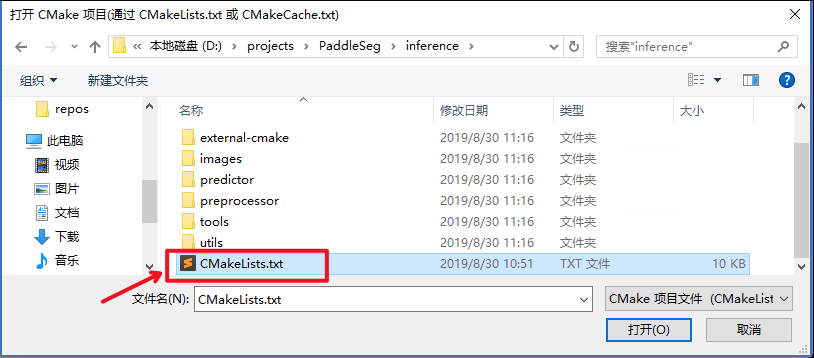

选择项目代码所在路径,并打开`CMakeList.txt`:

|

||||

|

||||

|

||||

|

||||

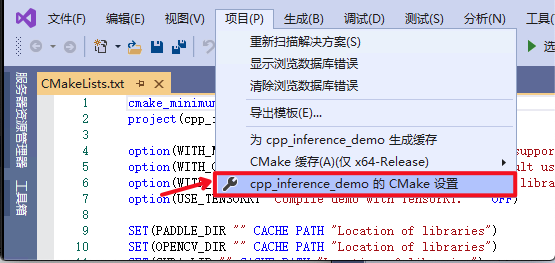

3. 点击:`项目`->`cpp_inference_demo的CMake设置`

|

||||

|

||||

|

||||

|

||||

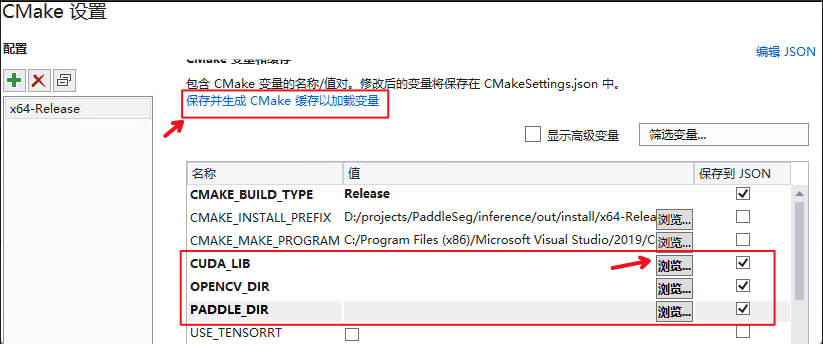

4. 点击`浏览`,分别设置编译选项指定`CUDA`、`CUDNN_LIB`、`OpenCV`、`Paddle预测库`的路径

|

||||

|

||||

三个编译参数的含义说明如下(带`*`表示仅在使用**GPU版本**预测库时指定, 其中CUDA库版本尽量对齐,**使用9.0、10.0版本,不使用9.2、10.1等版本CUDA库**):

|

||||

|

||||

| 参数名 | 含义 |

|

||||

| ---- | ---- |

|

||||

| *CUDA_LIB | CUDA的库路径 |

|

||||

| *CUDNN_LIB | CUDNN的库路径 |

|

||||

| OPENCV_DIR | OpenCV的安装路径 |

|

||||

| PADDLE_LIB | Paddle预测库的路径 |

|

||||

|

||||

**Note:**

1. If you use the `CPU` version of the inference library, uncheck `WITH_GPU`
2. If you use the `openblas` version, uncheck `WITH_MKL`

|

||||

|

||||

|

||||

|

||||

**设置完成后**, 点击上图中`保存并生成CMake缓存以加载变量`。

|

||||

|

||||

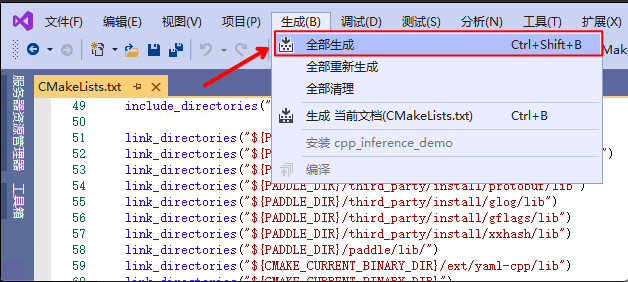

5. 点击`生成`->`全部生成`

|

||||

|

||||

|

||||

|

||||

|

||||
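To confirm which math backend a build was configured for, C++ code can branch on the `USE_MKL` macro: the `CMakeLists.txt` in this commit calls `ADD_DEFINITIONS(-DUSE_MKL)` inside `if (WITH_MKL)`. The following is a minimal sketch under that assumption. `MathBackendName` is a hypothetical helper used for illustration and is not part of PaddleOCR.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper (not part of PaddleOCR): report the math backend
// selected at configure time. USE_MKL is the macro that the CMakeLists.txt
// in this commit defines when WITH_MKL is checked.
std::string MathBackendName() {
#ifdef USE_MKL
  return "MKL";
#else
  // Assumption: a build without WITH_MKL links the openblas version.
  return "OpenBLAS";
#endif
}
```

Printing `MathBackendName()` once at startup is one way to check that the `WITH_MKL` checkbox had the intended effect.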

### Step 4: Inference and visualization

The executable built by `Visual Studio 2019` above is in the `out\build\x64-Release` directory. Open `cmd` and change to that directory:

```
cd D:\projects\PaddleOCR\deploy\cpp_infer\out\build\x64-Release
```

The executable `ocr_system.exe` is the sample inference program. Its basic usage is:

```shell
# Run inference on the image `D:\projects\PaddleOCR\doc\imgs\10.jpg`
.\ocr_system.exe D:\projects\PaddleOCR\deploy\cpp_infer\tools\config.txt D:\projects\PaddleOCR\doc\imgs\10.jpg
```

The first argument is the path to the configuration file, and the second is the path to the image to run inference on.
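The configuration file is plain text made of `key value` lines, with `#` starting a comment line (for example `visualize 1`). The sketch below shows one way such a file could be read. It is an illustration only and not the project's `Config::LoadConfig`: `ParseConfig` is a hypothetical name, and it splits on any whitespace rather than on a single space.

```cpp
#include <cassert>
#include <istream>
#include <map>
#include <sstream>
#include <string>

// Hypothetical helper (not part of PaddleOCR): parse "key value" lines,
// skipping blank lines and lines that start with '#'.
std::map<std::string, std::string> ParseConfig(std::istream &in) {
  std::map<std::string, std::string> dict;
  std::string line;
  while (std::getline(in, line)) {
    // Drop a trailing '\r' so files saved with Windows line endings parse too.
    if (!line.empty() && line.back() == '\r') line.pop_back();
    if (line.empty() || line[0] == '#') continue;
    std::istringstream fields(line);
    std::string key, value;
    // Lines without both a key and a value are ignored.
    if (fields >> key >> value) dict[key] = value;
  }
  return dict;
}
```

Passing an opened `std::ifstream` for `config.txt` to `ParseConfig` would yield a map in which, for instance, the `visualize` key can be looked up before deciding whether to write the visualization image.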

### Note

* When running the exe in a Windows terminal, the output may appear garbled. In that case, enter `CHCP 65001` in the terminal to switch its encoding from GBK (the default) to UTF-8. For a more detailed explanation, see this blog post: [https://blog.csdn.net/qq_35038153/article/details/78430359](https://blog.csdn.net/qq_35038153/article/details/78430359).
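As an alternative to typing `CHCP 65001` in every new terminal, a program can request UTF-8 console output itself through the Win32 function `SetConsoleOutputCP`. The sketch below is a suggestion, not something the sample program in this commit does. `EnableUtf8ConsoleOutput` is a hypothetical helper, and it changes only the output code page.

```cpp
#include <cassert>
#ifdef _WIN32
#include <windows.h>
#endif

// Hypothetical helper: switch the console output code page to UTF-8 (65001).
// Returns true on success. On non-Windows platforms it does nothing and
// reports success, since no code page switch is needed there.
bool EnableUtf8ConsoleOutput() {
#ifdef _WIN32
  return SetConsoleOutputCP(CP_UTF8) != 0;
#else
  return true;
#endif
}
```

Calling `EnableUtf8ConsoleOutput()` at the start of `main`, before any text is printed, would be the natural place for it.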

@@ -7,6 +7,9 @@
 
 ### Preparation
 - Linux environment; docker is recommended.
+- Windows environment; building with `Visual Studio 2019 Community` is currently supported.
 
+* This document mainly covers the PaddleOCR C++ inference workflow on Linux. To run C++ inference with the inference library on Windows, see the [Windows build guide](./docs/windows_vs2019_build.md) for build instructions.
+
 ### 1.1 Build the OpenCV library
 

@@ -44,7 +44,7 @@ Config::LoadConfig(const std::string &config_path) {
   std::map<std::string, std::string> dict;
   for (int i = 0; i < config.size(); i++) {
     // pass for empty line or comment
-    if (config[i].size() <= 1 or config[i][0] == '#') {
+    if (config[i].size() <= 1 || config[i][0] == '#') {
       continue;
     }
     std::vector<std::string> res = split(config[i], " ");

@@ -39,22 +39,28 @@ std::vector<std::string> Utility::ReadDict(const std::string &path) {
 void Utility::VisualizeBboxes(
     const cv::Mat &srcimg,
     const std::vector<std::vector<std::vector<int>>> &boxes) {
-  cv::Point rook_points[boxes.size()][4];
-  for (int n = 0; n < boxes.size(); n++) {
-    for (int m = 0; m < boxes[0].size(); m++) {
-      rook_points[n][m] = cv::Point(int(boxes[n][m][0]), int(boxes[n][m][1]));
-    }
-  }
+  // cv::Point rook_points[boxes.size()][4];
+  // for (int n = 0; n < boxes.size(); n++) {
+  //   for (int m = 0; m < boxes[0].size(); m++) {
+  //     rook_points[n][m] = cv::Point(int(boxes[n][m][0]),
+  //     int(boxes[n][m][1]));
+  //   }
+  // }
   cv::Mat img_vis;
   srcimg.copyTo(img_vis);
   for (int n = 0; n < boxes.size(); n++) {
-    const cv::Point *ppt[1] = {rook_points[n]};
+    cv::Point rook_points[4];
+    for (int m = 0; m < boxes[n].size(); m++) {
+      rook_points[m] = cv::Point(int(boxes[n][m][0]), int(boxes[n][m][1]));
+    }
+
+    const cv::Point *ppt[1] = {rook_points};
     int npt[] = {4};
     cv::polylines(img_vis, ppt, npt, 1, 1, CV_RGB(0, 255, 0), 2, 8, 0);
   }
 
   cv::imwrite("./ocr_vis.png", img_vis);
-  std::cout << "The detection visualized image saved in ./ocr_vis.png.pn"
+  std::cout << "The detection visualized image saved in ./ocr_vis.png"
             << std::endl;
 }
 

@@ -1,8 +1,7 @@
-
 OPENCV_DIR=your_opencv_dir
 LIB_DIR=your_paddle_inference_dir
 CUDA_LIB_DIR=your_cuda_lib_dir
-CUDNN_LIB_DIR=/your_cudnn_lib_dir
+CUDNN_LIB_DIR=your_cudnn_lib_dir
 
 BUILD_DIR=build
 rm -rf ${BUILD_DIR}
@@ -11,7 +10,6 @@ cd ${BUILD_DIR}
 cmake .. \
     -DPADDLE_LIB=${LIB_DIR} \
     -DWITH_MKL=ON \
-    -DDEMO_NAME=ocr_system \
     -DWITH_GPU=OFF \
     -DWITH_STATIC_LIB=OFF \
     -DUSE_TENSORRT=OFF \

@@ -15,8 +15,7 @@ det_model_dir ./inference/det_db
 # rec config
 rec_model_dir ./inference/rec_crnn
 char_list_file ../../ppocr/utils/ppocr_keys_v1.txt
-img_path ../../doc/imgs/11.jpg
 
 # show the detection results
-visualize 0
+visualize 1
 
