Merge remote-tracking branch 'origin/android_demo' into android_demo


@@ -4,4 +4,5 @@ include README.md
 recursive-include ppocr/utils *.txt utility.py logging.py
 recursive-include ppocr/data/ *.py
 recursive-include ppocr/postprocess *.py
-recursive-include tools/infer *.py
+recursive-include tools/infer *.py
+recursive-include ppocr/utils/e2e_utils/ *.py

@@ -147,6 +147,7 @@ class MainWindow(QMainWindow, WindowMixin):
 self.itemsToShapesbox = {}
 self.shapesToItemsbox = {}
 self.prevLabelText = getStr('tempLabel')
+self.noLabelText = getStr('nullLabel')
 self.model = 'paddle'
 self.PPreader = None
 self.autoSaveNum = 5

@@ -1020,7 +1021,7 @@ class MainWindow(QMainWindow, WindowMixin):
 item.setText(str([(int(p.x()), int(p.y())) for p in shape.points]))
 self.updateComboBox()
 
-def updateComboBox(self): # TODO: seems to be unused
+def updateComboBox(self):
 # Get the unique labels and add them to the Combobox.
 itemsTextList = [str(self.labelList.item(i).text()) for i in range(self.labelList.count())]
 

@@ -1040,7 +1041,7 @@ class MainWindow(QMainWindow, WindowMixin):
 return dict(label=s.label, # str
 line_color=s.line_color.getRgb(),
 fill_color=s.fill_color.getRgb(),
-points=[(p.x(), p.y()) for p in s.points], # QPonitF
+points=[(int(p.x()), int(p.y())) for p in s.points], # QPonitF
 # add chris
 difficult=s.difficult) # bool
 

@@ -1069,7 +1070,7 @@ class MainWindow(QMainWindow, WindowMixin):
 # print('Image:{0} -> Annotation:{1}'.format(self.filePath, annotationFilePath))
 return True
 except:
-self.errorMessage(u'Error saving label data')
+self.errorMessage(u'Error saving label data', u'Error saving label data')
 return False
 
 def copySelectedShape(self):

@@ -1802,10 +1803,14 @@ class MainWindow(QMainWindow, WindowMixin):
 result.insert(0, box)
 print('result in reRec is ', result)
 self.result_dic.append(result)
-if result[1][0] == shape.label:
-print('label no change')
-else:
-rec_flag += 1
+else:
+print('Can not recognise the box')
+self.result_dic.append([box,(self.noLabelText,0)])
+
+if self.noLabelText == shape.label or result[1][0] == shape.label:
+print('label no change')
+else:
+rec_flag += 1
 
 if len(self.result_dic) > 0 and rec_flag > 0:
 self.saveFile(mode='Auto')

@@ -1836,9 +1841,14 @@ class MainWindow(QMainWindow, WindowMixin):
 print('label no change')
 else:
 shape.label = result[1][0]
-self.singleLabel(shape)
 self.setDirty()
 print(box)
+else:
+print('Can not recognise the box')
+if self.noLabelText == shape.label:
+print('label no change')
+else:
+shape.label = self.noLabelText
+self.singleLabel(shape)
+self.setDirty()
 
 def autolcm(self):
 vbox = QVBoxLayout()

@@ -45,7 +45,7 @@ class Canvas(QWidget):
 CREATE, EDIT = list(range(2))
 _fill_drawing = False # draw shadows
 
-epsilon = 11.0
+epsilon = 5.0
 
 def __init__(self, *args, **kwargs):
 super(Canvas, self).__init__(*args, **kwargs)

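The hunk above lowers `Canvas.epsilon` from 11.0 to 5.0. Assuming it plays the same role as in labelImg's canvas, from which this canvas appears to derive (a pixel radius below which the cursor counts as "close enough" to a vertex or to the polygon's starting point), the change makes snapping stricter. The sketch below is a standalone illustration of that distance test only; `close_enough` is a hypothetical helper, not PPOCRLabel's API.

```python
import math

OLD_EPSILON = 11.0  # Canvas.epsilon before this change
NEW_EPSILON = 5.0   # Canvas.epsilon after this change


def close_enough(p1, p2, epsilon):
    """Return True if two (x, y) points are strictly closer than epsilon pixels."""
    return math.hypot(p1[0] - p2[0], p1[1] - p2[1]) < epsilon


if __name__ == "__main__":
    start, cursor = (100.0, 100.0), (106.0, 108.0)  # 10 px apart
    print(close_enough(start, cursor, OLD_EPSILON))  # True: within 11 px
    print(close_enough(start, cursor, NEW_EPSILON))  # False: outside 5 px
```

Under this assumption, a click 10 px from the first vertex would have closed the shape before the change but no longer does.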
@@ -87,6 +87,7 @@ creatPolygon=四点标注
 drawSquares=正方形标注
 saveRec=保存识别结果
 tempLabel=待识别
+nullLabel=无法识别
 steps=操作步骤
 choseModelLg=选择模型语言
 cancel=取消

@@ -77,7 +77,7 @@ IR=Image Resize
 autoRecognition=Auto Recognition
 reRecognition=Re-recognition
 mfile=File
-medit=Eidt
+medit=Edit
 mview=View
 mhelp=Help
 iconList=Icon List

@@ -87,6 +87,7 @@ creatPolygon=Create Quadrilateral
 drawSquares=Draw Squares
 saveRec=Save Recognition Result
 tempLabel=TEMPORARY
+nullLabel=NULL
 steps=Steps
 choseModelLg=Choose Model Language
 cancel=Cancel

@@ -32,7 +32,8 @@ PaddleOCR supports both dynamic graph and static graph programming paradigm
 
 <div align="center">
 <img src="doc/imgs_results/ch_ppocr_mobile_v2.0/test_add_91.jpg" width="800">
-<img src="doc/imgs_results/ch_ppocr_mobile_v2.0/00018069.jpg" width="800">
+<img src="doc/imgs_results/multi_lang/img_01.jpg" width="800">
+<img src="doc/imgs_results/multi_lang/img_02.jpg" width="800">
 </div>
 
 The above pictures are the visualizations of the general ppocr_server model. For more effect pictures, please see [More visualizations](./doc/doc_en/visualization_en.md).

@@ -59,8 +59,10 @@ Optimizer:
 PostProcess:
   name: PGPostProcess
   score_thresh: 0.5
+  mode: fast # fast or slow two ways
 Metric:
   name: E2EMetric
+  gt_mat_dir: # the dir of gt_mat
   character_dict_path: ppocr/utils/ic15_dict.txt
   main_indicator: f_score_e2e
 

@@ -106,7 +108,7 @@ Eval:
           order: 'hwc'
       - ToCHWImage:
       - KeepKeys:
-          keep_keys: [ 'image', 'shape', 'polys', 'strs', 'tags' ]
+          keep_keys: [ 'image', 'shape', 'polys', 'strs', 'tags', 'img_id']
   loader:
     shuffle: False
     drop_last: False

@@ -19,21 +19,56 @@ import logging
 logging.basicConfig(level=logging.INFO)
 
 support_list = {
-'it':'italian', 'xi':'spanish', 'pu':'portuguese', 'ru':'russian', 'ar':'arabic',
-'ta':'tamil', 'ug':'uyghur', 'fa':'persian', 'ur':'urdu', 'rs':'serbian latin',
-'oc':'occitan', 'rsc':'serbian cyrillic', 'bg':'bulgarian', 'uk':'ukranian', 'be':'belarusian',
-'te':'telugu', 'ka':'kannada', 'chinese_cht':'chinese tradition','hi':'hindi','mr':'marathi',
-'ne':'nepali',
+'it': 'italian',
+'xi': 'spanish',
+'pu': 'portuguese',
+'ru': 'russian',
+'ar': 'arabic',
+'ta': 'tamil',
+'ug': 'uyghur',
+'fa': 'persian',
+'ur': 'urdu',
+'rs': 'serbian latin',
+'oc': 'occitan',
+'rsc': 'serbian cyrillic',
+'bg': 'bulgarian',
+'uk': 'ukranian',
+'be': 'belarusian',
+'te': 'telugu',
+'ka': 'kannada',
+'chinese_cht': 'chinese tradition',
+'hi': 'hindi',
+'mr': 'marathi',
+'ne': 'nepali',
 }
-assert(
-os.path.isfile("./rec_multi_language_lite_train.yml")
-),"Loss basic configuration file rec_multi_language_lite_train.yml.\
+
+latin_lang = [
+'af', 'az', 'bs', 'cs', 'cy', 'da', 'de', 'es', 'et', 'fr', 'ga', 'hr',
+'hu', 'id', 'is', 'it', 'ku', 'la', 'lt', 'lv', 'mi', 'ms', 'mt', 'nl',
+'no', 'oc', 'pi', 'pl', 'pt', 'ro', 'rs_latin', 'sk', 'sl', 'sq', 'sv',
+'sw', 'tl', 'tr', 'uz', 'vi', 'latin'
+]
+arabic_lang = ['ar', 'fa', 'ug', 'ur']
+cyrillic_lang = [
+'ru', 'rs_cyrillic', 'be', 'bg', 'uk', 'mn', 'abq', 'ady', 'kbd', 'ava',
+'dar', 'inh', 'che', 'lbe', 'lez', 'tab', 'cyrillic'
+]
+devanagari_lang = [
+'hi', 'mr', 'ne', 'bh', 'mai', 'ang', 'bho', 'mah', 'sck', 'new', 'gom',
+'sa', 'bgc', 'devanagari'
+]
+multi_lang = latin_lang + arabic_lang + cyrillic_lang + devanagari_lang
+
+assert (os.path.isfile("./rec_multi_language_lite_train.yml")
+), "Loss basic configuration file rec_multi_language_lite_train.yml.\
 You can download it from \
 https://github.com/PaddlePaddle/PaddleOCR/tree/dygraph/configs/rec/multi_language/"
-
-global_config = yaml.load(open("./rec_multi_language_lite_train.yml", 'rb'), Loader=yaml.Loader)
+
+global_config = yaml.load(
+open("./rec_multi_language_lite_train.yml", 'rb'), Loader=yaml.Loader)
 project_path = os.path.abspath(os.path.join(os.getcwd(), "../../../"))
 
+
 class ArgsParser(ArgumentParser):
 def __init__(self):
 super(ArgsParser, self).__init__(

@@ -41,15 +76,30 @@ class ArgsParser(ArgumentParser):
 self.add_argument(
 "-o", "--opt", nargs='+', help="set configuration options")
 self.add_argument(
-"-l", "--language", nargs='+', help="set language type, support {}".format(support_list))
+"-l",
+"--language",
+nargs='+',
+help="set language type, support {}".format(support_list))
 self.add_argument(
-"--train",type=str,help="you can use this command to change the train dataset default path")
+"--train",
+type=str,
+help="you can use this command to change the train dataset default path"
+)
 self.add_argument(
-"--val",type=str,help="you can use this command to change the eval dataset default path")
+"--val",
+type=str,
+help="you can use this command to change the eval dataset default path"
+)
 self.add_argument(
-"--dict",type=str,help="you can use this command to change the dictionary default path")
+"--dict",
+type=str,
+help="you can use this command to change the dictionary default path"
+)
 self.add_argument(
-"--data_dir",type=str,help="you can use this command to change the dataset default root path")
+"--data_dir",
+type=str,
+help="you can use this command to change the dataset default root path"
+)
 
 def parse_args(self, argv=None):
 args = super(ArgsParser, self).parse_args(argv)

@@ -68,21 +118,37 @@ class ArgsParser(ArgumentParser):
 return config
 
 def _set_language(self, type):
-assert(type),"please use -l or --language to choose language type"
+lang = type[0]
+assert (type), "please use -l or --language to choose language type"
 assert(
-type[0] in support_list.keys()
+lang in support_list.keys() or lang in multi_lang
 ),"the sub_keys(-l or --language) can only be one of support list: \n{},\nbut get: {}, " \
-"please check your running command".format(support_list, type)
-global_config['Global']['character_dict_path'] = 'ppocr/utils/dict/{}_dict.txt'.format(type[0])
-global_config['Global']['save_model_dir'] = './output/rec_{}_lite'.format(type[0])
-global_config['Train']['dataset']['label_file_list'] = ["train_data/{}_train.txt".format(type[0])]
-global_config['Eval']['dataset']['label_file_list'] = ["train_data/{}_val.txt".format(type[0])]
-global_config['Global']['character_type'] = type[0]
-assert(
-os.path.isfile(os.path.join(project_path,global_config['Global']['character_dict_path']))
-),"Loss default dictionary file {}_dict.txt.You can download it from \
-https://github.com/PaddlePaddle/PaddleOCR/tree/dygraph/ppocr/utils/dict/".format(type[0])
-return type[0]
+"please check your running command".format(multi_lang, type)
+if lang in latin_lang:
+lang = "latin"
+elif lang in arabic_lang:
+lang = "arabic"
+elif lang in cyrillic_lang:
+lang = "cyrillic"
+elif lang in devanagari_lang:
+lang = "devanagari"
+global_config['Global'][
+'character_dict_path'] = 'ppocr/utils/dict/{}_dict.txt'.format(lang)
+global_config['Global'][
+'save_model_dir'] = './output/rec_{}_lite'.format(lang)
+global_config['Train']['dataset'][
+'label_file_list'] = ["train_data/{}_train.txt".format(lang)]
+global_config['Eval']['dataset'][
+'label_file_list'] = ["train_data/{}_val.txt".format(lang)]
+global_config['Global']['character_type'] = lang
+assert (
+os.path.isfile(
+os.path.join(project_path, global_config['Global'][
+'character_dict_path']))
+), "Loss default dictionary file {}_dict.txt.You can download it from \
+https://github.com/PaddlePaddle/PaddleOCR/tree/dygraph/ppocr/utils/dict/".format(
+lang)
+return lang
 
 
 def merge_config(config):

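With this change, `_set_language` no longer builds paths from the raw `-l` code: a code found in one of the script-family lists is first collapsed to `latin`, `arabic`, `cyrillic` or `devanagari`, so every language in a family shares one dictionary, one output directory and one pair of label files. The standalone sketch below mirrors that lookup; `resolve_language` and `derived_settings` are hypothetical helper names, the membership assertion is left out, and the lists are copied from the hunk above.

```python
# Script-family lists copied from the multi-language config generator above.
LATIN_LANG = [
    'af', 'az', 'bs', 'cs', 'cy', 'da', 'de', 'es', 'et', 'fr', 'ga', 'hr',
    'hu', 'id', 'is', 'it', 'ku', 'la', 'lt', 'lv', 'mi', 'ms', 'mt', 'nl',
    'no', 'oc', 'pi', 'pl', 'pt', 'ro', 'rs_latin', 'sk', 'sl', 'sq', 'sv',
    'sw', 'tl', 'tr', 'uz', 'vi', 'latin'
]
ARABIC_LANG = ['ar', 'fa', 'ug', 'ur']
CYRILLIC_LANG = [
    'ru', 'rs_cyrillic', 'be', 'bg', 'uk', 'mn', 'abq', 'ady', 'kbd', 'ava',
    'dar', 'inh', 'che', 'lbe', 'lez', 'tab', 'cyrillic'
]
DEVANAGARI_LANG = [
    'hi', 'mr', 'ne', 'bh', 'mai', 'ang', 'bho', 'mah', 'sck', 'new', 'gom',
    'sa', 'bgc', 'devanagari'
]

# Checked in the same order as the if/elif chain in _set_language.
SCRIPT_FAMILIES = (
    ("latin", LATIN_LANG),
    ("arabic", ARABIC_LANG),
    ("cyrillic", CYRILLIC_LANG),
    ("devanagari", DEVANAGARI_LANG),
)


def resolve_language(lang):
    """Collapse a language code to its script family.

    Codes that belong to no family (for example 'ta' or 'chinese_cht')
    are returned unchanged, as in the original if/elif chain.
    """
    for family, members in SCRIPT_FAMILIES:
        if lang in members:
            return family
    return lang


def derived_settings(lang):
    """Return the config values _set_language derives from the code."""
    lang = resolve_language(lang)
    return {
        "character_dict_path": "ppocr/utils/dict/{}_dict.txt".format(lang),
        "save_model_dir": "./output/rec_{}_lite".format(lang),
        "train_label_file": "train_data/{}_train.txt".format(lang),
        "eval_label_file": "train_data/{}_val.txt".format(lang),
        "character_type": lang,
    }


if __name__ == "__main__":
    for code in ("fr", "ur", "uk", "mr", "ta"):
        print(code, "->", resolve_language(code))
```

For example, `fr` and `de` both resolve to `latin`, while `ta` keeps its own dictionary path `ppocr/utils/dict/ta_dict.txt`.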
@@ -110,43 +176,51 @@ def merge_config(config):
 cur[sub_key] = value
 else:
 cur = cur[sub_key]
 
-def loss_file(path):
-assert(
-os.path.exists(path)
-),"There is no such file:{},Please do not forget to put in the specified file".format(path)
-
-
+
+def loss_file(path):
+assert (
+os.path.exists(path)
+), "There is no such file:{},Please do not forget to put in the specified file".format(
+path)
+
+
 if __name__ == '__main__':
 FLAGS = ArgsParser().parse_args()
 merge_config(FLAGS.opt)
 save_file_path = 'rec_{}_lite_train.yml'.format(FLAGS.language)
 if os.path.isfile(save_file_path):
 os.remove(save_file_path)
-
+
 if FLAGS.train:
 global_config['Train']['dataset']['label_file_list'] = [FLAGS.train]
-train_label_path = os.path.join(project_path,FLAGS.train)
+train_label_path = os.path.join(project_path, FLAGS.train)
 loss_file(train_label_path)
 if FLAGS.val:
 global_config['Eval']['dataset']['label_file_list'] = [FLAGS.val]
-eval_label_path = os.path.join(project_path,FLAGS.val)
+eval_label_path = os.path.join(project_path, FLAGS.val)
 loss_file(eval_label_path)
 if FLAGS.dict:
 global_config['Global']['character_dict_path'] = FLAGS.dict
-dict_path = os.path.join(project_path,FLAGS.dict)
+dict_path = os.path.join(project_path, FLAGS.dict)
 loss_file(dict_path)
 if FLAGS.data_dir:
 global_config['Eval']['dataset']['data_dir'] = FLAGS.data_dir
 global_config['Train']['dataset']['data_dir'] = FLAGS.data_dir
-data_dir = os.path.join(project_path,FLAGS.data_dir)
+data_dir = os.path.join(project_path, FLAGS.data_dir)
 loss_file(data_dir)
-
+
 with open(save_file_path, 'w') as f:
-yaml.dump(dict(global_config), f, default_flow_style=False, sort_keys=False)
+yaml.dump(
+dict(global_config), f, default_flow_style=False, sort_keys=False)
 logging.info("Project path is :{}".format(project_path))
-logging.info("Train list path set to :{}".format(global_config['Train']['dataset']['label_file_list'][0]))
-logging.info("Eval list path set to :{}".format(global_config['Eval']['dataset']['label_file_list'][0]))
-logging.info("Dataset root path set to :{}".format(global_config['Eval']['dataset']['data_dir']))
-logging.info("Dict path set to :{}".format(global_config['Global']['character_dict_path']))
-logging.info("Config file set to :configs/rec/multi_language/{}".format(save_file_path))
+logging.info("Train list path set to :{}".format(global_config['Train'][
+'dataset']['label_file_list'][0]))
+logging.info("Eval list path set to :{}".format(global_config['Eval'][
+'dataset']['label_file_list'][0]))
+logging.info("Dataset root path set to :{}".format(global_config['Eval'][
+'dataset']['data_dir']))
+logging.info("Dict path set to :{}".format(global_config['Global'][
+'character_dict_path']))
+logging.info("Config file set to :configs/rec/multi_language/{}".
+format(save_file_path))

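The `__main__` block above applies each optional `--train`, `--val`, `--dict` and `--data_dir` value to the loaded config and then checks, via `loss_file`, that the path exists under `project_path`. A minimal standalone sketch of the same flow follows, assuming a plain nested dict in place of the YAML-loaded `global_config`; `apply_overrides` and `require_file` are hypothetical names. It raises `FileNotFoundError` instead of using `assert` (assertions are skipped under `python -O`) and works on a copy, so a failed check leaves the caller's config untouched.

```python
import copy
import os


def require_file(path):
    """Counterpart of loss_file(): fail if a user-supplied path does not exist."""
    if not os.path.exists(path):
        raise FileNotFoundError(
            "There is no such file: {}. Please do not forget to put in the "
            "specified file".format(path))


def apply_overrides(config, project_path, train=None, val=None,
                    dict_path=None, data_dir=None):
    """Return a copy of config with the optional overrides applied.

    Each override is written into the same nested key the script uses and
    its path is checked relative to project_path.
    """
    config = copy.deepcopy(config)
    if train:
        config["Train"]["dataset"]["label_file_list"] = [train]
        require_file(os.path.join(project_path, train))
    if val:
        config["Eval"]["dataset"]["label_file_list"] = [val]
        require_file(os.path.join(project_path, val))
    if dict_path:
        config["Global"]["character_dict_path"] = dict_path
        require_file(os.path.join(project_path, dict_path))
    if data_dir:
        # One root directory is shared by the train and eval datasets.
        config["Train"]["dataset"]["data_dir"] = data_dir
        config["Eval"]["dataset"]["data_dir"] = data_dir
        require_file(os.path.join(project_path, data_dir))
    return config
```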
@@ -0,0 +1,111 @@
+Global:
+  use_gpu: true
+  epoch_num: 500
+  log_smooth_window: 20
+  print_batch_step: 10
+  save_model_dir: ./output/rec_arabic_lite
+  save_epoch_step: 3
+  eval_batch_step:
+  - 0
+  - 2000
+  cal_metric_during_train: true
+  pretrained_model: null
+  checkpoints: null
+  save_inference_dir: null
+  use_visualdl: false
+  infer_img: null
+  character_dict_path: ppocr/utils/dict/arabic_dict.txt
+  character_type: arabic
+  max_text_length: 25
+  infer_mode: false
+  use_space_char: true
+Optimizer:
+  name: Adam
+  beta1: 0.9
+  beta2: 0.999
+  lr:
+    name: Cosine
+    learning_rate: 0.001
+  regularizer:
+    name: L2
+    factor: 1.0e-05
+Architecture:
+  model_type: rec
+  algorithm: CRNN
+  Transform: null
+  Backbone:
+    name: MobileNetV3
+    scale: 0.5
+    model_name: small
+    small_stride:
+    - 1
+    - 2
+    - 2
+    - 2
+  Neck:
+    name: SequenceEncoder
+    encoder_type: rnn
+    hidden_size: 48
+  Head:
+    name: CTCHead
+    fc_decay: 1.0e-05
+Loss:
+  name: CTCLoss
+PostProcess:
+  name: CTCLabelDecode
+Metric:
+  name: RecMetric
+  main_indicator: acc
+Train:
+  dataset:
+    name: SimpleDataSet
+    data_dir: train_data/
+    label_file_list:
+    - train_data/arabic_train.txt
+    transforms:
+    - DecodeImage:
+        img_mode: BGR
+        channel_first: false
+    - RecAug: null
+    - CTCLabelEncode: null
+    - RecResizeImg:
+        image_shape:
+        - 3
+        - 32
+        - 320
+    - KeepKeys:
+        keep_keys:
+        - image
+        - label
+        - length
+  loader:
+    shuffle: true
+    batch_size_per_card: 256
+    drop_last: true
+    num_workers: 8
+Eval:
+  dataset:
+    name: SimpleDataSet
+    data_dir: train_data/
+    label_file_list:
+    - train_data/arabic_val.txt
+    transforms:
+    - DecodeImage:
+        img_mode: BGR
+        channel_first: false
+    - CTCLabelEncode: null
+    - RecResizeImg:
+        image_shape:
+        - 3
+        - 32
+        - 320
+    - KeepKeys:
+        keep_keys:
+        - image
+        - label
+        - length
+  loader:
+    shuffle: false
+    drop_last: false
+    batch_size_per_card: 256
+    num_workers: 8

@@ -0,0 +1,111 @@
+Global:
+  use_gpu: true
+  epoch_num: 500
+  log_smooth_window: 20
+  print_batch_step: 10
+  save_model_dir: ./output/rec_cyrillic_lite
+  save_epoch_step: 3
+  eval_batch_step:
+  - 0
+  - 2000
+  cal_metric_during_train: true
+  pretrained_model: null
+  checkpoints: null
+  save_inference_dir: null
+  use_visualdl: false
+  infer_img: null
+  character_dict_path: ppocr/utils/dict/cyrillic_dict.txt
+  character_type: cyrillic
+  max_text_length: 25
+  infer_mode: false
+  use_space_char: true
+Optimizer:
+  name: Adam
+  beta1: 0.9
+  beta2: 0.999
+  lr:
+    name: Cosine
+    learning_rate: 0.001
+  regularizer:
+    name: L2
+    factor: 1.0e-05
+Architecture:
+  model_type: rec
+  algorithm: CRNN
+  Transform: null
+  Backbone:
+    name: MobileNetV3
+    scale: 0.5
+    model_name: small
+    small_stride:
+    - 1
+    - 2
+    - 2
+    - 2
+  Neck:
+    name: SequenceEncoder
+    encoder_type: rnn
+    hidden_size: 48
+  Head:
+    name: CTCHead
+    fc_decay: 1.0e-05
+Loss:
+  name: CTCLoss
+PostProcess:
+  name: CTCLabelDecode
+Metric:
+  name: RecMetric
+  main_indicator: acc
+Train:
+  dataset:
+    name: SimpleDataSet
+    data_dir: train_data/
+    label_file_list:
+    - train_data/cyrillic_train.txt
+    transforms:
+    - DecodeImage:
+        img_mode: BGR
+        channel_first: false
+    - RecAug: null
+    - CTCLabelEncode: null
+    - RecResizeImg:
+        image_shape:
+        - 3
+        - 32
+        - 320
+    - KeepKeys:
+        keep_keys:
+        - image
+        - label
+        - length
+  loader:
+    shuffle: true
+    batch_size_per_card: 256
+    drop_last: true
+    num_workers: 8
+Eval:
+  dataset:
+    name: SimpleDataSet
+    data_dir: train_data/
+    label_file_list:
+    - train_data/cyrillic_val.txt
+    transforms:
+    - DecodeImage:
+        img_mode: BGR
+        channel_first: false
+    - CTCLabelEncode: null
+    - RecResizeImg:
+        image_shape:
+        - 3
+        - 32
+        - 320
+    - KeepKeys:
+        keep_keys:
+        - image
+        - label
+        - length
+  loader:
+    shuffle: false
+    drop_last: false
+    batch_size_per_card: 256
+    num_workers: 8

@@ -0,0 +1,111 @@
+Global:
+  use_gpu: true
+  epoch_num: 500
+  log_smooth_window: 20
+  print_batch_step: 10
+  save_model_dir: ./output/rec_devanagari_lite
+  save_epoch_step: 3
+  eval_batch_step:
+  - 0
+  - 2000
+  cal_metric_during_train: true
+  pretrained_model: null
+  checkpoints: null
+  save_inference_dir: null
+  use_visualdl: false
+  infer_img: null
+  character_dict_path: ppocr/utils/dict/devanagari_dict.txt
+  character_type: devanagari
+  max_text_length: 25
+  infer_mode: false
+  use_space_char: true
+Optimizer:
+  name: Adam
+  beta1: 0.9
+  beta2: 0.999
+  lr:
+    name: Cosine
+    learning_rate: 0.001
+  regularizer:
+    name: L2
+    factor: 1.0e-05
+Architecture:
+  model_type: rec
+  algorithm: CRNN
+  Transform: null
+  Backbone:
+    name: MobileNetV3
+    scale: 0.5
+    model_name: small
+    small_stride:
+    - 1
+    - 2
+    - 2
+    - 2
+  Neck:
+    name: SequenceEncoder
+    encoder_type: rnn
+    hidden_size: 48
+  Head:
+    name: CTCHead
+    fc_decay: 1.0e-05
+Loss:
+  name: CTCLoss
+PostProcess:
+  name: CTCLabelDecode
+Metric:
+  name: RecMetric
+  main_indicator: acc
+Train:
+  dataset:
+    name: SimpleDataSet
+    data_dir: train_data/
+    label_file_list:
+    - train_data/devanagari_train.txt
+    transforms:
+    - DecodeImage:
+        img_mode: BGR
+        channel_first: false
+    - RecAug: null
+    - CTCLabelEncode: null
+    - RecResizeImg:
+        image_shape:
+        - 3
+        - 32
+        - 320
+    - KeepKeys:
+        keep_keys:
+        - image
+        - label
+        - length
+  loader:
+    shuffle: true
+    batch_size_per_card: 256
+    drop_last: true
+    num_workers: 8
+Eval:
+  dataset:
+    name: SimpleDataSet
+    data_dir: train_data/
+    label_file_list:
+    - train_data/devanagari_val.txt
+    transforms:
+    - DecodeImage:
+        img_mode: BGR
+        channel_first: false
+    - CTCLabelEncode: null
+    - RecResizeImg:
+        image_shape:
+        - 3
+        - 32
+        - 320
+    - KeepKeys:
+        keep_keys:
+        - image
+        - label
+        - length
+  loader:
+    shuffle: false
+    drop_last: false
+    batch_size_per_card: 256
+    num_workers: 8

@@ -15,11 +15,11 @@ Global:
   use_visualdl: False
   infer_img:
   # for data or label process
-  character_dict_path: ppocr/utils/dict/en_dict.txt
+  character_dict_path: ppocr/utils/en_dict.txt
   character_type: EN
   max_text_length: 25
   infer_mode: False
-  use_space_char: False
+  use_space_char: True
 
 
 Optimizer:

@@ -0,0 +1,111 @@
+Global:
+  use_gpu: true
+  epoch_num: 500
+  log_smooth_window: 20
+  print_batch_step: 10
+  save_model_dir: ./output/rec_latin_lite
+  save_epoch_step: 3
+  eval_batch_step:
+  - 0
+  - 2000
+  cal_metric_during_train: true
+  pretrained_model: null
+  checkpoints: null
+  save_inference_dir: null
+  use_visualdl: false
+  infer_img: null
+  character_dict_path: ppocr/utils/dict/latin_dict.txt
+  character_type: latin
+  max_text_length: 25
+  infer_mode: false
+  use_space_char: true
+Optimizer:
+  name: Adam
+  beta1: 0.9
+  beta2: 0.999
+  lr:
+    name: Cosine
+    learning_rate: 0.001
+  regularizer:
+    name: L2
+    factor: 1.0e-05
+Architecture:
+  model_type: rec
+  algorithm: CRNN
+  Transform: null
+  Backbone:
+    name: MobileNetV3
+    scale: 0.5
+    model_name: small
+    small_stride:
+    - 1
+    - 2
+    - 2
+    - 2
+  Neck:
+    name: SequenceEncoder
+    encoder_type: rnn
+    hidden_size: 48
+  Head:
+    name: CTCHead
+    fc_decay: 1.0e-05
+Loss:
+  name: CTCLoss
+PostProcess:
+  name: CTCLabelDecode
+Metric:
+  name: RecMetric
+  main_indicator: acc
+Train:
+  dataset:
+    name: SimpleDataSet
+    data_dir: train_data/
+    label_file_list:
+    - train_data/latin_train.txt
+    transforms:
+    - DecodeImage:
+        img_mode: BGR
+        channel_first: false
+    - RecAug: null
+    - CTCLabelEncode: null
+    - RecResizeImg:
+        image_shape:
+        - 3
+        - 32
+        - 320
+    - KeepKeys:
+        keep_keys:
+        - image
+        - label
+        - length
+  loader:
+    shuffle: true
+    batch_size_per_card: 256
+    drop_last: true
+    num_workers: 8
+Eval:
+  dataset:
+    name: SimpleDataSet
+    data_dir: train_data/
+    label_file_list:
+    - train_data/latin_val.txt
+    transforms:
+    - DecodeImage:
+        img_mode: BGR
+        channel_first: false
+    - CTCLabelEncode: null
+    - RecResizeImg:
+        image_shape:
+        - 3
+        - 32
+        - 320
+    - KeepKeys:
+        keep_keys:
+        - image
+        - label
+        - length
+  loader:
+    shuffle: false
+    drop_last: false
+    batch_size_per_card: 256
+    num_workers: 8

@@ -40,6 +40,7 @@ endif()
 if (WIN32)
 include_directories("${PADDLE_LIB}/paddle/fluid/inference")
 include_directories("${PADDLE_LIB}/paddle/include")
+link_directories("${PADDLE_LIB}/paddle/lib")
 link_directories("${PADDLE_LIB}/paddle/fluid/inference")
 find_package(OpenCV REQUIRED PATHS ${OPENCV_DIR}/build/ NO_DEFAULT_PATH)
 

@@ -140,22 +141,22 @@ else()
 endif ()
 endif()
 
-# Note: libpaddle_inference_api.so/a must put before libpaddle_fluid.so/a
+# Note: libpaddle_inference_api.so/a must put before libpaddle_inference.so/a
 if(WITH_STATIC_LIB)
 if(WIN32)
 set(DEPS
-${PADDLE_LIB}/paddle/lib/paddle_fluid${CMAKE_STATIC_LIBRARY_SUFFIX})
+${PADDLE_LIB}/paddle/lib/paddle_inference${CMAKE_STATIC_LIBRARY_SUFFIX})
 else()
 set(DEPS
-${PADDLE_LIB}/paddle/lib/libpaddle_fluid${CMAKE_STATIC_LIBRARY_SUFFIX})
+${PADDLE_LIB}/paddle/lib/libpaddle_inference${CMAKE_STATIC_LIBRARY_SUFFIX})
 endif()
 else()
 if(WIN32)
 set(DEPS
-${PADDLE_LIB}/paddle/lib/paddle_fluid${CMAKE_SHARED_LIBRARY_SUFFIX})
+${PADDLE_LIB}/paddle/lib/paddle_inference${CMAKE_SHARED_LIBRARY_SUFFIX})
 else()
 set(DEPS
-${PADDLE_LIB}/paddle/lib/libpaddle_fluid${CMAKE_SHARED_LIBRARY_SUFFIX})
+${PADDLE_LIB}/paddle/lib/libpaddle_inference${CMAKE_SHARED_LIBRARY_SUFFIX})
 endif()
 endif(WITH_STATIC_LIB)
 

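The CMake hunk above only renames the linked library from `paddle_fluid` to `paddle_inference`; the branch structure is unchanged: Windows names carry no `lib` prefix, and `WITH_STATIC_LIB` picks the static or shared suffix. The sketch below reproduces that selection in Python for illustration. The suffix table is an assumption based on CMake's usual defaults (`.lib`/`.dll` for MSVC on Windows, `.a`/`.so` on Linux), and `paddle_dep` is a hypothetical helper.

```python
# Assumed CMake defaults per platform:
# (CMAKE_STATIC_LIBRARY_SUFFIX, CMAKE_SHARED_LIBRARY_SUFFIX)
LIBRARY_SUFFIXES = {
    "windows": (".lib", ".dll"),
    "linux": (".a", ".so"),
}


def paddle_dep(paddle_lib, platform, with_static_lib):
    """Build the DEPS entry that the CMake branches select after this change."""
    static_suffix, shared_suffix = LIBRARY_SUFFIXES[platform]
    suffix = static_suffix if with_static_lib else shared_suffix
    prefix = "" if platform == "windows" else "lib"  # WIN32 branch drops 'lib'
    return "{}/paddle/lib/{}paddle_inference{}".format(paddle_lib, prefix, suffix)


if __name__ == "__main__":
    for platform in ("linux", "windows"):
        for static in (True, False):
            print(platform, static,
                  paddle_dep("/path/to/paddle_inference", platform, static))
```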

@@ -74,9 +74,10 @@ opencv3/
 
 * There are two ways to obtain the Paddle inference library; both are described in detail below.
 
+
 #### 1.2.1 Download and install directly
 
-* The [Paddle inference library website](https://www.paddlepaddle.org.cn/documentation/docs/zh/develop/guides/05_inference_deployment/inference/build_and_install_lib_cn.html) provides Linux inference libraries for different CUDA versions; check the website and choose a suitable version.
+* The [Paddle inference library website](https://www.paddlepaddle.org.cn/documentation/docs/zh/advanced_guide/inference_deployment/inference/build_and_install_lib_cn.html) provides Linux inference libraries for different CUDA versions; check the website and choose a suitable version (*an inference library built from Paddle 2.0.1 or later is recommended*).
 
 * After downloading, extract the archive as follows.
 

@@ -130,8 +131,6 @@ build/paddle_inference_install_dir/
 Here, `paddle` is the Paddle library required for C++ inference, and `version.txt` contains the version information of the current inference library.
 
 
-
-
 ## 2 Getting started
 
 ### 2.1 Export the model as an inference model

@@ -232,7 +231,7 @@ visualize 1 # 是否对结果进行可视化,为1时,会在当前文件夹
 The detection results are finally printed on the screen as follows.
 
 <div align="center">
-<img src="../imgs/cpp_infer_pred_12.png" width="600">
+<img src="./imgs/cpp_infer_pred_12.png" width="600">
 </div>
 
 

@@ -91,8 +91,8 @@ tar -xf paddle_inference.tgz
 Finally you can see the following files in the folder of `paddle_inference/`.
 
 #### 1.2.2 Compile from the source code
-* If you want to get the latest Paddle inference library features, you can download the latest code from Paddle github repository and compile the inference library from the source code.
-* You can refer to [Paddle inference library] (https://www.paddlepaddle.org.cn/documentation/docs/en/develop/guides/05_inference_deployment/inference/build_and_install_lib_en.html) to get the Paddle source code from github, and then compile To generate the latest inference library. The method of using git to access the code is as follows.
+* If you want to get the latest Paddle inference library features, you can download the latest code from Paddle github repository and compile the inference library from the source code. It is recommended to download the inference library with paddle version greater than or equal to 2.0.1.
+* You can refer to [Paddle inference library] (https://www.paddlepaddle.org.cn/documentation/docs/en/advanced_guide/inference_deployment/inference/build_and_install_lib_en.html) to get the Paddle source code from github, and then compile To generate the latest inference library. The method of using git to access the code is as follows.
 
 
 ```shell

@ -238,7 +238,7 @@ visualize 1 # Whether to visualize the results,when it is set as 1, The predic

The detection results are printed to the screen as follows.

<div align="center">
<img src="../imgs/cpp_infer_pred_12.png" width="600">
<img src="./imgs/cpp_infer_pred_12.png" width="600">
</div>


@ -13,7 +13,6 @@ inference 模型(`paddle.jit.save`保存的模型)

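- [Convert the detection model to an inference model](#检测模型转inference模型)
- [Convert the recognition model to an inference model](#识别模型转inference模型)
- [Convert the angle classification model to an inference model](#方向分类模型转inference模型)
- [Convert the end-to-end model to an inference model](#端到端模型转inference模型)

- [II. Text Detection Model Inference](#文本检测模型推理)
- [1. Ultra-lightweight Chinese Detection Model Inference](#超轻量中文检测模型推理)
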

@ -28,13 +27,10 @@ inference 模型(`paddle.jit.save`保存的模型)

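- [4. Inference with a Custom Text Recognition Dictionary](#自定义文本识别字典的推理)
- [5. Multilingual Model Inference](#多语言模型的推理)

- [IV. End-to-End Model Inference](#端到端模型推理)
- [1. PGNet End-to-End Model Inference](#PGNet端到端模型推理)

- [V. Angle Classification Model Inference](#方向识别模型推理)
- [IV. Angle Classification Model Inference](#方向识别模型推理)
- [1. Angle Classification Model Inference](#方向分类模型推理)

- [VI. Cascaded Inference of Text Detection, Angle Classification, and Text Recognition](#文本检测、方向分类和文字识别串联推理)
- [V. Cascaded Inference of Text Detection, Angle Classification, and Text Recognition](#文本检测、方向分类和文字识别串联推理)
- [1. Ultra-lightweight Chinese OCR Model Inference](#超轻量中文OCR模型推理)
- [2. Inference with Other Models](#其他模型推理)
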

@ -122,32 +118,6 @@ python3 tools/export_model.py -c configs/cls/cls_mv3.yml -o Global.pretrained_mo

├── inference.pdiparams.info # parameter info of the classification inference model; can be ignored
└── inference.pdmodel # program file of the classification inference model
```

<a name="端到端模型转inference模型"></a>
### Convert the End-to-End Model to an Inference Model

Download the end-to-end model:

```
wget -P ./ch_lite/ https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar && tar xf ./ch_lite/ch_ppocr_mobile_v2.0_cls_train.tar -C ./ch_lite/
```

Converting the end-to-end model to an inference model works the same way as for the detection model:

```
# -c: the yml configuration file of the training algorithm
# -o: optional configuration overrides
# Global.pretrained_model: path of the trained model to convert; do not add the .pdmodel, .pdopt or .pdparams suffix.
# Global.load_static_weights: must be set to False.
# Global.save_inference_dir: directory where the converted model will be saved.

python3 tools/export_model.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.pretrained_model=./ch_lite/ch_ppocr_mobile_v2.0_cls_train/best_accuracy Global.load_static_weights=False Global.save_inference_dir=./inference/e2e/
```
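
The comments above require `Global.pretrained_model` to be a checkpoint prefix without the `.pdmodel`, `.pdopt` or `.pdparams` suffix. The sketch below illustrates that rule in Python and assembles the same `tools/export_model.py` call as an argument list. `strip_model_suffix` and `build_export_cmd` are hypothetical helpers written for this example, not PaddleOCR APIs; only the flags and `-o` keys come from the command above.

```python
import os
from typing import List

# Checkpoint suffixes that must not appear in Global.pretrained_model.
MODEL_SUFFIXES = (".pdmodel", ".pdopt", ".pdparams")


def strip_model_suffix(path: str) -> str:
    """Return the checkpoint prefix, dropping one trailing model-file suffix."""
    root, ext = os.path.splitext(path)
    return root if ext in MODEL_SUFFIXES else path


def build_export_cmd(config: str, pretrained: str, save_dir: str) -> List[str]:
    """Hypothetical helper: argv for tools/export_model.py with the -o overrides above."""
    return [
        "python3", "tools/export_model.py",
        "-c", config,          # yml config of the training algorithm
        "-o",                  # optional overrides follow
        f"Global.pretrained_model={strip_model_suffix(pretrained)}",
        "Global.load_static_weights=False",
        f"Global.save_inference_dir={save_dir}",
    ]


if __name__ == "__main__":
    cmd = build_export_cmd(
        "configs/e2e/e2e_r50_vd_pg.yml",
        "./en_server_pgnetA/best_accuracy.pdparams",  # suffix gets stripped
        "./inference/e2e/",
    )
    print(" ".join(cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` from the PaddleOCR repository root should behave like typing the command by hand, assuming the config and checkpoint paths exist.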


After a successful conversion, the directory contains three files:

```
/inference/e2e/
├── inference.pdiparams # parameter file of the end-to-end inference model
├── inference.pdiparams.info # parameter info of the end-to-end inference model; can be ignored
└── inference.pdmodel # program file of the end-to-end inference model
```
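
To check that the export succeeded, a short standard-library sketch can look for the files listed above. `missing_inference_files` is a hypothetical helper; it treats `inference.pdmodel` and `inference.pdiparams` as required and skips `inference.pdiparams.info`, which the listing says can be ignored.

```python
from pathlib import Path
from typing import List

# Files written by the export step that inference needs;
# inference.pdiparams.info is deliberately left out (it can be ignored).
REQUIRED_FILES = ("inference.pdmodel", "inference.pdiparams")


def missing_inference_files(model_dir: str) -> List[str]:
    """Return the required inference files that are absent from model_dir."""
    root = Path(model_dir)
    return [name for name in REQUIRED_FILES if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_inference_files("./inference/e2e/")
    if missing:
        print("export incomplete, missing:", ", ".join(missing))
    else:
        print("inference model files found")
```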


<a name="文本检测模型推理"></a>
## II. Text Detection Model Inference


@ -362,38 +332,8 @@ python3 tools/infer/predict_rec.py --image_dir="./doc/imgs_words/korean/1.jpg" -

Predicts of ./doc/imgs_words/korean/1.jpg:('바탕으로', 0.9948904)
```

<a name="端到端模型推理"></a>
## IV. End-to-End Model Inference

By default, end-to-end model inference uses the PGNet configuration parameters. If you use a model other than PGNet, pass the corresponding parameters at inference time to adapt to that algorithm; see below for details.

<a name="PGNet端到端模型推理"></a>
### 1. PGNet End-to-End Model Inference
#### (1). Quadrilateral Text Detection Model (ICDAR2015)
First, convert the model saved during PGNet end-to-end training into an inference model. Taking a model with a Resnet50_vd backbone trained on the ICDAR2015 English dataset as an example ([model download link](https://paddleocr.bj.bcebos.com/dygraph_v2.0/pgnet/en_server_pgnetA.tar)), convert it with the following command:

```
python3 tools/export_model.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.pretrained_model=./en_server_pgnetA/iter_epoch_450 Global.load_static_weights=False Global.save_inference_dir=./inference/e2e
```

**For PGNet end-to-end model inference, set the parameter `--e2e_algorithm="PGNet"`** and run the following command:

```
python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/imgs_en/img_10.jpg" --e2e_model_dir="./inference/e2e/" --e2e_pgnet_polygon=False
```
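
The PGNet commands in this document differ only in a few flags: `--e2e_pgnet_polygon` switches between the quadrilateral (ICDAR2015) and curved-text (Total-Text) cases, and `--use_gpu=False` selects CPU prediction. A hypothetical Python helper that assembles the argument list from those choices is sketched below; the flag names are copied from the commands in this document, while the helper itself is illustrative.

```python
from typing import List


def build_predict_e2e_cmd(image_dir: str, model_dir: str,
                          polygon: bool = False, use_gpu: bool = True) -> List[str]:
    """Hypothetical helper: argv for tools/infer/predict_e2e.py with PGNet.

    polygon=False matches the quadrilateral (ICDAR2015) example and
    polygon=True the curved-text (Total-Text) example.
    """
    cmd = [
        "python3", "tools/infer/predict_e2e.py",
        "--e2e_algorithm=PGNet",           # a shell would strip the quotes in "PGNet"
        f"--image_dir={image_dir}",        # a single image or a folder of images
        f"--e2e_model_dir={model_dir}",
        f"--e2e_pgnet_polygon={polygon}",  # formats as True / False
    ]
    if not use_gpu:
        cmd.append("--use_gpu=False")      # predict on CPU
    return cmd


if __name__ == "__main__":
    print(" ".join(build_predict_e2e_cmd(
        "./doc/imgs_en/img623.jpg", "./inference/e2e/", polygon=True)))
```

Because the list is handed to the process without a shell, `PGNet` needs no quoting; `subprocess.run(cmd, check=True)` would run it from the repository root.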


The visualized text detection results are saved to the `./inference_results` folder by default, and the result file names are prefixed with 'e2e_res'. An example result is shown below:

#### (2). Curved Text Detection Model (Total-Text)
The curved-text case shares the same inference model as the quadrilateral text detection model.

**For PGNet end-to-end model inference, set `--e2e_algorithm="PGNet"` and also add `--e2e_pgnet_polygon=True`**, then run the following command:

```
python3.7 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/imgs_en/img623.jpg" --e2e_model_dir="./inference/e2e/" --e2e_pgnet_polygon=True
```

The visualized end-to-end results are saved to the `./inference_results` folder by default, and the result file names are prefixed with 'e2e_res'. An example result is shown below:

<a name="方向分类模型推理"></a>
## V. Angle Classification Model Inference
## IV. Angle Classification Model Inference

The following describes angle classification model inference.


@ -418,7 +358,7 @@ Predicts of ./doc/imgs_words/ch/word_4.jpg:['0', 0.9999982]

```

<a name="文本检测、方向分类和文字识别串联推理"></a>
## VI. Cascaded Inference of Text Detection, Angle Classification, and Text Recognition
## V. Cascaded Inference of Text Detection, Angle Classification, and Text Recognition
<a name="超轻量中文OCR模型推理"></a>
### 1. Ultra-lightweight Chinese OCR Model Inference


@ -104,27 +104,16 @@ python3 generate_multi_language_configs.py -l it \

| german_mobile_v2.0_rec |German recognition|[rec_german_lite_train.yml](../../configs/rec/multi_language/rec_german_lite_train.yml)|2.65M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/german_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/german_mobile_v2.0_rec_train.tar) |
| korean_mobile_v2.0_rec |Korean recognition|[rec_korean_lite_train.yml](../../configs/rec/multi_language/rec_korean_lite_train.yml)|3.9M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/korean_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/korean_mobile_v2.0_rec_train.tar) |
| japan_mobile_v2.0_rec |Japanese recognition|[rec_japan_lite_train.yml](../../configs/rec/multi_language/rec_japan_lite_train.yml)|4.23M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/japan_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/japan_mobile_v2.0_rec_train.tar) |
| it_mobile_v2.0_rec |Italian recognition|rec_it_lite_train.yml|2.53M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/it_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/it_mobile_v2.0_rec_train.tar) |
| xi_mobile_v2.0_rec |Spanish recognition|rec_xi_lite_train.yml|2.53M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/xi_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/xi_mobile_v2.0_rec_train.tar) |
| pu_mobile_v2.0_rec |Portuguese recognition|rec_pu_lite_train.yml|2.63M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/pu_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/pu_mobile_v2.0_rec_train.tar) |
| ru_mobile_v2.0_rec |Russian recognition|rec_ru_lite_train.yml|2.63M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/ru_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/ru_mobile_v2.0_rec_train.tar) |
| ar_mobile_v2.0_rec |Arabic recognition|rec_ar_lite_train.yml|2.53M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/ar_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/ar_mobile_v2.0_rec_train.tar) |
| hi_mobile_v2.0_rec |Hindi recognition|rec_hi_lite_train.yml|2.63M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/hi_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/hi_mobile_v2.0_rec_train.tar) |
| chinese_cht_mobile_v2.0_rec |Traditional Chinese recognition|rec_chinese_cht_lite_train.yml|5.63M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/chinese_cht_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/chinese_cht_mobile_v2.0_rec_train.tar) |
| ug_mobile_v2.0_rec |Uyghur recognition|rec_ug_lite_train.yml|2.63M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/ug_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/ug_mobile_v2.0_rec_train.tar) |
| fa_mobile_v2.0_rec |Persian recognition|rec_fa_lite_train.yml|2.63M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/fa_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/fa_mobile_v2.0_rec_train.tar) |
| ur_mobile_v2.0_rec |Urdu recognition|rec_ur_lite_train.yml|2.63M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/ur_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/ur_mobile_v2.0_rec_train.tar) |
| rs_mobile_v2.0_rec |Serbian (latin) recognition|rec_rs_lite_train.yml|2.53M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/rs_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/rs_mobile_v2.0_rec_train.tar) |
| oc_mobile_v2.0_rec |Occitan recognition|rec_oc_lite_train.yml|2.53M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/oc_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/oc_mobile_v2.0_rec_train.tar) |
| mr_mobile_v2.0_rec |Marathi recognition|rec_mr_lite_train.yml|2.63M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/mr_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/mr_mobile_v2.0_rec_train.tar) |
| ne_mobile_v2.0_rec |Nepali recognition|rec_ne_lite_train.yml|2.63M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/ne_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/ne_mobile_v2.0_rec_train.tar) |
| rsc_mobile_v2.0_rec |Serbian (cyrillic) recognition|rec_rsc_lite_train.yml|2.63M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/rsc_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/rsc_mobile_v2.0_rec_train.tar) |
| bg_mobile_v2.0_rec |Bulgarian recognition|rec_bg_lite_train.yml|2.63M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/bg_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/bg_mobile_v2.0_rec_train.tar) |
| uk_mobile_v2.0_rec |Ukrainian recognition|rec_uk_lite_train.yml|2.63M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/uk_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/uk_mobile_v2.0_rec_train.tar) |
| be_mobile_v2.0_rec |Belarusian recognition|rec_be_lite_train.yml|2.63M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/be_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/be_mobile_v2.0_rec_train.tar) |
| te_mobile_v2.0_rec |Telugu recognition|rec_te_lite_train.yml|2.63M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/te_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/te_mobile_v2.0_rec_train.tar) |
| ka_mobile_v2.0_rec |Kannada recognition|rec_ka_lite_train.yml|2.63M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/ka_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/ka_mobile_v2.0_rec_train.tar) |
| ta_mobile_v2.0_rec |Tamil recognition|rec_ta_lite_train.yml|2.63M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/ta_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/ta_mobile_v2.0_rec_train.tar) |
| latin_mobile_v2.0_rec | Latin recognition | [rec_latin_lite_train.yml](../../configs/rec/multi_language/rec_latin_lite_train.yml) |2.6M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/latin_ppocr_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/latin_ppocr_mobile_v2.0_rec_train.tar) |
| arabic_mobile_v2.0_rec | Arabic script | [rec_arabic_lite_train.yml](../../configs/rec/multi_language/rec_arabic_lite_train.yml) |2.6M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/arabic_ppocr_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/arabic_ppocr_mobile_v2.0_rec_train.tar) |
| cyrillic_mobile_v2.0_rec | Cyrillic script | [rec_cyrillic_lite_train.yml](../../configs/rec/multi_language/rec_cyrillic_lite_train.yml) |2.6M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/cyrillic_ppocr_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/cyrillic_ppocr_mobile_v2.0_rec_train.tar) |
| devanagari_mobile_v2.0_rec | Devanagari script | [rec_devanagari_lite_train.yml](../../configs/rec/multi_language/rec_devanagari_lite_train.yml) |2.6M|[inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/devanagari_ppocr_mobile_v2.0_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/devanagari_ppocr_mobile_v2.0_rec_train.tar) |

For more supported languages, see [Multilingual Models](./multi_languages.md).
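
The download links in the table follow a regular pattern, so a helper can rebuild them from the model name in the first column. The sketch below is inferred only from the rows shown here and is not an official PaddleOCR API: archives live under `https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/` with an `_infer.tar` or `_train.tar` suffix, and the latin, arabic, cyrillic and devanagari archives carry an extra `ppocr_` in their file names.

```python
BASE_URL = "https://paddleocr.bj.bcebos.com/dygraph_v2.0/multilingual/"

# Rows whose archive names insert "ppocr_" after the language prefix (per the table).
_PPOCR_PREFIXED = {"latin", "arabic", "cyrillic", "devanagari"}


def multilingual_model_url(model_name: str, kind: str = "infer") -> str:
    """Build the archive URL for a model name from the table's first column.

    model_name: e.g. "german_mobile_v2.0_rec"; kind: "infer" or "train".
    """
    if kind not in ("infer", "train"):
        raise ValueError("kind must be 'infer' or 'train'")
    lang = model_name.split("_", 1)[0]
    if lang in _PPOCR_PREFIXED:
        model_name = model_name.replace(f"{lang}_", f"{lang}_ppocr_", 1)
    return f"{BASE_URL}{model_name}_{kind}.tar"


if __name__ == "__main__":
    print(multilingual_model_url("korean_mobile_v2.0_rec"))
    print(multilingual_model_url("cyrillic_mobile_v2.0_rec", kind="train"))
```

Models not listed in the table may use a different naming scheme, so treat the generated URL as a guess to verify rather than a guaranteed link.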


<a name="文本方向分类模型"></a>


@ -5,6 +5,25 @@

|

|||

- 2021.4.9 支持**80种**语言的检测和识别

|

||||

- 2021.4.9 支持**轻量高精度**英文模型检测识别

|

||||

|

||||

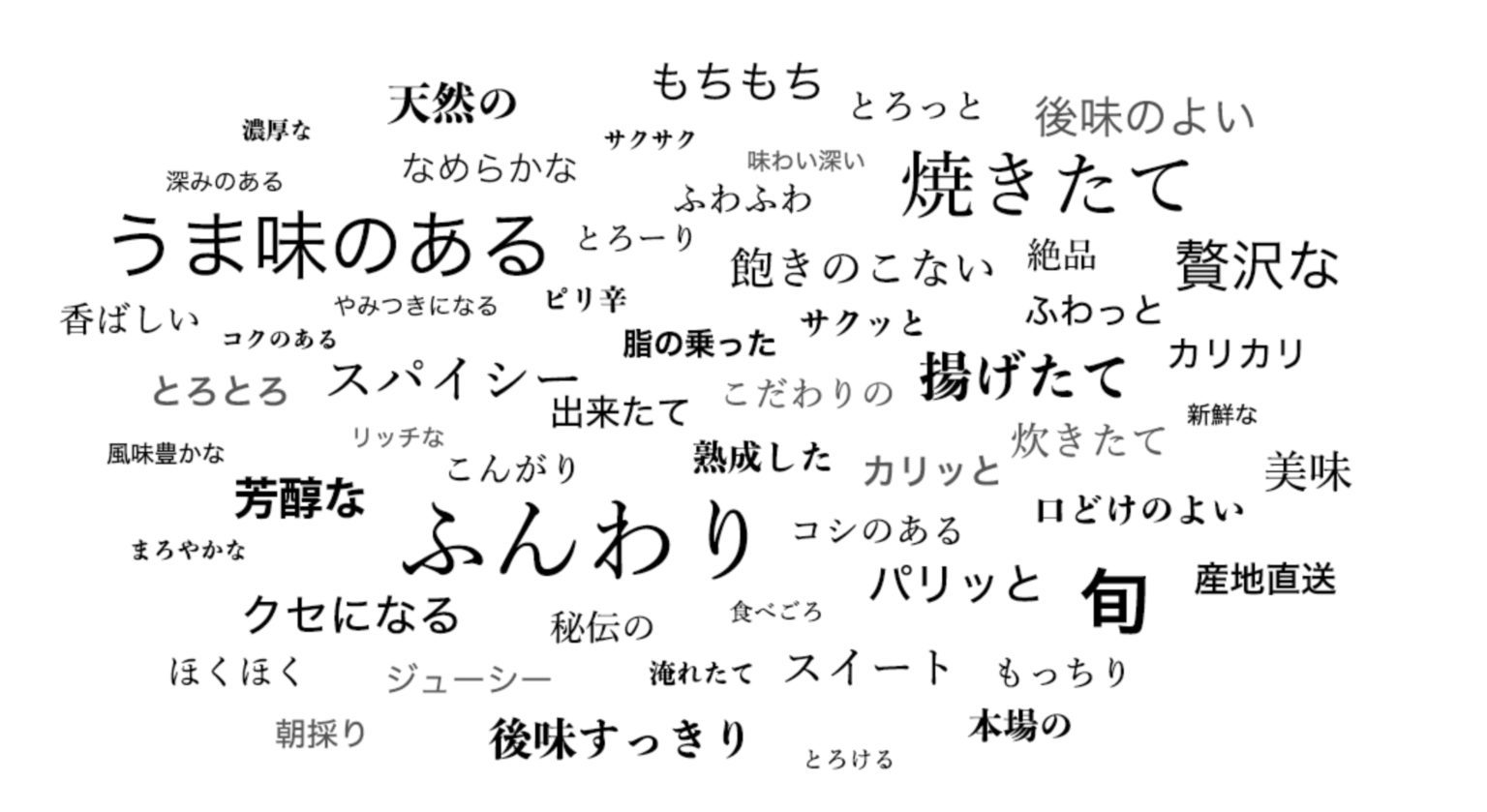

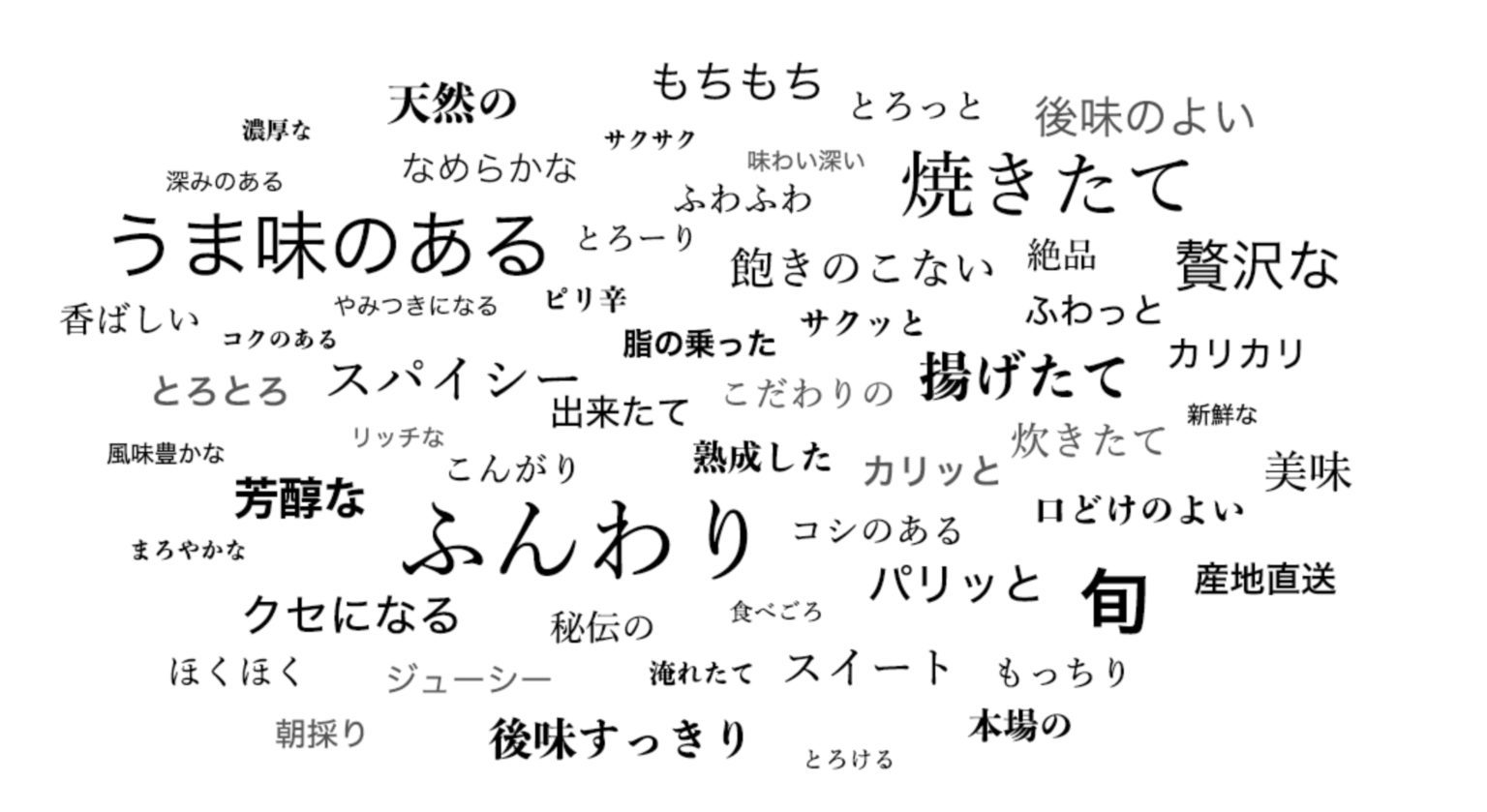

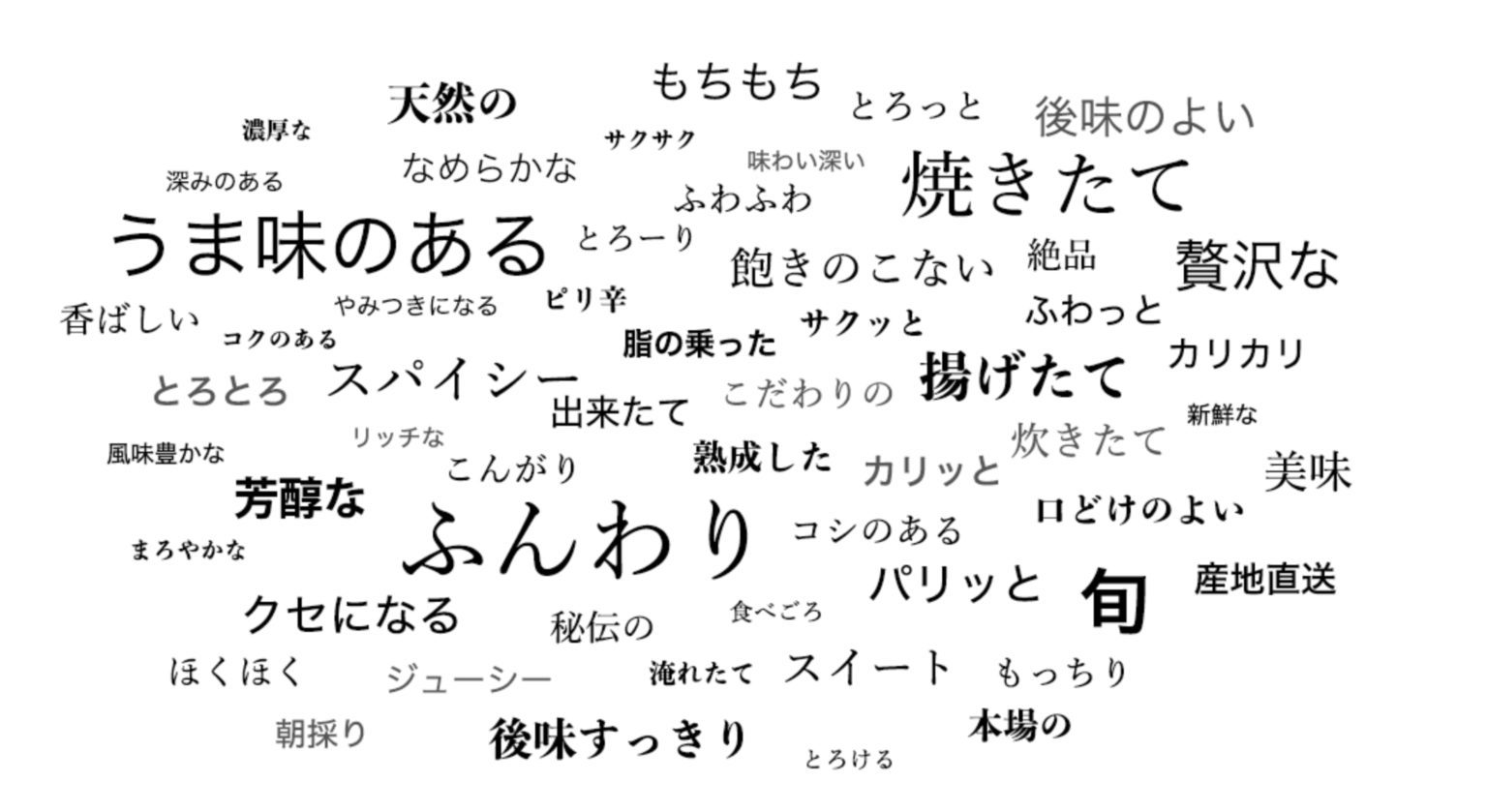

PaddleOCR 旨在打造一套丰富、领先、且实用的OCR工具库,不仅提供了通用场景下的中英文模型,也提供了专门在英文场景下训练的模型,

|

||||

和覆盖[80个语言](#语种缩写)的小语种模型。

|

||||

|

||||

其中英文模型支持,大小写字母和常见标点的检测识别,并优化了空格字符的识别:

|

||||

|

||||

<div align="center">

|

||||

<img src="../imgs_results/multi_lang/en_1.jpg" width="400" height="600">

|

||||

</div>

|

||||

|

||||

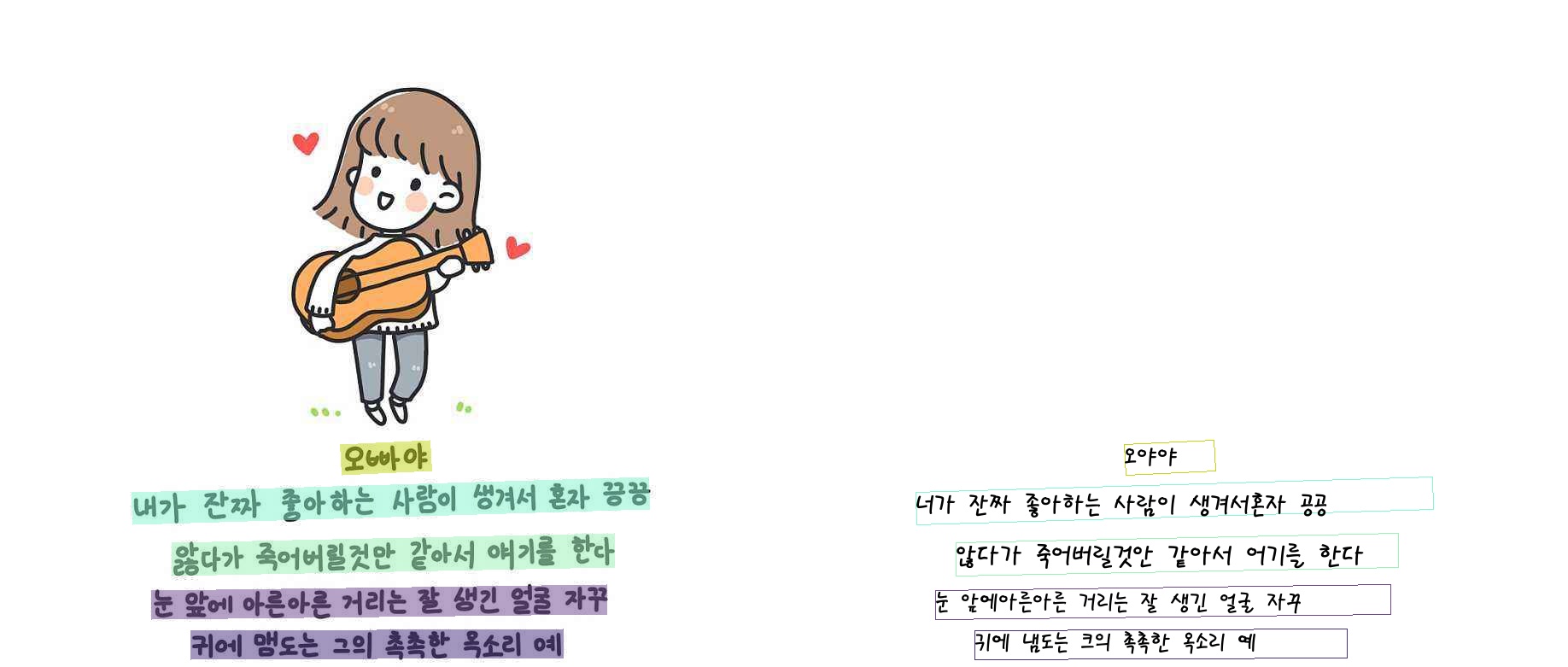

小语种模型覆盖了拉丁语系、阿拉伯语系、中文繁体、韩语、日语等等:

|

||||

|

||||

<div align="center">

|

||||

<img src="../imgs_results/multi_lang/japan_2.jpg" width="600" height="300">

|

||||

<img src="../imgs_results/multi_lang/french_0.jpg" width="300" height="300">

|

||||

</div>

|

||||

|

||||

|

||||

本文档将简要介绍小语种模型的使用方法。

|

||||

|

||||

- [1 安装](#安装)

|

||||

- [1.1 paddle 安装](#paddle安装)

|

||||

- [1.2 paddleocr package 安装](#paddleocr_package_安装)

|

||||

|

|

@ -40,7 +59,7 @@ pip instll paddlepaddle-gpu


Install with pip
```
pip install "paddleocr>=2.0.4" # version 2.0.4 recommended
pip install "paddleocr>=2.0.6" # version 2.0.6 recommended
```
Build and install locally
```


@ -68,7 +87,11 @@ Paddleocr目前支持80个语种,可以通过修改--lang参数进行切换,


paddleocr --image_dir doc/imgs/japan_2.jpg --lang=japan
```

<div align="center">
<img src="https://raw.githubusercontent.com/PaddlePaddle/PaddleOCR/release/2.1/doc/imgs/japan_2.jpg" width="800">
</div>

The result is a list; each item contains the text box, the text, and the recognition confidence.
```text


@ -111,7 +134,7 @@ paddleocr --image_dir PaddleOCR/doc/imgs/11.jpg --rec false

<a name="python_脚本运行"></a>
### 2.2 Run with a Python script

ppocr can also be run from a Python script, which makes it easy to embed in your own code:
ppocr can also be run from a Python script, which makes it easy to embed in your own code:

* Whole-image prediction (detection + recognition)


@ -132,14 +155,16 @@ image = Image.open(img_path).convert('RGB')

boxes = [line[0] for line in result]
txts = [line[1][0] for line in result]
scores = [line[1][1] for line in result]
im_show = draw_ocr(image, boxes, txts, scores, font_path='/path/to/PaddleOCR/doc/korean.ttf')
im_show = draw_ocr(image, boxes, txts, scores, font_path='/path/to/PaddleOCR/doc/fonts/korean.ttf')
im_show = Image.fromarray(im_show)
im_show.save('result.jpg')
```
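
Each item in `result` has the shape `[box, (text, score)]`, which is why the script indexes `line[0]`, `line[1][0]` and `line[1][1]`. A small sketch that keeps only lines above a confidence threshold before drawing is shown below; the helper name `filter_by_score` and the 0.5 default are illustrative assumptions, and the sample data is made up only to show the nesting.

```python
from typing import List, Sequence, Tuple


def filter_by_score(result: Sequence, min_score: float = 0.5
                    ) -> Tuple[List, List[str], List[float]]:
    """Split OCR lines into boxes, texts and scores, dropping low-confidence lines."""
    kept = [line for line in result if line[1][1] >= min_score]
    boxes = [line[0] for line in kept]
    txts = [line[1][0] for line in kept]
    scores = [line[1][1] for line in kept]
    return boxes, txts, scores


if __name__ == "__main__":
    # Made-up sample with the same nesting as the result list above.
    sample = [
        [[[10, 10], [90, 10], [90, 30], [10, 30]], ("hello", 0.98)],
        [[[10, 40], [90, 40], [90, 60], [10, 60]], ("noise", 0.21)],
    ]
    boxes, txts, scores = filter_by_score(sample, min_score=0.5)
    print(txts, scores)  # ['hello'] [0.98]
```

The three returned lists can be passed to `draw_ocr` exactly as in the script above.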


Visualized result:

<div align="center">
<img src="https://raw.githubusercontent.com/PaddlePaddle/PaddleOCR/release/2.1/doc/imgs_results/korean.jpg" width="800">
</div>

* Recognition-only prediction


@ -152,7 +177,8 @@ for line in result:

    print(line)
```

The result is a tuple containing only the recognized text and its recognition confidence.


@ -187,7 +213,10 @@ im_show.save('result.jpg')

```

Visualized result:

<div align="center">
<img src="https://raw.githubusercontent.com/PaddlePaddle/PaddleOCR/release/2.1/doc/imgs_results/whl/12_det.jpg" width="800">
</div>

ppocr also supports angle classification. For more usage details, see the [whl package usage guide](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.0/doc/doc_ch/whl.md).


@ -211,7 +240,7 @@ ppocr 支持使用自己的数据进行自定义训练或finetune, 其中识别

|German|german|german|
|Japanese|japan|japan|
|Korean|korean|korean|
|Traditional Chinese|chinese traditional |ch_tra|
|Traditional Chinese|chinese traditional |chinese_cht|
|Italian| Italian |it|
|Spanish|Spanish |es|
|Portuguese| Portuguese|pt|


@ -230,10 +259,9 @@ ppocr 支持使用自己的数据进行自定义训练或finetune, 其中识别

|Ukrainian|Ukrainian|uk|
|Belarusian|Belarusian|be|
|Telugu|Telugu |te|
|Kannada|Kannada |kn|
|Tamil|Tamil |ta|
|Afrikaans |Afrikaans |af|
|Azerbaijani |Azerbaijani |az|
|Azerbaijani |Azerbaijani |az|
|Bosnian|Bosnian|bs|
|Czech|Czech|cs|
|Welsh |Welsh |cy|


@ -2,7 +2,7 @@

- [I. Introduction](#简介)
- [II. Environment Setup](#环境配置)
- [III. Quick Start](#快速使用)
- [IV. Model Training, Evaluation, and Inference](#快速训练)
- [IV. Model Training, Evaluation, and Inference](#模型训练、评估、推理)

<a name="简介"></a>
## I. Introduction


@ -16,14 +16,31 @@ OCR算法可以分为两阶段算法和端对端的算法。二阶段OCR算法

- Proposes a graph-based refinement module (GRM) to further improve recognition performance
- Higher accuracy and faster prediction

For details of the PGNet algorithm, see the [paper](https://www.aaai.org/AAAI21Papers/AAAI-2885.WangP.pdf). The algorithm diagram is shown below:
For details of the PGNet algorithm, see the [paper](https://www.aaai.org/AAAI21Papers/AAAI-2885.WangP.pdf). The algorithm diagram is shown below:

After feature extraction, the input image is fed into four branches: the text border offset (TBO) module, the text center line (TCL) module, the text direction offset (TDO) module, and the text character classification map (TCC) module.
The outputs of TBO and TCL are post-processed to obtain the text detection results, while TCL, TDO, and TCC are responsible for text recognition.

The detection and recognition results are shown below:

### Performance

Test set: Total Text

Test environment: NVIDIA Tesla V100-SXM2-16GB

|PGNetA|det_precision|det_recall|det_f_score|e2e_precision|e2e_recall|e2e_f_score|FPS|Download|
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
|Paper|85.30|86.80|86.1|-|-|61.7|38.20 (size=640)|-|
|Ours|87.03|82.48|84.69|61.71|58.43|60.03|48.73 (size=768)|[download link](https://paddleocr.bj.bcebos.com/dygraph_v2.0/pgnet/en_server_pgnetA.tar)|

*Note: The PGNet implementation in PaddleOCR is optimized for prediction speed; it significantly speeds up end-to-end prediction while keeping the accuracy drop within an acceptable range.*
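
The `det_f_score` and `e2e_f_score` columns are the harmonic mean of the corresponding precision and recall, F = 2PR / (P + R). A small Python check against the "Ours" row, with values copied from the table:

```python
def f_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(round(f_score(87.03, 82.48), 2))  # det: 84.69
    print(round(f_score(61.71, 58.43), 2))  # e2e: 60.03
```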


<a name="环境配置"></a>
## II. Environment Setup

Please first refer to [Quick Installation](./installation.md) to set up the PaddleOCR runtime environment.


@ -49,24 +66,24 @@ wget https://paddleocr.bj.bcebos.com/dygraph_v2.0/pgnet/e2e_server_pgnetA_infer.

### Predict a single image or a set of images
```bash
# Predict the single image specified by image_dir
python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/imgs_en/img623.jpg" --e2e_model_dir="./inference/e2e/" --e2e_pgnet_polygon=True
python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/imgs_en/img623.jpg" --e2e_model_dir="./inference/e2e_server_pgnetA_infer/" --e2e_pgnet_polygon=True

# Predict the set of images in the directory specified by image_dir
python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/imgs_en/" --e2e_model_dir="./inference/e2e/" --e2e_pgnet_polygon=True
python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/imgs_en/" --e2e_model_dir="./inference/e2e_server_pgnetA_infer/" --e2e_pgnet_polygon=True

# To predict on CPU, set use_gpu to False
python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/imgs_en/img623.jpg" --e2e_model_dir="./inference/e2e/" --e2e_pgnet_polygon=True --use_gpu=False
python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/imgs_en/img623.jpg" --e2e_model_dir="./inference/e2e_server_pgnetA_infer/" --e2e_pgnet_polygon=True --use_gpu=False
```
### Visualized results
The visualized text detection results are saved to the `./inference_results` folder by default, with file names prefixed with 'e2e_res'. An example result is shown below:

<a name="快速训练"></a>
<a name="模型训练、评估、推理"></a>
## IV. Model Training, Evaluation, and Inference
This section uses the Total-Text dataset as an example to introduce training, evaluation, and testing of the end-to-end model in PaddleOCR.

### Prepare the data
Download and extract the [totaltext](https://github.com/cs-chan/Total-Text-Dataset/blob/master/Dataset/README.md) dataset into the PaddleOCR/train_data/ directory. The dataset is organized as follows:
Download and extract the [totaltext](https://github.com/cs-chan/Total-Text-Dataset/blob/master/Dataset/README.md) dataset into the PaddleOCR/train_data/ directory. The dataset is organized as follows:
```
/PaddleOCR/train_data/total_text/train/
  |- rgb/            # training images of the total_text dataset


@ -135,20 +152,20 @@ python3 tools/eval.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.checkpoints="{

### Model prediction
Test the end-to-end recognition result on a single image:
```shell
python3 tools/infer_e2e.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.infer_img="./doc/imgs_en/img_10.jpg" Global.pretrained_model="./output/det_db/best_accuracy" Global.load_static_weights=false
python3 tools/infer_e2e.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.infer_img="./doc/imgs_en/img_10.jpg" Global.pretrained_model="./output/e2e_pgnet/best_accuracy" Global.load_static_weights=false
```

Test the end-to-end recognition results on all images in a folder:
```shell
python3 tools/infer_e2e.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.infer_img="./doc/imgs_en/" Global.pretrained_model="./output/det_db/best_accuracy" Global.load_static_weights=false
python3 tools/infer_e2e.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.infer_img="./doc/imgs_en/" Global.pretrained_model="./output/e2e_pgnet/best_accuracy" Global.load_static_weights=false
```

### Inference
#### (1).Quadrilateral Text Detection Model (ICDAR2015)
#### (1). Quadrilateral Text Detection Model (ICDAR2015)
First, convert the model saved during PGNet end-to-end training into an inference model. Taking a model with a Resnet50_vd backbone trained on an English dataset as an example ([model download link](https://paddleocr.bj.bcebos.com/dygraph_v2.0/pgnet/en_server_pgnetA.tar)), convert it with the following commands:
```
wget https://paddleocr.bj.bcebos.com/dygraph_v2.0/pgnet/en_server_pgnetA.tar && tar xf en_server_pgnetA.tar
python3 tools/export_model.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.pretrained_model=./en_server_pgnetA/iter_epoch_450 Global.load_static_weights=False Global.save_inference_dir=./inference/e2e
python3 tools/export_model.py -c configs/e2e/e2e_r50_vd_pg.yml -o Global.pretrained_model=./en_server_pgnetA/best_accuracy Global.load_static_weights=False Global.save_inference_dir=./inference/e2e
```
**For PGNet end-to-end model inference, set the parameter `--e2e_algorithm="PGNet"`** and run the following command:
```


@ -158,7 +175,7 @@ python3 tools/infer/predict_e2e.py --e2e_algorithm="PGNet" --image_dir="./doc/im


#### (2).Curved Text Detection Model (Total-Text)
#### (2). Curved Text Detection Model (Total-Text)
For curved text samples:

**For PGNet end-to-end model inference, set `--e2e_algorithm="PGNet"` and also add `--e2e_pgnet_polygon=True`**, then run the following command:


@ -138,7 +138,7 @@ PaddleOCR内置了一部分字典,可以按需使用。

`ppocr/utils/dict/german_dict.txt` is a German dictionary containing 131 characters

`ppocr/utils/dict/en_dict.txt` is an English dictionary containing 63 characters
`ppocr/utils/en_dict.txt` is an English dictionary containing 96 characters
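
The character counts above can be checked with a few lines of Python, assuming (as the counts suggest) that each dictionary file lists one character per line. `count_dict_chars` is a hypothetical helper; it strips only line terminators, so an entry that is a single space still counts, while completely empty lines are skipped.

```python
from pathlib import Path


def count_dict_chars(dict_path: str) -> int:
    """Count entries in a recognition dictionary, assuming one character per line."""
    text = Path(dict_path).read_text(encoding="utf-8")
    # splitlines() removes only the line terminators; " " is kept as an entry.
    return sum(1 for line in text.splitlines() if line != "")


if __name__ == "__main__":
    print(count_dict_chars("ppocr/utils/dict/german_dict.txt"))
```

Run from the repository root, the printed value should match the 131 stated above if the one-character-per-line assumption holds.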


@ -285,7 +285,7 @@ Eval:

<a name="小语种"></a>
#### 2.3 Multilingual

PaddleOCR currently supports recognition for 26 languages besides Chinese. A multilingual configuration file template is provided under `configs/rec/multi_language`: [rec_multi_language_lite_train.yml](../../configs/rec/multi_language/rec_multi_language_lite_train.yml).
PaddleOCR currently supports recognition for 80 languages besides Chinese. A multilingual configuration file template is provided under `configs/rec/multi_language`: [rec_multi_language_lite_train.yml](../../configs/rec/multi_language/rec_multi_language_lite_train.yml).

There are two ways to create the required configuration file:


@ -368,26 +368,12 @@ PaddleOCR目前已支持26种(除中文外)语种识别,`configs/rec/multi

| rec_ger_lite_train.yml | CRNN | Mobilenet_v3 small 0.5 | None | BiLSTM | ctc | German | german |
| rec_japan_lite_train.yml | CRNN | Mobilenet_v3 small 0.5 | None | BiLSTM | ctc | Japanese | japan |
| rec_korean_lite_train.yml | CRNN | Mobilenet_v3 small 0.5 | None | BiLSTM | ctc | Korean | korean |
| rec_it_lite_train.yml | CRNN | Mobilenet_v3 small 0.5 | None | BiLSTM | ctc | Italian | it |
| rec_xi_lite_train.yml | CRNN | Mobilenet_v3 small 0.5 | None | BiLSTM | ctc | Spanish | xi |
| rec_pu_lite_train.yml | CRNN | Mobilenet_v3 small 0.5 | None | BiLSTM | ctc | Portuguese | pu |
| rec_ru_lite_train.yml | CRNN | Mobilenet_v3 small 0.5 | None | BiLSTM | ctc | Russian | ru |
| rec_ar_lite_train.yml | CRNN | Mobilenet_v3 small 0.5 | None | BiLSTM | ctc | Arabic | ar |
| rec_hi_lite_train.yml | CRNN | Mobilenet_v3 small 0.5 | None | BiLSTM | ctc | Hindi | hi |