commit eca0ef34ec

@@ -3,10 +3,8 @@ English | [简体中文](README_cn.md)
 ## Introduction
 PaddleOCR aims to create rich, leading, and practical OCR tools that help users train better models and apply them into practice.
 
-**Live stream on coming day**: July 21, 2020 at 8 pm BiliBili station live stream
-
 **Recent updates**
+- 2020.7.23, Release the playback and PPT of live class on BiliBili station, PaddleOCR Introduction, [address](https://aistudio.baidu.com/aistudio/course/introduce/1519)
 - 2020.7.15, Add mobile App demo , support both iOS and Android ( based on easyedge and Paddle Lite)
 - 2020.7.15, Improve the deployment ability, add the C + + inference , serving deployment. In addtion, the benchmarks of the ultra-lightweight OCR model are provided.
 - 2020.7.15, Add several related datasets, data annotation and synthesis tools.

@@ -3,9 +3,8 @@
 ## Introduction
 PaddleOCR aims to build a rich, leading, and practical OCR toolkit that helps users train better models and put them into production.
 
-**Live stream preview: 8 pm on July 21, 2020, live on Bilibili, a comprehensive walkthrough of the PaddleOCR open-source package; the stream link will be posted on the day**
-
 **Recent updates**
+- 2020.7.23 Released the replay and slides of the July 21 Bilibili live class, a comprehensive walkthrough of the PaddleOCR open-source package, [link](https://aistudio.baidu.com/aistudio/course/introduce/1519)
 - 2020.7.15 Added a mobile demo based on EasyEdge and Paddle-Lite, supporting iOS and Android
 - 2020.7.15 Improved inference deployment: added C++ inference-engine prediction, serving deployment, and on-device deployment, plus an inference-latency benchmark for the ultra-lightweight Chinese OCR model
 - 2020.7.15 Collected OCR-related datasets and commonly used data annotation and synthesis tools

@@ -25,6 +25,15 @@
             android:name="com.baidu.paddle.lite.demo.ocr.SettingsActivity"
             android:label="Settings">
         </activity>
+        <provider
+            android:name="android.support.v4.content.FileProvider"
+            android:authorities="com.baidu.paddle.lite.demo.ocr.fileprovider"
+            android:exported="false"
+            android:grantUriPermissions="true">
+            <meta-data
+                android:name="android.support.FILE_PROVIDER_PATHS"
+                android:resource="@xml/file_paths"></meta-data>
+        </provider>
     </application>
 
 </manifest>

@@ -3,14 +3,17 @@ package com.baidu.paddle.lite.demo.ocr;
 import android.Manifest;
 import android.app.ProgressDialog;
 import android.content.ContentResolver;
+import android.content.Context;
 import android.content.Intent;
 import android.content.SharedPreferences;
 import android.content.pm.PackageManager;
 import android.database.Cursor;
 import android.graphics.Bitmap;
 import android.graphics.BitmapFactory;
+import android.media.ExifInterface;
 import android.net.Uri;
 import android.os.Bundle;
+import android.os.Environment;
 import android.os.Handler;
 import android.os.HandlerThread;
 import android.os.Message;

@@ -19,6 +22,7 @@ import android.provider.MediaStore;
 import android.support.annotation.NonNull;
 import android.support.v4.app.ActivityCompat;
 import android.support.v4.content.ContextCompat;
+import android.support.v4.content.FileProvider;
 import android.support.v7.app.AppCompatActivity;
 import android.text.method.ScrollingMovementMethod;
 import android.util.Log;

@@ -32,6 +36,8 @@ import android.widget.Toast;
 import java.io.File;
 import java.io.IOException;
 import java.io.InputStream;
+import java.text.SimpleDateFormat;
+import java.util.Date;
 
 public class MainActivity extends AppCompatActivity {
     private static final String TAG = MainActivity.class.getSimpleName();

@@ -69,6 +75,7 @@ public class MainActivity extends AppCompatActivity {
     protected float[] inputMean = new float[]{};
     protected float[] inputStd = new float[]{};
     protected float scoreThreshold = 0.1f;
+    private String currentPhotoPath;
 
     protected Predictor predictor = new Predictor();
 

@@ -368,18 +375,56 @@ public class MainActivity extends AppCompatActivity {
     }
 
     private void takePhoto() {
-        Intent takePhotoIntent = new Intent(MediaStore.ACTION_IMAGE_CAPTURE);
-        if (takePhotoIntent.resolveActivity(getPackageManager()) != null) {
-            startActivityForResult(takePhotoIntent, TAKE_PHOTO_REQUEST_CODE);
+        Intent takePictureIntent = new Intent(MediaStore.ACTION_IMAGE_CAPTURE);
+        // Ensure that there's a camera activity to handle the intent
+        if (takePictureIntent.resolveActivity(getPackageManager()) != null) {
+            // Create the File where the photo should go
+            File photoFile = null;
+            try {
+                photoFile = createImageFile();
+            } catch (IOException ex) {
+                Log.e("MainActitity", ex.getMessage(), ex);
+                Toast.makeText(MainActivity.this,
+                        "Create Camera temp file failed: " + ex.getMessage(), Toast.LENGTH_SHORT).show();
+            }
+            // Continue only if the File was successfully created
+            if (photoFile != null) {
+                Log.i(TAG, "FILEPATH " + getExternalFilesDir("Pictures").getAbsolutePath());
+                Uri photoURI = FileProvider.getUriForFile(this,
+                        "com.baidu.paddle.lite.demo.ocr.fileprovider",
+                        photoFile);
+                currentPhotoPath = photoFile.getAbsolutePath();
+                takePictureIntent.putExtra(MediaStore.EXTRA_OUTPUT, photoURI);
+                startActivityForResult(takePictureIntent, TAKE_PHOTO_REQUEST_CODE);
+                Log.i(TAG, "startActivityForResult finished");
+            }
         }
+
+    }
+
+    private File createImageFile() throws IOException {
+        // Create an image file name
+        String timeStamp = new SimpleDateFormat("yyyyMMdd_HHmmss").format(new Date());
+        String imageFileName = "JPEG_" + timeStamp + "_";
+        File storageDir = getExternalFilesDir(Environment.DIRECTORY_PICTURES);
+        File image = File.createTempFile(
+                imageFileName,  /* prefix */
+                ".bmp",         /* suffix */
+                storageDir      /* directory */
+        );
+
+        return image;
     }
 
     @Override
     protected void onActivityResult(int requestCode, int resultCode, Intent data) {
         super.onActivityResult(requestCode, resultCode, data);
-        if (resultCode == RESULT_OK && data != null) {
+        if (resultCode == RESULT_OK) {
             switch (requestCode) {
                 case OPEN_GALLERY_REQUEST_CODE:
+                    if (data == null) {
+                        break;
+                    }
                     try {
                         ContentResolver resolver = getContentResolver();
                         Uri uri = data.getData();
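The `createImageFile()` helper in this hunk names each capture after the current time and lets `File.createTempFile` make the name unique. A plain-Java sketch of that naming scheme that runs off-device; the class name `ImageFileNaming`, the use of the JVM temp directory in place of `getExternalFilesDir(Environment.DIRECTORY_PICTURES)`, and the explicit `Locale.US` are assumptions for illustration, not part of the commit.

```java
import java.io.File;
import java.io.IOException;
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;

// Hypothetical stand-alone version of the naming logic in MainActivity.createImageFile().
class ImageFileNaming {

    // Builds the "JPEG_<yyyyMMdd_HHmmss>_" prefix used by the demo.
    static String prefixFor(Date when) {
        // Locale.US is an addition (the diff uses the default locale) so the digits stay ASCII.
        String timeStamp = new SimpleDateFormat("yyyyMMdd_HHmmss", Locale.US).format(when);
        return "JPEG_" + timeStamp + "_";
    }

    // createTempFile appends a generated number between prefix and suffix and never
    // reuses an existing file, so two captures within the same second stay distinct.
    static File createImageFile(File storageDir, Date when) throws IOException {
        return File.createTempFile(prefixFor(when), ".bmp", storageDir);
    }

    public static void main(String[] args) throws IOException {
        // The JVM temp directory stands in for the app's external Pictures directory.
        File dir = new File(System.getProperty("java.io.tmpdir"));
        File image = createImageFile(dir, new Date());
        image.deleteOnExit();
        System.out.println(image.getName());
    }
}
```

Keeping the temp-file call (rather than building the full name from the timestamp alone) is what protects against two photos taken in the same second overwriting each other.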

@@ -393,9 +438,22 @@ public class MainActivity extends AppCompatActivity {
                         }
                     }
                     break;
                 case TAKE_PHOTO_REQUEST_CODE:
-                    Bundle extras = data.getExtras();
-                    Bitmap image = (Bitmap) extras.get("data");
-                    onImageChanged(image);
+                    if (currentPhotoPath != null) {
+                        ExifInterface exif = null;
+                        try {
+                            exif = new ExifInterface(currentPhotoPath);
+                        } catch (IOException e) {
+                            e.printStackTrace();
+                        }
+                        int orientation = exif.getAttributeInt(ExifInterface.TAG_ORIENTATION,
+                                ExifInterface.ORIENTATION_UNDEFINED);
+                        Log.i(TAG, "rotation " + orientation);
+                        Bitmap image = BitmapFactory.decodeFile(currentPhotoPath);
+                        image = Utils.rotateBitmap(image, orientation);
+                        onImageChanged(image);
+                    } else {
+                        Log.e(TAG, "currentPhotoPath is null");
+                    }
                     break;
                 default:
                     break;

@@ -35,8 +35,8 @@ public class OCRPredictorNative {
 
     }
 
-    public void release(){
-        if (nativePointer != 0){
+    public void release() {
+        if (nativePointer != 0) {
             nativePointer = 0;
             destory(nativePointer);
         }
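In both versions of `release()`, `nativePointer` is reset to `0` before `destory(nativePointer)` runs, so the native side is handed `0` rather than the handle it allocated. The usual order is to copy the handle, clear the field, then free the copy. A minimal plain-Java sketch of that order; `NativeHandleOwner` and its `destroyNative` stand-in (which only records the value it receives) are hypothetical and not part of `OCRPredictorNative`.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical owner of a native handle, shaped like OCRPredictorNative.release().
class NativeHandleOwner {
    private long nativePointer;
    // Records every handle passed to destroyNative so the order can be checked off-device.
    final List<Long> destroyed = new ArrayList<>();

    NativeHandleOwner(long handle) {
        this.nativePointer = handle;
    }

    // Stand-in for the JNI destory(long) call; a real one would free native memory.
    private void destroyNative(long handle) {
        destroyed.add(handle);
    }

    public void release() {
        if (nativePointer != 0) {
            long handle = nativePointer; // keep the value the native side must free
            nativePointer = 0;           // mark as released so a second call is a no-op
            destroyNative(handle);
        }
    }

    public static void main(String[] args) {
        NativeHandleOwner owner = new NativeHandleOwner(0x1234L);
        owner.release();
        owner.release(); // second call does nothing
        System.out.println(owner.destroyed); // [4660]
    }
}
```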

@@ -127,12 +127,12 @@ public class Predictor {
     }
 
     public void releaseModel() {
-        if (paddlePredictor != null){
+        if (paddlePredictor != null) {
             paddlePredictor.release();
             paddlePredictor = null;
         }
         isLoaded = false;
-        cpuThreadNum = 4;
+        cpuThreadNum = 1;
         cpuPowerMode = "LITE_POWER_HIGH";
         modelPath = "";
         modelName = "";

@@ -287,9 +287,7 @@ public class Predictor {
         if (image == null) {
             return;
         }
-        // Scale image to the size of input tensor
-        Bitmap rgbaImage = image.copy(Bitmap.Config.ARGB_8888, true);
-        this.inputImage = rgbaImage;
+        this.inputImage = image.copy(Bitmap.Config.ARGB_8888, true);
     }
 
     private ArrayList<OcrResultModel> postprocess(ArrayList<OcrResultModel> results) {

@@ -310,7 +308,7 @@ public class Predictor {
 
     private void drawResults(ArrayList<OcrResultModel> results) {
         StringBuffer outputResultSb = new StringBuffer("");
-        for (int i=0;i<results.size();i++) {
+        for (int i = 0; i < results.size(); i++) {
             OcrResultModel result = results.get(i);
             StringBuilder sb = new StringBuilder("");
             sb.append(result.getLabel());

@@ -320,7 +318,7 @@ public class Predictor {
                 sb.append("(").append(p.x).append(",").append(p.y).append(") ");
             }
             Log.i(TAG, sb.toString());
-            outputResultSb.append(i+1).append(": ").append(result.getLabel()).append("\n");
+            outputResultSb.append(i + 1).append(": ").append(result.getLabel()).append("\n");
         }
         outputResult = outputResultSb.toString();
         outputImage = inputImage;
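`drawResults()` builds the user-facing text as one numbered line per recognized label (`1: <label>`, `2: <label>`, and so on). A plain-Java sketch of that loop, with `List<String>` standing in for `ArrayList<OcrResultModel>` and its `getLabel()` calls; the class name `ResultText` is hypothetical. It uses `StringBuilder` because the buffer never leaves one method, whereas the diff keeps `StringBuffer`, which behaves the same here but synchronizes every call.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-alone version of the numbering loop in Predictor.drawResults().
class ResultText {
    static String format(List<String> labels) {
        StringBuilder out = new StringBuilder();
        for (int i = 0; i < labels.size(); i++) {
            // Same layout as the diff: 1-based index, ": ", label, newline.
            out.append(i + 1).append(": ").append(labels.get(i)).append("\n");
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.print(format(Arrays.asList("PaddleOCR", "2020.7.23")));
        // 1: PaddleOCR
        // 2: 2020.7.23
    }
}
```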

@@ -2,6 +2,8 @@ package com.baidu.paddle.lite.demo.ocr;
 
 import android.content.Context;
 import android.graphics.Bitmap;
+import android.graphics.Matrix;
+import android.media.ExifInterface;
 import android.os.Environment;
 
 import java.io.*;

@@ -110,4 +112,48 @@ public class Utils {
         }
         return Bitmap.createScaledBitmap(bitmap, newWidth, newHeight, true);
     }
+
+    public static Bitmap rotateBitmap(Bitmap bitmap, int orientation) {
+
+        Matrix matrix = new Matrix();
+        switch (orientation) {
+            case ExifInterface.ORIENTATION_NORMAL:
+                return bitmap;
+            case ExifInterface.ORIENTATION_FLIP_HORIZONTAL:
+                matrix.setScale(-1, 1);
+                break;
+            case ExifInterface.ORIENTATION_ROTATE_180:
+                matrix.setRotate(180);
+                break;
+            case ExifInterface.ORIENTATION_FLIP_VERTICAL:
+                matrix.setRotate(180);
+                matrix.postScale(-1, 1);
+                break;
+            case ExifInterface.ORIENTATION_TRANSPOSE:
+                matrix.setRotate(90);
+                matrix.postScale(-1, 1);
+                break;
+            case ExifInterface.ORIENTATION_ROTATE_90:
+                matrix.setRotate(90);
+                break;
+            case ExifInterface.ORIENTATION_TRANSVERSE:
+                matrix.setRotate(-90);
+                matrix.postScale(-1, 1);
+                break;
+            case ExifInterface.ORIENTATION_ROTATE_270:
+                matrix.setRotate(-90);
+                break;
+            default:
+                return bitmap;
+        }
+        try {
+            Bitmap bmRotated = Bitmap.createBitmap(bitmap, 0, 0, bitmap.getWidth(), bitmap.getHeight(), matrix, true);
+            bitmap.recycle();
+            return bmRotated;
+        }
+        catch (OutOfMemoryError e) {
+            e.printStackTrace();
+            return null;
+        }
+    }
 }
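`rotateBitmap()` maps each EXIF orientation tag to a rotation, a horizontal mirror, or both, applied in that order (`setRotate` followed by `postScale`). To check the mapping without a device, the sketch below applies the same per-case transforms to a small `int[][]` pixel grid. Assumptions: the numeric values are the EXIF orientation codes 1 to 8 that `ExifInterface.ORIENTATION_NORMAL` through `ORIENTATION_ROTATE_270` stand for, positive angles mean clockwise as with an Android `Matrix` (y axis pointing down), and the class and helper names are hypothetical.

```java
import java.util.Arrays;

// Hypothetical off-device model of Utils.rotateBitmap(); pixels are grid[row][col].
class ExifOrientation {
    // EXIF orientation codes, matching the ExifInterface.ORIENTATION_* constants.
    static final int NORMAL = 1, FLIP_HORIZONTAL = 2, ROTATE_180 = 3, FLIP_VERTICAL = 4,
            TRANSPOSE = 5, ROTATE_90 = 6, TRANSVERSE = 7, ROTATE_270 = 8;

    // Rotate 90 degrees clockwise: pixel (r, c) moves to (c, h - 1 - r).
    static int[][] rotateCw(int[][] g) {
        int h = g.length, w = g[0].length;
        int[][] out = new int[w][h];
        for (int r = 0; r < h; r++)
            for (int c = 0; c < w; c++)
                out[c][h - 1 - r] = g[r][c];
        return out;
    }

    // Mirror left-right, the effect of scale(-1, 1).
    static int[][] mirror(int[][] g) {
        int h = g.length, w = g[0].length;
        int[][] out = new int[h][w];
        for (int r = 0; r < h; r++)
            for (int c = 0; c < w; c++)
                out[r][w - 1 - c] = g[r][c];
        return out;
    }

    static int[][] rotate(int[][] g, int quarterTurnsCw) {
        int turns = ((quarterTurnsCw % 4) + 4) % 4; // -1 becomes 3 clockwise quarter turns
        for (int i = 0; i < turns; i++) {
            g = rotateCw(g);
        }
        return g;
    }

    // Same case analysis as rotateBitmap(): rotate first, then mirror.
    static int[][] apply(int[][] g, int orientation) {
        switch (orientation) {
            case FLIP_HORIZONTAL: return mirror(g);
            case ROTATE_180:      return rotate(g, 2);
            case FLIP_VERTICAL:   return mirror(rotate(g, 2));
            case TRANSPOSE:       return mirror(rotate(g, 1));
            case ROTATE_90:       return rotate(g, 1);
            case TRANSVERSE:      return mirror(rotate(g, -1));
            case ROTATE_270:      return rotate(g, -1);
            default:              return g; // NORMAL, UNDEFINED (0) and unknown values
        }
    }

    public static void main(String[] args) {
        int[][] img = {{1, 2}, {3, 4}};
        System.out.println(Arrays.deepToString(apply(img, ROTATE_90))); // [[3, 1], [4, 2]]
    }
}
```

Missing or unknown tags (including `0`, `ORIENTATION_UNDEFINED`) fall through to the default case and leave the image unchanged, which is also a safe fallback when the EXIF data cannot be read.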

@@ -0,0 +1,4 @@
+<?xml version="1.0" encoding="utf-8"?>
+<paths xmlns:android="http://schemas.android.com/apk/res/android">
+    <external-files-path name="my_images" path="Pictures" />
+</paths>
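With this `file_paths.xml`, `FileProvider.getUriForFile()` only shares files under the app's external `Pictures` directory and publishes them under the alias `my_images` rather than the real path. The authority string passed to `getUriForFile` in `MainActivity` must match `android:authorities` in the manifest; in this commit both are `com.baidu.paddle.lite.demo.ocr.fileprovider`. The sketch below models the resulting URI shape, `content://<authority>/<name>/<path relative to the root>`, in plain Java. `ContentUriSketch` is hypothetical, it skips the percent-encoding the real provider applies, and the device path in `main` is a made-up example.

```java
import java.io.File;
import java.io.IOException;

// Hypothetical model of FileProvider's path-to-URI mapping for one
// <external-files-path name="my_images" path="Pictures" /> entry.
class ContentUriSketch {
    static String uriFor(String authority, String name, File root, File file) throws IOException {
        String rootPath = root.getCanonicalPath();
        String filePath = file.getCanonicalPath();
        // Files outside the configured root cannot be shared; FileProvider
        // rejects them with an IllegalArgumentException as well.
        if (!filePath.startsWith(rootPath + File.separator)) {
            throw new IllegalArgumentException("Failed to find configured root that contains " + filePath);
        }
        String relative = filePath.substring(rootPath.length() + 1).replace(File.separatorChar, '/');
        // No percent-encoding here, unlike the real implementation.
        return "content://" + authority + "/" + name + "/" + relative;
    }

    public static void main(String[] args) throws IOException {
        // Made-up example of an app's external Pictures directory.
        File root = new File("/storage/emulated/0/Android/data/com.baidu.paddle.lite.demo.ocr/files/Pictures");
        System.out.println(uriFor("com.baidu.paddle.lite.demo.ocr.fileprovider", "my_images",
                root, new File(root, "JPEG_20200723_080509_123.bmp")));
    }
}
```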

@@ -1,8 +1,17 @@
 project(ocr_system CXX C)
 
 option(WITH_MKL "Compile demo with MKL/OpenBlas support, default use MKL." ON)
 option(WITH_GPU "Compile demo with GPU/CPU, default use CPU." OFF)
 option(WITH_STATIC_LIB "Compile demo with static/shared library, default use static." ON)
-option(USE_TENSORRT "Compile demo with TensorRT." OFF)
+option(WITH_TENSORRT "Compile demo with TensorRT." OFF)
+
+SET(PADDLE_LIB "" CACHE PATH "Location of libraries")
+SET(OPENCV_DIR "" CACHE PATH "Location of libraries")
+SET(CUDA_LIB "" CACHE PATH "Location of libraries")
+SET(CUDNN_LIB "" CACHE PATH "Location of libraries")
+SET(TENSORRT_DIR "" CACHE PATH "Compile demo with TensorRT")
+
+set(DEMO_NAME "ocr_system")
+
 
 macro(safe_set_static_flag)

@@ -15,24 +24,60 @@ macro(safe_set_static_flag)
     endforeach(flag_var)
 endmacro()
 
-set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11 -g -fpermissive")
-set(CMAKE_STATIC_LIBRARY_PREFIX "")
-message("flags" ${CMAKE_CXX_FLAGS})
-set(CMAKE_CXX_FLAGS_RELEASE "-O3")
+if (WITH_MKL)
+  ADD_DEFINITIONS(-DUSE_MKL)
+endif()
 
 if(NOT DEFINED PADDLE_LIB)
   message(FATAL_ERROR "please set PADDLE_LIB with -DPADDLE_LIB=/path/paddle/lib")
 endif()
-if(NOT DEFINED DEMO_NAME)
-  message(FATAL_ERROR "please set DEMO_NAME with -DDEMO_NAME=demo_name")
+if(NOT DEFINED OPENCV_DIR)
+  message(FATAL_ERROR "please set OPENCV_DIR with -DOPENCV_DIR=/path/opencv")
 endif()
 
 
-set(OPENCV_DIR ${OPENCV_DIR})
-find_package(OpenCV REQUIRED PATHS ${OPENCV_DIR}/share/OpenCV NO_DEFAULT_PATH)
+if (WIN32)
+  include_directories("${PADDLE_LIB}/paddle/fluid/inference")
+  include_directories("${PADDLE_LIB}/paddle/include")
+  link_directories("${PADDLE_LIB}/paddle/fluid/inference")
+  find_package(OpenCV REQUIRED PATHS ${OPENCV_DIR}/build/ NO_DEFAULT_PATH)
+
+else ()
+  find_package(OpenCV REQUIRED PATHS ${OPENCV_DIR}/share/OpenCV NO_DEFAULT_PATH)
+  include_directories("${PADDLE_LIB}/paddle/include")
+  link_directories("${PADDLE_LIB}/paddle/lib")
+endif ()
 include_directories(${OpenCV_INCLUDE_DIRS})
 
-include_directories("${PADDLE_LIB}/paddle/include")
+if (WIN32)
+  add_definitions("/DGOOGLE_GLOG_DLL_DECL=")
+  set(CMAKE_C_FLAGS_DEBUG "${CMAKE_C_FLAGS_DEBUG} /bigobj /MTd")
+  set(CMAKE_C_FLAGS_RELEASE "${CMAKE_C_FLAGS_RELEASE} /bigobj /MT")
+  set(CMAKE_CXX_FLAGS_DEBUG "${CMAKE_CXX_FLAGS_DEBUG} /bigobj /MTd")
+  set(CMAKE_CXX_FLAGS_RELEASE "${CMAKE_CXX_FLAGS_RELEASE} /bigobj /MT")
+  if (WITH_STATIC_LIB)
+    safe_set_static_flag()
+    add_definitions(-DSTATIC_LIB)
+  endif()
+else()
+  set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -g -o3 -std=c++11")
+  set(CMAKE_STATIC_LIBRARY_PREFIX "")
+endif()
+message("flags" ${CMAKE_CXX_FLAGS})
+
+
+if (WITH_GPU)
+  if (NOT DEFINED CUDA_LIB OR ${CUDA_LIB} STREQUAL "")
+    message(FATAL_ERROR "please set CUDA_LIB with -DCUDA_LIB=/path/cuda-8.0/lib64")
+  endif()
+  if (NOT WIN32)
+    if (NOT DEFINED CUDNN_LIB)
+      message(FATAL_ERROR "please set CUDNN_LIB with -DCUDNN_LIB=/path/cudnn_v7.4/cuda/lib64")
+    endif()
+  endif(NOT WIN32)
+endif()
+
 include_directories("${PADDLE_LIB}/third_party/install/protobuf/include")
 include_directories("${PADDLE_LIB}/third_party/install/glog/include")
 include_directories("${PADDLE_LIB}/third_party/install/gflags/include")

@@ -43,10 +88,12 @@ include_directories("${PADDLE_LIB}/third_party/eigen3")
 
 include_directories("${CMAKE_SOURCE_DIR}/")
 
-if (USE_TENSORRT AND WITH_GPU)
-  include_directories("${TENSORRT_ROOT}/include")
-  link_directories("${TENSORRT_ROOT}/lib")
-endif()
+if (NOT WIN32)
+  if (WITH_TENSORRT AND WITH_GPU)
+    include_directories("${TENSORRT_DIR}/include")
+    link_directories("${TENSORRT_DIR}/lib")
+  endif()
+endif(NOT WIN32)
 
 link_directories("${PADDLE_LIB}/third_party/install/zlib/lib")

@@ -57,17 +104,24 @@ link_directories("${PADDLE_LIB}/third_party/install/xxhash/lib")
 link_directories("${PADDLE_LIB}/paddle/lib")
 
-
-AUX_SOURCE_DIRECTORY(./src SRCS)
-add_executable(${DEMO_NAME} ${SRCS})
 
 if(WITH_MKL)
   include_directories("${PADDLE_LIB}/third_party/install/mklml/include")
-  set(MATH_LIB ${PADDLE_LIB}/third_party/install/mklml/lib/libmklml_intel${CMAKE_SHARED_LIBRARY_SUFFIX}
-    ${PADDLE_LIB}/third_party/install/mklml/lib/libiomp5${CMAKE_SHARED_LIBRARY_SUFFIX})
+  if (WIN32)
+    set(MATH_LIB ${PADDLE_LIB}/third_party/install/mklml/lib/mklml.lib
+      ${PADDLE_LIB}/third_party/install/mklml/lib/libiomp5md.lib)
+  else ()
+    set(MATH_LIB ${PADDLE_LIB}/third_party/install/mklml/lib/libmklml_intel${CMAKE_SHARED_LIBRARY_SUFFIX}
+      ${PADDLE_LIB}/third_party/install/mklml/lib/libiomp5${CMAKE_SHARED_LIBRARY_SUFFIX})
+    execute_process(COMMAND cp -r ${PADDLE_LIB}/third_party/install/mklml/lib/libmklml_intel${CMAKE_SHARED_LIBRARY_SUFFIX} /usr/lib)
+  endif ()
   set(MKLDNN_PATH "${PADDLE_LIB}/third_party/install/mkldnn")
   if(EXISTS ${MKLDNN_PATH})
     include_directories("${MKLDNN_PATH}/include")
-    set(MKLDNN_LIB ${MKLDNN_PATH}/lib/libmkldnn.so.0)
+    if (WIN32)
+      set(MKLDNN_LIB ${MKLDNN_PATH}/lib/mkldnn.lib)
+    else ()
+      set(MKLDNN_LIB ${MKLDNN_PATH}/lib/libmkldnn.so.0)
+    endif ()
   endif()
 else()
   set(MATH_LIB ${PADDLE_LIB}/third_party/install/openblas/lib/libopenblas${CMAKE_STATIC_LIBRARY_SUFFIX})

@@ -82,24 +136,66 @@ else()
       ${PADDLE_LIB}/paddle/lib/libpaddle_fluid${CMAKE_SHARED_LIBRARY_SUFFIX})
 endif()
 
-set(EXTERNAL_LIB "-lrt -ldl -lpthread -lm")
+if (NOT WIN32)
+  set(DEPS ${DEPS}
+      ${MATH_LIB} ${MKLDNN_LIB}
+      glog gflags protobuf z xxhash
+      )
+  if(EXISTS "${PADDLE_LIB}/third_party/install/snappystream/lib")
+    set(DEPS ${DEPS} snappystream)
+  endif()
+  if (EXISTS "${PADDLE_LIB}/third_party/install/snappy/lib")
+    set(DEPS ${DEPS} snappy)
+  endif()
+else()
+  set(DEPS ${DEPS}
+      ${MATH_LIB} ${MKLDNN_LIB}
+      glog gflags_static libprotobuf xxhash)
+  set(DEPS ${DEPS} libcmt shlwapi)
+  if (EXISTS "${PADDLE_LIB}/third_party/install/snappy/lib")
+    set(DEPS ${DEPS} snappy)
+  endif()
+  if(EXISTS "${PADDLE_LIB}/third_party/install/snappystream/lib")
+    set(DEPS ${DEPS} snappystream)
+  endif()
+endif(NOT WIN32)
 
-set(DEPS ${DEPS}
-    ${MATH_LIB} ${MKLDNN_LIB}
-    glog gflags protobuf z xxhash
-    ${EXTERNAL_LIB} ${OpenCV_LIBS})
 
 if(WITH_GPU)
-  if (USE_TENSORRT)
-    set(DEPS ${DEPS}
-      ${TENSORRT_ROOT}/lib/libnvinfer${CMAKE_SHARED_LIBRARY_SUFFIX})
-    set(DEPS ${DEPS}
-      ${TENSORRT_ROOT}/lib/libnvinfer_plugin${CMAKE_SHARED_LIBRARY_SUFFIX})
+  if(NOT WIN32)
+    if (WITH_TENSORRT)
+      set(DEPS ${DEPS} ${TENSORRT_DIR}/lib/libnvinfer${CMAKE_SHARED_LIBRARY_SUFFIX})
+      set(DEPS ${DEPS} ${TENSORRT_DIR}/lib/libnvinfer_plugin${CMAKE_SHARED_LIBRARY_SUFFIX})
+    endif()
+    set(DEPS ${DEPS} ${CUDA_LIB}/libcudart${CMAKE_SHARED_LIBRARY_SUFFIX})
+    set(DEPS ${DEPS} ${CUDNN_LIB}/libcudnn${CMAKE_SHARED_LIBRARY_SUFFIX})
+  else()
+    set(DEPS ${DEPS} ${CUDA_LIB}/cudart${CMAKE_STATIC_LIBRARY_SUFFIX} )
+    set(DEPS ${DEPS} ${CUDA_LIB}/cublas${CMAKE_STATIC_LIBRARY_SUFFIX} )
+    set(DEPS ${DEPS} ${CUDNN_LIB}/cudnn${CMAKE_STATIC_LIBRARY_SUFFIX})
   endif()
-  set(DEPS ${DEPS} ${CUDA_LIB}/libcudart${CMAKE_SHARED_LIBRARY_SUFFIX})
-  set(DEPS ${DEPS} ${CUDA_LIB}/libcudart${CMAKE_SHARED_LIBRARY_SUFFIX} )
-  set(DEPS ${DEPS} ${CUDA_LIB}/libcublas${CMAKE_SHARED_LIBRARY_SUFFIX} )
-  set(DEPS ${DEPS} ${CUDNN_LIB}/libcudnn${CMAKE_SHARED_LIBRARY_SUFFIX} )
 endif()
 
+
+if (NOT WIN32)
+  set(EXTERNAL_LIB "-ldl -lrt -lgomp -lz -lm -lpthread")
+  set(DEPS ${DEPS} ${EXTERNAL_LIB})
+endif()
+
+set(DEPS ${DEPS} ${OpenCV_LIBS})
+
+AUX_SOURCE_DIRECTORY(./src SRCS)
+add_executable(${DEMO_NAME} ${SRCS})
+
 target_link_libraries(${DEMO_NAME} ${DEPS})
+
+if (WIN32 AND WITH_MKL)
+  add_custom_command(TARGET ${DEMO_NAME} POST_BUILD
+    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_LIB}/third_party/install/mklml/lib/mklml.dll ./mklml.dll
+    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_LIB}/third_party/install/mklml/lib/libiomp5md.dll ./libiomp5md.dll
+    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_LIB}/third_party/install/mkldnn/lib/mkldnn.dll ./mkldnn.dll
+    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_LIB}/third_party/install/mklml/lib/mklml.dll ./release/mklml.dll
+    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_LIB}/third_party/install/mklml/lib/libiomp5md.dll ./release/libiomp5md.dll
+    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_LIB}/third_party/install/mkldnn/lib/mkldnn.dll ./release/mkldnn.dll
+  )
+endif()

@ -0,0 +1,95 @@

|

||||||

|

# Visual Studio 2019 Community CMake 编译指南

|

||||||

|

|

||||||

|

PaddleOCR在Windows 平台下基于`Visual Studio 2019 Community` 进行了测试。微软从`Visual Studio 2017`开始即支持直接管理`CMake`跨平台编译项目,但是直到`2019`才提供了稳定和完全的支持,所以如果你想使用CMake管理项目编译构建,我们推荐你使用`Visual Studio 2019`环境下构建。

|

||||||

|

|

||||||

|

|

||||||

|

## 前置条件

|

||||||

|

* Visual Studio 2019

|

||||||

|

* CUDA 9.0 / CUDA 10.0,cudnn 7+ (仅在使用GPU版本的预测库时需要)

|

||||||

|

* CMake 3.0+

|

||||||

|

|

||||||

|

请确保系统已经安装好上述基本软件,我们使用的是`VS2019`的社区版。

|

||||||

|

|

||||||

|

**下面所有示例以工作目录为 `D:\projects`演示**。

|

||||||

|

|

||||||

|

### Step1: 下载PaddlePaddle C++ 预测库 fluid_inference

|

||||||

|

|

||||||

|

PaddlePaddle C++ 预测库针对不同的`CPU`和`CUDA`版本提供了不同的预编译版本,请根据实际情况下载: [C++预测库下载列表](https://www.paddlepaddle.org.cn/documentation/docs/zh/develop/advanced_guide/inference_deployment/inference/windows_cpp_inference.html)

|

||||||

|

|

||||||

|

解压后`D:\projects\fluid_inference`目录包含内容为:

|

||||||

|

```

|

||||||

|

fluid_inference

|

||||||

|

├── paddle # paddle核心库和头文件

|

||||||

|

|

|

||||||

|

├── third_party # 第三方依赖库和头文件

|

||||||

|

|

|

||||||

|

└── version.txt # 版本和编译信息

|

||||||

|

```

|

||||||

|

|

||||||

|

### Step2: 安装配置OpenCV

|

||||||

|

|

||||||

|

1. 在OpenCV官网下载适用于Windows平台的3.4.6版本, [下载地址](https://sourceforge.net/projects/opencvlibrary/files/3.4.6/opencv-3.4.6-vc14_vc15.exe/download)

|

||||||

|

2. 运行下载的可执行文件,将OpenCV解压至指定目录,如`D:\projects\opencv`

|

||||||

|

3. 配置环境变量,如下流程所示

|

||||||

|

- 我的电脑->属性->高级系统设置->环境变量

|

||||||

|

- 在系统变量中找到Path(如没有,自行创建),并双击编辑

|

||||||

|

- 新建,将opencv路径填入并保存,如`D:\projects\opencv\build\x64\vc14\bin`

|

||||||

|

|

||||||

|

### Step3: 使用Visual Studio 2019直接编译CMake

|

||||||

|

|

||||||

|

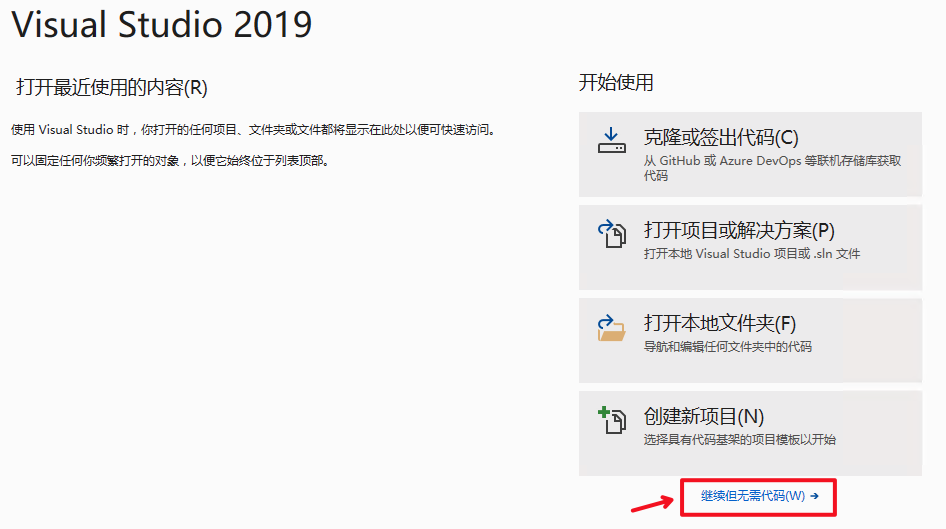

1. 打开Visual Studio 2019 Community,点击`继续但无需代码`

|

||||||

|

|

||||||

|

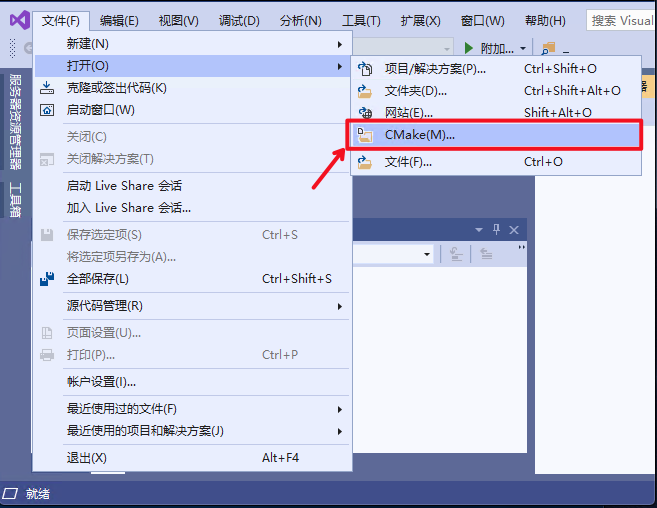

2. 点击: `文件`->`打开`->`CMake`

|

||||||

|

|

||||||

|

|

||||||

|

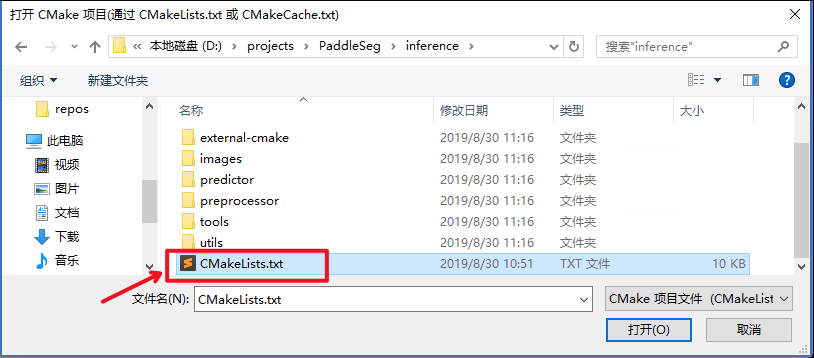

选择项目代码所在路径,并打开`CMakeList.txt`:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

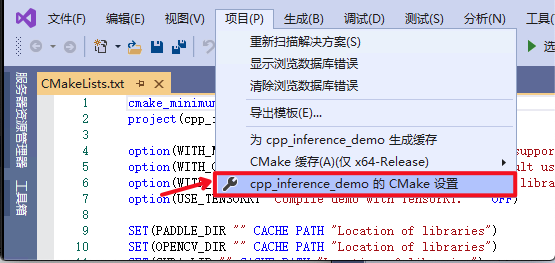

3. 点击:`项目`->`cpp_inference_demo的CMake设置`

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

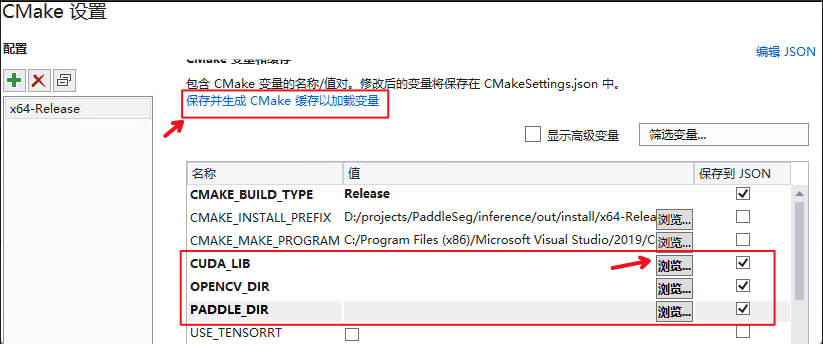

4. 点击`浏览`,分别设置编译选项指定`CUDA`、`CUDNN_LIB`、`OpenCV`、`Paddle预测库`的路径

|

||||||

|

|

||||||

|

三个编译参数的含义说明如下(带`*`表示仅在使用**GPU版本**预测库时指定, 其中CUDA库版本尽量对齐,**使用9.0、10.0版本,不使用9.2、10.1等版本CUDA库**):

|

||||||

|

|

||||||

|

| 参数名 | 含义 |

|

||||||

|

| ---- | ---- |

|

||||||

|

| *CUDA_LIB | CUDA的库路径 |

|

||||||

|

| *CUDNN_LIB | CUDNN的库路径 |

|

||||||

|

| OPENCV_DIR | OpenCV的安装路径 |

|

||||||

|

| PADDLE_LIB | Paddle预测库的路径 |

|

||||||

|

|

||||||

|

**注意:**

|

||||||

|

1. 使用`CPU`版预测库,请把`WITH_GPU`的勾去掉

|

||||||

|

2. 如果使用的是`openblas`版本,请把`WITH_MKL`勾去掉

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

**设置完成后**, 点击上图中`保存并生成CMake缓存以加载变量`。

|

||||||

|

|

||||||

|

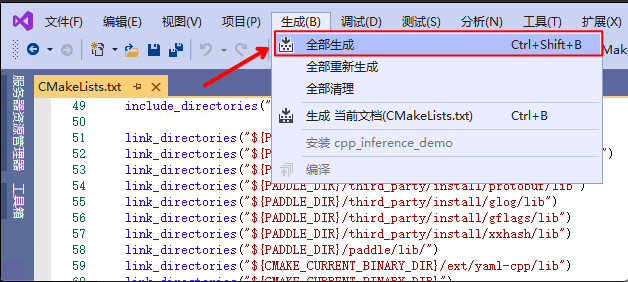

5. 点击`生成`->`全部生成`

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

### Step4: Prediction and visualization

The executable built by `Visual Studio 2019` above is in the `out\build\x64-Release` directory. Open `cmd` and switch to that directory:

```
cd D:\projects\PaddleOCR\deploy\cpp_infer\out\build\x64-Release
```

The executable `ocr_system.exe` is the sample prediction program. Its basic usage is:

```shell
# Predict the image `D:\projects\PaddleOCR\doc\imgs\10.jpg`
.\ocr_system.exe D:\projects\PaddleOCR\deploy\cpp_infer\tools\config.txt D:\projects\PaddleOCR\doc\imgs\10.jpg
```

The first argument is the path of the configuration file, and the second is the path of the image to predict.
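To process many images, it can be convenient to call the demo from a script. This is a minimal sketch using Python's `subprocess`. It assumes the argument order described above (config file, then image) and assumes the program writes UTF-8 text to stdout. `build_ocr_command` and `run_ocr` are hypothetical names.

```python
import subprocess


def build_ocr_command(exe, config_path, image_path):
    """Arguments for ocr_system.exe: the config file first, then the image."""
    return [str(exe), str(config_path), str(image_path)]


def run_ocr(exe, config_path, image_path):
    """Run the demo once and return its stdout; raises CalledProcessError on failure."""
    cmd = build_ocr_command(exe, config_path, image_path)
    result = subprocess.run(cmd, capture_output=True, check=True)
    # Assumes UTF-8 output; decoding here avoids depending on the console code page.
    return result.stdout.decode("utf-8", errors="replace")
```

Usage: `run_ocr(r".\ocr_system.exe", r"D:\projects\PaddleOCR\deploy\cpp_infer\tools\config.txt", r"D:\projects\PaddleOCR\doc\imgs\10.jpg")`. Decoding in the script also sidesteps the console encoding issue noted below.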

### Note

* When running the exe in a Windows terminal, the output may be garbled. In that case, enter `CHCP 65001` in the terminal to switch its encoding from GBK (the default) to UTF-8. For a more detailed explanation, see this blog post: [https://blog.csdn.net/qq_35038153/article/details/78430359](https://blog.csdn.net/qq_35038153/article/details/78430359).
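The garbling comes from a mismatch between the bytes the program prints and the code page the console uses to display them. Code page 936 is GBK, and 65001 is UTF-8. The Python snippet below roughly reproduces the effect, assuming the program emits UTF-8. Decoding the bytes as GBK yields unreadable text, while decoding them as UTF-8 restores the original. The function names are illustrative only.

```python
def shown_on_gbk_console(text):
    """Decode UTF-8 bytes as GBK, roughly what a console on code page 936 does."""
    return text.encode("utf-8").decode("gbk", errors="replace")


def shown_on_utf8_console(text):
    """Decode the same bytes as UTF-8, as after `CHCP 65001`."""
    return text.encode("utf-8").decode("utf-8")


sample = "预测结果"
garbled = shown_on_gbk_console(sample)
restored = shown_on_utf8_console(sample)
```

Here `restored` equals `sample`, while `garbled` is a different string of unrelated characters.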

@@ -7,6 +7,9 @@

 ### Preparation
 - Linux environment; docker is recommended.
+- Windows environment; building with `Visual Studio 2019 Community` is currently supported.
+
+* This document mainly describes the PaddleOCR C++ inference workflow on Linux. To run C++ inference with the inference library on Windows, see the [Windows build tutorial](./docs/windows_vs2019_build.md) for the build steps.

 ### 1.1 Build the opencv library


@@ -44,7 +44,7 @@ Config::LoadConfig(const std::string &config_path) {
   std::map<std::string, std::string> dict;
   for (int i = 0; i < config.size(); i++) {
     // pass for empty line or comment
-    if (config[i].size() <= 1 or config[i][0] == '#') {
+    if (config[i].size() <= 1 || config[i][0] == '#') {
       continue;
     }
     std::vector<std::string> res = split(config[i], " ");
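Replacing `or` with `||` above leaves the condition unchanged. `or` is an alternative token that MSVC presumably rejects without conformance mode (`/permissive-`) or `<ciso646>`, which is likely why it was changed for the Windows build.

To check a `config.txt` by hand, here is a Python sketch of the same skip rule: ignore lines of length <= 1 or starting with `#`, then split on spaces. This hunk does not show how `LoadConfig` stores the split fields. The sketch assumes the first field is the key and the second the value, and drops empty fields from repeated spaces. `load_config` is a hypothetical name.

```python
def load_config(text):
    """Parse 'key value' lines, applying the same skip rule as Config::LoadConfig."""
    conf = {}
    for line in text.splitlines():
        # pass for empty line or comment: length <= 1 or a leading '#'
        if len(line) <= 1 or line[0] == "#":
            continue
        # Assumption: first field is the key, second the value;
        # empty fields caused by repeated spaces are dropped.
        fields = [f for f in line.split(" ") if f]
        if len(fields) >= 2:
            conf[fields[0]] = fields[1]
    return conf


sample = """# rec config
rec_model_dir ./inference/rec_crnn
char_list_file ../../ppocr/utils/ppocr_keys_v1.txt

# show the detection results
visualize 1
"""
```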

@@ -39,22 +39,21 @@ std::vector<std::string> Utility::ReadDict(const std::string &path) {
 void Utility::VisualizeBboxes(
     const cv::Mat &srcimg,
     const std::vector<std::vector<std::vector<int>>> &boxes) {
-  cv::Point rook_points[boxes.size()][4];
-  for (int n = 0; n < boxes.size(); n++) {
-    for (int m = 0; m < boxes[0].size(); m++) {
-      rook_points[n][m] = cv::Point(int(boxes[n][m][0]), int(boxes[n][m][1]));
-    }
-  }
   cv::Mat img_vis;
   srcimg.copyTo(img_vis);
   for (int n = 0; n < boxes.size(); n++) {
-    const cv::Point *ppt[1] = {rook_points[n]};
+    cv::Point rook_points[4];
+    for (int m = 0; m < boxes[n].size(); m++) {
+      rook_points[m] = cv::Point(int(boxes[n][m][0]), int(boxes[n][m][1]));
+    }
+
+    const cv::Point *ppt[1] = {rook_points};
     int npt[] = {4};
     cv::polylines(img_vis, ppt, npt, 1, 1, CV_RGB(0, 255, 0), 2, 8, 0);
   }

   cv::imwrite("./ocr_vis.png", img_vis);
-  std::cout << "The detection visualized image saved in ./ocr_vis.png.pn"
+  std::cout << "The detection visualized image saved in ./ocr_vis.png"
             << std::endl;
 }

@@ -1,8 +1,7 @@
-
 OPENCV_DIR=your_opencv_dir
 LIB_DIR=your_paddle_inference_dir
 CUDA_LIB_DIR=your_cuda_lib_dir
-CUDNN_LIB_DIR=/your_cudnn_lib_dir
+CUDNN_LIB_DIR=your_cudnn_lib_dir

 BUILD_DIR=build
 rm -rf ${BUILD_DIR}

@@ -11,7 +10,6 @@ cd ${BUILD_DIR}
 cmake .. \
     -DPADDLE_LIB=${LIB_DIR} \
     -DWITH_MKL=ON \
-    -DDEMO_NAME=ocr_system \
     -DWITH_GPU=OFF \
     -DWITH_STATIC_LIB=OFF \
     -DUSE_TENSORRT=OFF \

@@ -15,8 +15,7 @@ det_model_dir ./inference/det_db
 # rec config
 rec_model_dir ./inference/rec_crnn
 char_list_file ../../ppocr/utils/ppocr_keys_v1.txt
-img_path ../../doc/imgs/11.jpg

 # show the detection results
-visualize 0
+visualize 1


@@ -18,7 +18,7 @@ Paddle Lite is PaddlePaddle's lightweight inference engine, providing efficient inference for mobile phones and IoT devices
 1. [Docker](https://paddle-lite.readthedocs.io/zh/latest/user_guides/source_compile.html#docker)
 2. [Linux](https://paddle-lite.readthedocs.io/zh/latest/user_guides/source_compile.html#android)
 3. [MAC OS](https://paddle-lite.readthedocs.io/zh/latest/user_guides/source_compile.html#id13)
-4. [Windows](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/x86.html#windows)
+4. [Windows](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/x86.html#id4)

 ### 1.2 Prepare the inference library


@@ -84,7 +84,7 @@ Paddle-Lite provides multiple strategies to automatically optimize the original model, including

 |Model description|Detection model|Recognition model|Paddle-Lite version|
 |-|-|-|-|
-|Ultra-lightweight Chinese OCR opt-optimized model|[Download link](https://paddleocr.bj.bcebos.com/deploy/lite/ch_det_mv3_db_opt.nb)|[Download link](https://paddleocr.bj.bcebos.com/deploy/lite/ch_rec_mv3_crnn_opt.nb)|2.6.1|
+|Ultra-lightweight Chinese OCR opt-optimized model|[Download link](https://paddleocr.bj.bcebos.com/deploy/lite/ch_det_mv3_db_opt.nb)|[Download link](https://paddleocr.bj.bcebos.com/deploy/lite/ch_rec_mv3_crnn_opt.nb)|develop|

 If you deploy directly with the models in the table above, you can skip the following steps and go straight to [Section 2.2](#2.2与手机联调).


@@ -17,7 +17,7 @@ deployment solutions for end-side deployment issues.
 [build for Docker](https://paddle-lite.readthedocs.io/zh/latest/user_guides/source_compile.html#docker)
 [build for Linux](https://paddle-lite.readthedocs.io/zh/latest/user_guides/source_compile.html#android)
 [build for MAC OS](https://paddle-lite.readthedocs.io/zh/latest/user_guides/source_compile.html#id13)
-[build for windows](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/x86.html#windows)
+[build for windows](https://paddle-lite.readthedocs.io/zh/latest/demo_guides/x86.html#id4)

 ## 3. Download prebuild library for android and ios


@@ -155,7 +155,7 @@ demo/cxx/ocr/
 |-- debug/
 | |--ch_det_mv3_db_opt.nb Detection model
 | |--ch_rec_mv3_crnn_opt.nb Recognition model
-| |--11.jpg image for OCR
+| |--11.jpg Image for OCR
 | |--ppocr_keys_v1.txt Dictionary file
 | |--libpaddle_light_api_shared.so C++ .so file
 | |--config.txt Config file

@@ -0,0 +1,71 @@
# Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

from paddle_serving_client import Client
import cv2
import sys
import numpy as np
import os
from paddle_serving_client import Client
from paddle_serving_app.reader import Sequential, ResizeByFactor
from paddle_serving_app.reader import Div, Normalize, Transpose
from paddle_serving_app.reader import DBPostProcess, FilterBoxes
from paddle_serving_server_gpu.web_service import WebService
import time
import re
import base64


class OCRService(WebService):
    def init_det(self):
        self.det_preprocess = Sequential([
            ResizeByFactor(32, 960), Div(255),
            Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]), Transpose(
                (2, 0, 1))
        ])
        self.filter_func = FilterBoxes(10, 10)
        self.post_func = DBPostProcess({
            "thresh": 0.3,
            "box_thresh": 0.5,
            "max_candidates": 1000,
            "unclip_ratio": 1.5,
            "min_size": 3
        })

    def preprocess(self, feed=[], fetch=[]):
        data = base64.b64decode(feed[0]["image"].encode('utf8'))
        data = np.fromstring(data, np.uint8)
        im = cv2.imdecode(data, cv2.IMREAD_COLOR)
        self.ori_h, self.ori_w, _ = im.shape
        det_img = self.det_preprocess(im)
        _, self.new_h, self.new_w = det_img.shape
        return {"image": det_img[np.newaxis, :].copy()}, ["concat_1.tmp_0"]

    def postprocess(self, feed={}, fetch=[], fetch_map=None):
        det_out = fetch_map["concat_1.tmp_0"]
        ratio_list = [
            float(self.new_h) / self.ori_h, float(self.new_w) / self.ori_w
        ]
        dt_boxes_list = self.post_func(det_out, [ratio_list])
        dt_boxes = self.filter_func(dt_boxes_list[0], [self.ori_h, self.ori_w])
        return {"dt_boxes": dt_boxes.tolist()}


ocr_service = OCRService(name="ocr")
ocr_service.load_model_config("ocr_det_model")
ocr_service.set_gpus("0")
ocr_service.prepare_server(workdir="workdir", port=9292, device="gpu", gpuid=0)
ocr_service.init_det()
ocr_service.run_debugger_service()
ocr_service.run_web_service()
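In `postprocess`, `ratio_list` records how much preprocessing resized the image: `new_h / ori_h` and `new_w / ori_w`. The code of `DBPostProcess` is not part of this diff. Assuming it maps box points back to the original image by dividing by these ratios, the arithmetic looks like the Python sketch below. `to_original_coords` is a hypothetical helper, and the image sizes are illustrative.

```python
def to_original_coords(box, ratio_h, ratio_w):
    """Map [x, y] points on the resized input back to the original image (assumed rule)."""
    return [[round(x / ratio_w), round(y / ratio_h)] for x, y in box]


# Illustrative sizes: a 600x800 (h x w) image resized to 480x640.
ori_h, ori_w, new_h, new_w = 600, 800, 480, 640
ratio_list = [float(new_h) / ori_h, float(new_w) / ori_w]  # same formula as postprocess()
box_on_resized = [[64, 32], [640, 32], [640, 96], [64, 96]]
box_on_original = to_original_coords(box_on_resized, *ratio_list)
```

Here both ratios are 0.8, so every coordinate grows by a factor of 1.25 when mapped back.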

@@ -0,0 +1,72 @@
# Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

from paddle_serving_client import Client
import cv2
import sys
import numpy as np
import os
from paddle_serving_client import Client
from paddle_serving_app.reader import Sequential, ResizeByFactor
from paddle_serving_app.reader import Div, Normalize, Transpose
from paddle_serving_app.reader import DBPostProcess, FilterBoxes
from paddle_serving_server_gpu.web_service import WebService
import time
import re
import base64


class OCRService(WebService):
    def init_det(self):
        self.det_preprocess = Sequential([
            ResizeByFactor(32, 960), Div(255),
            Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]), Transpose(
                (2, 0, 1))
        ])
        self.filter_func = FilterBoxes(10, 10)
        self.post_func = DBPostProcess({
            "thresh": 0.3,
            "box_thresh": 0.5,
            "max_candidates": 1000,
            "unclip_ratio": 1.5,
            "min_size": 3
        })

    def preprocess(self, feed=[], fetch=[]):
        data = base64.b64decode(feed[0]["image"].encode('utf8'))
        data = np.fromstring(data, np.uint8)
        im = cv2.imdecode(data, cv2.IMREAD_COLOR)
        self.ori_h, self.ori_w, _ = im.shape
        det_img = self.det_preprocess(im)
        _, self.new_h, self.new_w = det_img.shape
        print(det_img)
        return {"image": det_img}, ["concat_1.tmp_0"]

    def postprocess(self, feed={}, fetch=[], fetch_map=None):
        det_out = fetch_map["concat_1.tmp_0"]
        ratio_list = [
            float(self.new_h) / self.ori_h, float(self.new_w) / self.ori_w
        ]
        dt_boxes_list = self.post_func(det_out, [ratio_list])
        dt_boxes = self.filter_func(dt_boxes_list[0], [self.ori_h, self.ori_w])
        return {"dt_boxes": dt_boxes.tolist()}


ocr_service = OCRService(name="ocr")
ocr_service.load_model_config("ocr_det_model")
ocr_service.set_gpus("0")
ocr_service.prepare_server(workdir="workdir", port=9292, device="gpu", gpuid=0)
ocr_service.init_det()
ocr_service.run_rpc_service()
ocr_service.run_web_service()

@@ -0,0 +1,103 @@
# Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

from paddle_serving_client import Client
from paddle_serving_app.reader import OCRReader
import cv2
import sys
import numpy as np
import os
from paddle_serving_client import Client
from paddle_serving_app.reader import Sequential, URL2Image, ResizeByFactor
from paddle_serving_app.reader import Div, Normalize, Transpose
from paddle_serving_app.reader import DBPostProcess, FilterBoxes, GetRotateCropImage, SortedBoxes
from paddle_serving_server_gpu.web_service import WebService
from paddle_serving_app.local_predict import Debugger
import time
import re
import base64


class OCRService(WebService):
    def init_det_debugger(self, det_model_config):
        self.det_preprocess = Sequential([
            ResizeByFactor(32, 960), Div(255),
            Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]), Transpose(
                (2, 0, 1))
        ])
        self.det_client = Debugger()
        self.det_client.load_model_config(
            det_model_config, gpu=True, profile=False)
        self.ocr_reader = OCRReader()

    def preprocess(self, feed=[], fetch=[]):
        data = base64.b64decode(feed[0]["image"].encode('utf8'))
        data = np.fromstring(data, np.uint8)
        im = cv2.imdecode(data, cv2.IMREAD_COLOR)
        ori_h, ori_w, _ = im.shape
        det_img = self.det_preprocess(im)
        _, new_h, new_w = det_img.shape
        det_img = det_img[np.newaxis, :]
        det_img = det_img.copy()
        det_out = self.det_client.predict(
            feed={"image": det_img}, fetch=["concat_1.tmp_0"])
        filter_func = FilterBoxes(10, 10)
        post_func = DBPostProcess({
            "thresh": 0.3,
            "box_thresh": 0.5,
            "max_candidates": 1000,
            "unclip_ratio": 1.5,
            "min_size": 3
        })
        sorted_boxes = SortedBoxes()
        ratio_list = [float(new_h) / ori_h, float(new_w) / ori_w]
        dt_boxes_list = post_func(det_out["concat_1.tmp_0"], [ratio_list])
        dt_boxes = filter_func(dt_boxes_list[0], [ori_h, ori_w])
        dt_boxes = sorted_boxes(dt_boxes)
        get_rotate_crop_image = GetRotateCropImage()
        img_list = []
        max_wh_ratio = 0
        for i, dtbox in enumerate(dt_boxes):
            boximg = get_rotate_crop_image(im, dt_boxes[i])
            img_list.append(boximg)
            h, w = boximg.shape[0:2]
            wh_ratio = w * 1.0 / h
            max_wh_ratio = max(max_wh_ratio, wh_ratio)
        if len(img_list) == 0:
            return [], []
        _, w, h = self.ocr_reader.resize_norm_img(img_list[0],
                                                  max_wh_ratio).shape
        imgs = np.zeros((len(img_list), 3, w, h)).astype('float32')
        for id, img in enumerate(img_list):
            norm_img = self.ocr_reader.resize_norm_img(img, max_wh_ratio)
            imgs[id] = norm_img
        feed = {"image": imgs.copy()}
        fetch = ["ctc_greedy_decoder_0.tmp_0", "softmax_0.tmp_0"]
        return feed, fetch

    def postprocess(self, feed={}, fetch=[], fetch_map=None):
        rec_res = self.ocr_reader.postprocess(fetch_map, with_score=True)
        res_lst = []
        for res in rec_res:
            res_lst.append(res[0])
        res = {"res": res_lst}
        return res


ocr_service = OCRService(name="ocr")
ocr_service.load_model_config("ocr_rec_model")
ocr_service.prepare_server(workdir="workdir", port=9292)
ocr_service.init_det_debugger(det_model_config="ocr_det_model")
ocr_service.run_debugger_service(gpu=True)
ocr_service.run_web_service()
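In `preprocess`, every cropped text region is normalized with the batch-wide `max_wh_ratio`, so all crops in a request share one width.

Note that `_, w, h = ...shape` binds the height to `w` and the width to `h`, since the array appears to be channel-height-width. The names are swapped but used consistently in `np.zeros`.

The sketch below isolates the ratio computation. `padded_width` and its fixed input height of 32 are assumptions for illustration, because `OCRReader.resize_norm_img` is not shown in this diff.

```python
import math


def max_aspect_ratio(shapes):
    """Largest width/height over crops given as (h, w), as computed in preprocess()."""
    max_wh_ratio = 0
    for h, w in shapes:
        max_wh_ratio = max(max_wh_ratio, w * 1.0 / h)
    return max_wh_ratio


def padded_width(max_wh_ratio, img_h=32):
    """Hypothetical: batch width if every crop is resized to height img_h."""
    return int(math.ceil(img_h * max_wh_ratio))


crops = [(32, 100), (16, 80), (40, 40)]  # (height, width) of three example crops
ratio = max_aspect_ratio(crops)
width = padded_width(ratio)
```

With these crops the widest ratio is 80 / 16 = 5.0, so every crop in the batch would be laid out 160 pixels wide under the assumed height.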

@@ -0,0 +1,37 @@
# Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
# -*- coding: utf-8 -*-

import requests
import json
import cv2
import base64
import os, sys
import time


def cv2_to_base64(image):
    #data = cv2.imencode('.jpg', image)[1]
    return base64.b64encode(image).decode(
        'utf8')  #data.tostring()).decode('utf8')


headers = {"Content-type": "application/json"}
url = "http://127.0.0.1:9292/ocr/prediction"
test_img_dir = "../../doc/imgs/"
for img_file in os.listdir(test_img_dir):
    with open(os.path.join(test_img_dir, img_file), 'rb') as file:
        image_data1 = file.read()
    image = cv2_to_base64(image_data1)
    data = {"feed": [{"image": image}], "fetch": ["res"]}
    r = requests.post(url=url, headers=headers, data=json.dumps(data))
    print(r.json())
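Despite its name, `cv2_to_base64` in the client receives the raw file bytes, so the request body can be built without OpenCV. Here is a sketch of the same JSON body using only the standard library. `build_payload` is a hypothetical helper.

```python
import base64
import json


def build_payload(image_bytes):
    """JSON body matching the client above: one base64 image in 'feed', fetching 'res'."""
    image = base64.b64encode(image_bytes).decode("utf8")
    return json.dumps({"feed": [{"image": image}], "fetch": ["res"]})
```

With the client's `url` and `headers`, `requests.post(url=url, headers=headers, data=build_payload(image_bytes))` would send the same request as one iteration of its loop.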

@@ -0,0 +1,99 @@
# Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

from paddle_serving_client import Client
from paddle_serving_app.reader import OCRReader
import cv2
import sys
import numpy as np
import os
from paddle_serving_client import Client
from paddle_serving_app.reader import Sequential, URL2Image, ResizeByFactor
from paddle_serving_app.reader import Div, Normalize, Transpose
from paddle_serving_app.reader import DBPostProcess, FilterBoxes, GetRotateCropImage, SortedBoxes
from paddle_serving_server_gpu.web_service import WebService
import time
import re
import base64


class OCRService(WebService):