Merge branch 'develop' of https://github.com/PaddlePaddle/PaddleOCR into develop-nblib

commit

f7ae9a5fb3

|

|

@ -21,3 +21,7 @@ output/

|

||||||

*.log

|

*.log

|

||||||

.clang-format

|

.clang-format

|

||||||

.clang_format.hook

|

.clang_format.hook

|

||||||

|

|

||||||

|

build/

|

||||||

|

dist/

|

||||||

|

paddleocr.egg-info/

|

||||||

|

|

@ -0,0 +1,8 @@

|

||||||

|

include LICENSE.txt

|

||||||

|

include README.md

|

||||||

|

|

||||||

|

recursive-include ppocr/utils *.txt utility.py character.py check.py

|

||||||

|

recursive-include ppocr/data/det *.py

|

||||||

|

recursive-include ppocr/postprocess *.py

|

||||||

|

recursive-include ppocr/postprocess/lanms *.*

|

||||||

|

recursive-include tools/infer *.py

|

||||||

282

README.md

|

|

@ -1,209 +1,139 @@

|

||||||

[English](README_en.md) | 简体中文

|

English | [简体中文](README_ch.md)

|

||||||

|

|

||||||

## 简介

|

## Introduction

|

||||||

PaddleOCR旨在打造一套丰富、领先、且实用的OCR工具库,助力使用者训练出更好的模型,并应用落地。

|

PaddleOCR aims to create rich, leading, and practical OCR tools that help users train better models and apply them in practice.

|

||||||

|

|

||||||

**近期更新**

|

**Recent updates**

|

||||||

- 2020.7.15 添加基于EasyEdge和Paddle-Lite的移动端DEMO,支持iOS和Android系统

|

- 2020.9.22 Update the PP-OCR technical article, https://arxiv.org/abs/2009.09941

|

||||||

- 2020.7.15 完善预测部署,添加基于C++预测引擎推理、服务化部署和端侧部署方案,以及超轻量级中文OCR模型预测耗时Benchmark

|

- 2020.9.19 Update the ultra lightweight compressed ppocr_mobile_slim series models; the overall model size is 3.5M (see [PP-OCR Pipeline](#PP-OCR-Pipline)), suitable for mobile deployment. [Model Downloads](#Supported-Chinese-model-list)

|

||||||

- 2020.7.15 整理OCR相关数据集、常用数据标注以及合成工具

|

- 2020.9.17 Update the ultra lightweight ppocr_mobile series and general ppocr_server series Chinese and English OCR models, which are comparable to commercial results. [Model Downloads](#Supported-Chinese-model-list)

|

||||||

- 2020.7.9 添加支持空格的识别模型,识别效果,预测及训练方式请参考快速开始和文本识别训练相关文档

|

- 2020.8.24 Support the use of PaddleOCR through whl package installation; please refer to [PaddleOCR Package](./doc/doc_en/whl_en.md)

|

||||||

- 2020.7.9 添加数据增强、学习率衰减策略,具体参考[配置文件](./doc/doc_ch/config.md)

|

- 2020.8.21 Update the replay and PPT of the Bilibili live lesson held on August 18 (lesson 2: an easy-to-learn, easy-to-use OCR toolkit). [Get Address](https://aistudio.baidu.com/aistudio/education/group/info/1519)

|

||||||

- [more](./doc/doc_ch/update.md)

|

- [more](./doc/doc_en/update_en.md)

|

||||||

|

|

||||||

## 特性

|

## Features

|

||||||

- 超轻量级中文OCR模型,总模型仅8.6M

|

- PPOCR series of high-quality pre-trained models, comparable to commercial results

|

||||||

- 单模型支持中英文数字组合识别、竖排文本识别、长文本识别

|

- Ultra lightweight ppocr_mobile series models: detection (2.6M) + direction classifier (0.9M) + recognition (4.6M) = 8.1M

|

||||||

- 检测模型DB(4.1M)+识别模型CRNN(4.5M)

|

- General ppocr_server series models: detection (47.2M) + direction classifier (0.9M) + recognition (107M) = 155.1M

|

||||||

- 实用通用中文OCR模型

|

- Ultra lightweight compression ppocr_mobile_slim series models: detection (1.4M) + direction classifier (0.5M) + recognition (1.6M) = 3.5M

|

||||||

- 多种预测推理部署方案,包括服务部署和端侧部署

|

- Support Chinese, English, and digit recognition, vertical text recognition, and long text recognition

|

||||||

- 多种文本检测训练算法,EAST、DB

|

- Support multi-language recognition: Korean, Japanese, German, French

|

||||||

- 多种文本识别训练算法,Rosetta、CRNN、STAR-Net、RARE

|

- Support user-defined training and provide rich inference deployment solutions

|

||||||

- 可运行于Linux、Windows、MacOS等多种系统

|

- Support PIP installation, easy to use

|

||||||

|

- Support Linux, Windows, MacOS and other systems
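
The totals quoted in the feature list are plain sums of the three component sizes. A minimal sketch that re-adds them, using only the figures listed above; the dictionary layout and the function name are illustrative and not part of PaddleOCR:

```python
# Component sizes in MB, copied from the feature list above.
# The dict layout and names are assumptions made for this illustration.
MODEL_SIZES_MB = {
    "ppocr_mobile": {"detection": 2.6, "direction_classifier": 0.9, "recognition": 4.6},
    "ppocr_server": {"detection": 47.2, "direction_classifier": 0.9, "recognition": 107.0},
    "ppocr_mobile_slim": {"detection": 1.4, "direction_classifier": 0.5, "recognition": 1.6},
}


def total_size_mb(series: str) -> float:
    """Sum the three component sizes of one model series, rounded to 0.1 MB."""
    return round(sum(MODEL_SIZES_MB[series].values()), 1)


for name in MODEL_SIZES_MB:
    # Print each series next to its recomputed total.
    print(f"{name}: {total_size_mb(name)}M")
```

The recomputed totals match the 8.1M, 155.1M, and 3.5M figures stated in the list.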

|

||||||

|

|

||||||

## 快速体验

|

## Visualization

|

||||||

|

|

||||||

<div align="center">

|

<div align="center">

|

||||||

<img src="doc/imgs_results/11.jpg" width="800">

|

<img src="doc/imgs_results/1101.jpg" width="800">

|

||||||

|

<img src="doc/imgs_results/1103.jpg" width="800">

|

||||||

</div>

|

</div>

|

||||||

|

|

||||||

上图是超轻量级中文OCR模型效果展示,更多效果图请见[效果展示页面](./doc/doc_ch/visualization.md)。

|

The pictures above show results from the general ppocr_server model. For more result images, please see [More visualizations](./doc/doc_en/visualization_en.md).

|

||||||

|

|

||||||

- 超轻量级中文OCR在线体验地址:https://www.paddlepaddle.org.cn/hub/scene/ocr

|

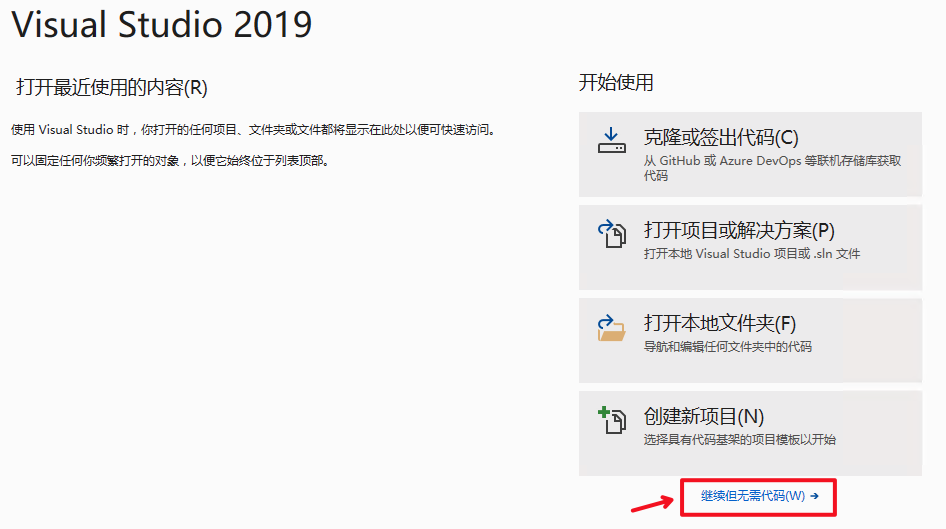

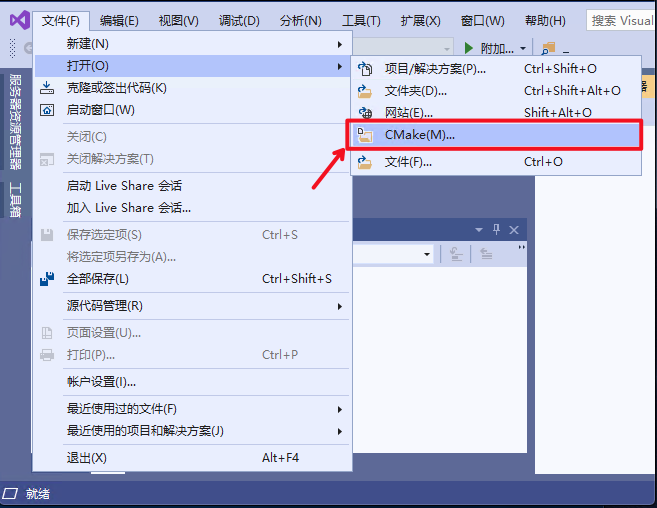

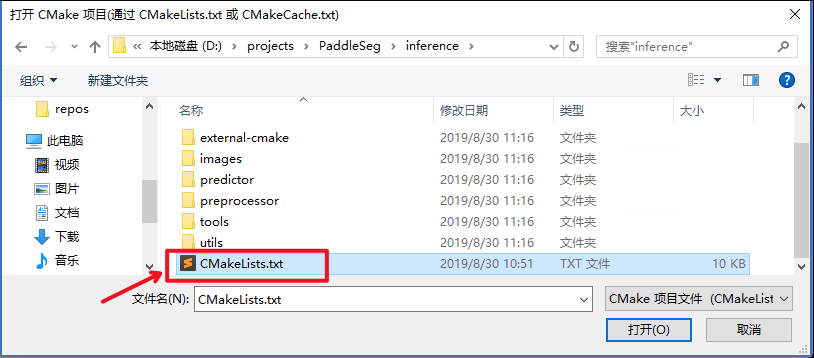

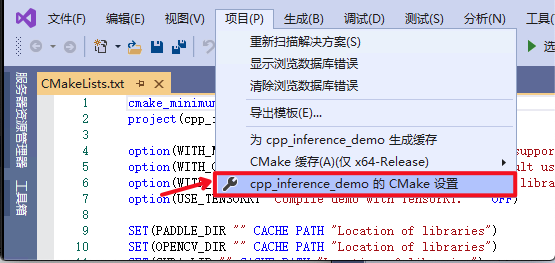

## Quick Experience

|

||||||

- 移动端DEMO体验(基于EasyEdge和Paddle-Lite, 支持iOS和Android系统):[安装包二维码获取地址](https://ai.baidu.com/easyedge/app/openSource?from=paddlelite)

|

|

||||||

|

|

||||||

Android手机也可以扫描下面二维码安装体验。

|

You can also quickly experience the ultra-lightweight OCR: [Online Experience](https://www.paddlepaddle.org.cn/hub/scene/ocr)

|

||||||

|

|

||||||

|

Mobile DEMO experience (based on EasyEdge and Paddle-Lite, supports iOS and Android systems): [Sign in to the website to obtain the QR code for installing the App](https://ai.baidu.com/easyedge/app/openSource?from=paddlelite)

|

||||||

|

|

||||||

|

You can also scan the QR code below to install the App (**Android only**)

|

||||||

|

|

||||||

<div align="center">

|

<div align="center">

|

||||||

<img src="./doc/ocr-android-easyedge.png" width = "200" height = "200" />

|

<img src="./doc/ocr-android-easyedge.png" width = "200" height = "200" />

|

||||||

</div>

|

</div>

|

||||||

|

|

||||||

- [**中文OCR模型快速使用**](./doc/doc_ch/quickstart.md)

|

- [**OCR Quick Start**](./doc/doc_en/quickstart_en.md)

|

||||||

|

|

||||||

|

<a name="Supported-Chinese-model-list"></a>

|

||||||

|

|

||||||

|

## PP-OCR 1.1 series model list (Updated on Sep 17)

|

||||||

|

|

||||||

|

| Model introduction | Model name | Recommended scene | Detection model | Direction classifier | Recognition model |

|

||||||

|

| ------------------------------------------------------------ | ---------------------------- | ----------------- | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ |

|

||||||

|

| Chinese and English ultra-lightweight OCR model (8.1M) | ch_ppocr_mobile_v1.1_xx | Mobile & server | [inference model](https://paddleocr.bj.bcebos.com/20-09-22/mobile/det/ch_ppocr_mobile_v1.1_det_infer.tar) / [pre-trained model](https://paddleocr.bj.bcebos.com/20-09-22/mobile/det/ch_ppocr_mobile_v1.1_det_train.tar) | [inference model](https://paddleocr.bj.bcebos.com/20-09-22/cls/ch_ppocr_mobile_v1.1_cls_infer.tar) / [pre-trained model](https://paddleocr.bj.bcebos.com/20-09-22/cls/ch_ppocr_mobile_v1.1_cls_train.tar) | [inference model](https://paddleocr.bj.bcebos.com/20-09-22/mobile/rec/ch_ppocr_mobile_v1.1_rec_infer.tar) / [pre-trained model](https://paddleocr.bj.bcebos.com/20-09-22/mobile/rec/ch_ppocr_mobile_v1.1_rec_pre.tar) |

|

||||||

|

| Chinese and English general OCR model (155.1M) | ch_ppocr_server_v1.1_xx | Server | [inference model](https://paddleocr.bj.bcebos.com/20-09-22/server/det/ch_ppocr_server_v1.1_det_infer.tar) / [pre-trained model](https://paddleocr.bj.bcebos.com/20-09-22/server/det/ch_ppocr_server_v1.1_det_train.tar) | [inference model](https://paddleocr.bj.bcebos.com/20-09-22/cls/ch_ppocr_mobile_v1.1_cls_infer.tar) / [pre-trained model](https://paddleocr.bj.bcebos.com/20-09-22/cls/ch_ppocr_mobile_v1.1_cls_train.tar) | [inference model](https://paddleocr.bj.bcebos.com/20-09-22/server/rec/ch_ppocr_server_v1.1_rec_infer.tar) / [pre-trained model](https://paddleocr.bj.bcebos.com/20-09-22/server/rec/ch_ppocr_server_v1.1_rec_pre.tar) |

|

||||||

|

| Chinese and English ultra-lightweight compressed OCR model (3.5M) | ch_ppocr_mobile_slim_v1.1_xx | Mobile | [inference model](https://paddleocr.bj.bcebos.com/20-09-22/mobile-slim/det/ch_ppocr_mobile_v1.1_det_prune_infer.tar) / [slim model](https://paddleocr.bj.bcebos.com/20-09-22/mobile-slim/det/ch_ppocr_mobile_v1.1_det_prune_opt.nb) | [inference model](https://paddleocr.bj.bcebos.com/20-09-22/cls/ch_ppocr_mobile_v1.1_cls_quant_infer.tar) / [slim model](https://paddleocr.bj.bcebos.com/20-09-22/cls/ch_ppocr_mobile_cls_quant_opt.nb) | [inference model](https://paddleocr.bj.bcebos.com/20-09-22/mobile-slim/rec/ch_ppocr_mobile_v1.1_rec_quant_infer.tar) / [slim model](https://paddleocr.bj.bcebos.com/20-09-22/mobile-slim/rec/ch_ppocr_mobile_v1.1_rec_quant_opt.nb) |

|

||||||

|

|

||||||

|

For more model downloads (including multiple languages), please refer to [PP-OCR v1.1 series model downloads](./doc/doc_en/models_list_en.md)
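
The `.tar` links in the table can be fetched and unpacked with the Python standard library alone. The sketch below is one way to do that; the helper names and the `inference` target directory are assumptions for illustration, and the URL is copied from the mobile detection row of the table. The `.nb` slim models are single files rather than archives, so they would only need the download step.

```python
import tarfile
import urllib.request
from pathlib import Path
from urllib.parse import urlparse

# URL copied from the model table (mobile detection, inference model).
DET_URL = "https://paddleocr.bj.bcebos.com/20-09-22/mobile/det/ch_ppocr_mobile_v1.1_det_infer.tar"


def local_name(url: str) -> str:
    """Return the file-name component of a download URL."""
    return Path(urlparse(url).path).name


def fetch_and_extract(url: str, dest_dir: str = "inference") -> Path:
    """Download a .tar archive and unpack it under dest_dir (hypothetical helper).

    Only unpack archives from sources you trust.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    archive = dest / local_name(url)
    if not archive.exists():
        # Skip the download when the archive is already present.
        urllib.request.urlretrieve(url, archive)
    with tarfile.open(archive) as tar:
        tar.extractall(dest)
    return dest
```

Calling `fetch_and_extract(DET_URL)` would place the archive and its contents under `./inference`; the same call works for the classifier and recognition links.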

|

||||||

|

|

||||||

|

|

||||||

## 中文OCR模型列表

|

## Tutorials

|

||||||

|

- [Installation](./doc/doc_en/installation_en.md)

|

||||||

|

- [Quick Start](./doc/doc_en/quickstart_en.md)

|

||||||

|

- [Code Structure](./doc/doc_en/tree_en.md)

|

||||||

|

- Algorithm introduction

|

||||||

|

- [Text Detection Algorithm](./doc/doc_en/algorithm_overview_en.md)

|

||||||

|

- [Text Recognition Algorithm](./doc/doc_en/algorithm_overview_en.md)

|

||||||

|

- [PP-OCR Pipeline](#PP-OCR-Pipline)

|

||||||

|

- Model training/evaluation

|

||||||

|

- [Text Detection](./doc/doc_en/detection_en.md)

|

||||||

|

- [Text Recognition](./doc/doc_en/recognition_en.md)

|

||||||

|

- [Direction Classification](./doc/doc_en/angle_class_en.md)

|

||||||

|

- [Yml Configuration](./doc/doc_en/config_en.md)

|

||||||

|

- Inference and Deployment

|

||||||

|

- [Quick inference based on pip](./doc/doc_en/whl_en.md)

|

||||||

|

- [Python Inference](./doc/doc_en/inference_en.md)

|

||||||

|

- [C++ Inference](./deploy/cpp_infer/readme_en.md)

|

||||||

|

- [Serving](./deploy/hubserving/readme_en.md)

|

||||||

|

- [Mobile](./deploy/lite/readme_en.md)

|

||||||

|

- [Model Quantization](./deploy/slim/quantization/README_en.md)

|

||||||

|

- [Model Compression](./deploy/slim/prune/README_en.md)

|

||||||

|

- [Benchmark](./doc/doc_en/benchmark_en.md)

|

||||||

|

- Datasets

|

||||||

|

- [General OCR Datasets(Chinese/English)](./doc/doc_en/datasets_en.md)

|

||||||

|

- [HandWritten_OCR_Datasets(Chinese)](./doc/doc_en/handwritten_datasets_en.md)

|

||||||

|

- [Various OCR Datasets(multilingual)](./doc/doc_en/vertical_and_multilingual_datasets_en.md)

|

||||||

|

- [Data Annotation Tools](./doc/doc_en/data_annotation_en.md)

|

||||||

|

- [Data Synthesis Tools](./doc/doc_en/data_synthesis_en.md)

|

||||||

|

- [Visualization](#Visualization)

|

||||||

|

- [FAQ](./doc/doc_en/FAQ_en.md)

|

||||||

|

- [Community](#Community)

|

||||||

|

- [References](./doc/doc_en/reference_en.md)

|

||||||

|

- [License](#LICENSE)

|

||||||

|

- [Contribution](#CONTRIBUTION)

|

||||||

|

|

||||||

|模型名称|模型简介|检测模型地址|识别模型地址|支持空格的识别模型地址|

|

<a name="PP-OCR-Pipline"></a>

|

||||||

|-|-|-|-|-|

|

|

||||||

|chinese_db_crnn_mobile|超轻量级中文OCR模型|[inference模型](https://paddleocr.bj.bcebos.com/ch_models/ch_det_mv3_db_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/ch_models/ch_det_mv3_db.tar)|[inference模型](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_mv3_crnn_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_mv3_crnn.tar)|[inference模型](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_mv3_crnn_enhance_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_mv3_crnn_enhance.tar)

|

|

||||||

|chinese_db_crnn_server|通用中文OCR模型|[inference模型](https://paddleocr.bj.bcebos.com/ch_models/ch_det_r50_vd_db_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/ch_models/ch_det_r50_vd_db.tar)|[inference模型](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_r34_vd_crnn_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_r34_vd_crnn.tar)|[inference模型](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_r34_vd_crnn_enhance_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_r34_vd_crnn_enhance.tar)

|

|

||||||

|

|

||||||

## 文档教程

|

## PP-OCR Pipeline

|

||||||

- [快速安装](./doc/doc_ch/installation.md)

|

|

||||||

- [中文OCR模型快速使用](./doc/doc_ch/quickstart.md)

|

|

||||||

- 算法介绍

|

|

||||||

- [文本检测](#文本检测算法)

|

|

||||||

- [文本识别](#文本识别算法)

|

|

||||||

- [端到端OCR](#端到端OCR算法)

|

|

||||||

- 模型训练/评估

|

|

||||||

- [文本检测](./doc/doc_ch/detection.md)

|

|

||||||

- [文本识别](./doc/doc_ch/recognition.md)

|

|

||||||

- [yml参数配置文件介绍](./doc/doc_ch/config.md)

|

|

||||||

- 中文OCR训练预测技巧

|

|

||||||

- 预测部署

|

|

||||||

- [基于Python预测引擎推理](./doc/doc_ch/inference.md)

|

|

||||||

- [基于C++预测引擎推理](./deploy/cpp_infer/readme.md)

|

|

||||||

- [服务化部署](./doc/doc_ch/serving.md)

|

|

||||||

- [端侧部署](./deploy/lite/readme.md)

|

|

||||||

- 模型量化压缩

|

|

||||||

- [Benchmark](./doc/doc_ch/benchmark.md)

|

|

||||||

- 数据集

|

|

||||||

- [通用中英文OCR数据集](./doc/doc_ch/datasets.md)

|

|

||||||

- [手写中文OCR数据集](./doc/doc_ch/handwritten_datasets.md)

|

|

||||||

- 垂类多语言OCR数据集

|

|

||||||

- [常用数据标注工具](./doc/doc_ch/data_annotation.md)

|

|

||||||

- [常用数据合成工具](./doc/doc_ch/data_synthesis.md)

|

|

||||||

- [FAQ](#FAQ)

|

|

||||||

- 效果展示

|

|

||||||

- [超轻量级中文OCR效果展示](#超轻量级中文OCR效果展示)

|

|

||||||

- [通用中文OCR效果展示](#通用中文OCR效果展示)

|

|

||||||

- [支持空格的中文OCR效果展示](#支持空格的中文OCR效果展示)

|

|

||||||

- [技术交流群](#欢迎加入PaddleOCR技术交流群)

|

|

||||||

- [参考文献](./doc/doc_ch/reference.md)

|

|

||||||

- [许可证书](#许可证书)

|

|

||||||

- [贡献代码](#贡献代码)

|

|

||||||

|

|

||||||

<a name="算法介绍"></a>

|

|

||||||

## 算法介绍

|

|

||||||

<a name="文本检测算法"></a>

|

|

||||||

### 1.文本检测算法

|

|

||||||

|

|

||||||

PaddleOCR开源的文本检测算法列表:

|

|

||||||

- [x] EAST([paper](https://arxiv.org/abs/1704.03155))

|

|

||||||

- [x] DB([paper](https://arxiv.org/abs/1911.08947))

|

|

||||||

- [ ] SAST([paper](https://arxiv.org/abs/1908.05498))(百度自研, comming soon)

|

|

||||||

|

|

||||||

在ICDAR2015文本检测公开数据集上,算法效果如下:

|

|

||||||

|

|

||||||

|模型|骨干网络|precision|recall|Hmean|下载链接|

|

|

||||||

|-|-|-|-|-|-|

|

|

||||||

|EAST|ResNet50_vd|88.18%|85.51%|86.82%|[下载链接](https://paddleocr.bj.bcebos.com/det_r50_vd_east.tar)|

|

|

||||||

|EAST|MobileNetV3|81.67%|79.83%|80.74%|[下载链接](https://paddleocr.bj.bcebos.com/det_mv3_east.tar)|

|

|

||||||

|DB|ResNet50_vd|83.79%|80.65%|82.19%|[下载链接](https://paddleocr.bj.bcebos.com/det_r50_vd_db.tar)|

|

|

||||||

|DB|MobileNetV3|75.92%|73.18%|74.53%|[下载链接](https://paddleocr.bj.bcebos.com/det_mv3_db.tar)|
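
The Hmean column in the table above is the harmonic mean of precision and recall. A short sketch that recomputes it from the table's own precision and recall values; the row tuples are copied from the table, and the function name is an illustrative choice:

```python
def hmean(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (model, backbone, precision %, recall %, reported Hmean %) from the table above.
ROWS = [
    ("EAST", "ResNet50_vd", 88.18, 85.51, 86.82),
    ("EAST", "MobileNetV3", 81.67, 79.83, 80.74),
    ("DB", "ResNet50_vd", 83.79, 80.65, 82.19),
    ("DB", "MobileNetV3", 75.92, 73.18, 74.53),
]

for model, backbone, p, r, reported in ROWS:
    # Show the recomputed value next to the reported one.
    print(f"{model:5s} {backbone:12s} computed={hmean(p, r):.2f} reported={reported:.2f}")
```

The recomputed values agree with the reported ones to within about 0.01 percentage points; the small gap is expected because the precision and recall in the table are already rounded.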

|

|

||||||

|

|

||||||

使用[LSVT](https://github.com/PaddlePaddle/PaddleOCR/blob/develop/doc/doc_ch/datasets.md#1icdar2019-lsvt)街景数据集共3w张数据,训练中文检测模型的相关配置和预训练文件如下:

|

|

||||||

|模型|骨干网络|配置文件|预训练模型|

|

|

||||||

|-|-|-|-|

|

|

||||||

|超轻量中文模型|MobileNetV3|det_mv3_db.yml|[下载链接](https://paddleocr.bj.bcebos.com/ch_models/ch_det_mv3_db.tar)|

|

|

||||||

|通用中文OCR模型|ResNet50_vd|det_r50_vd_db.yml|[下载链接](https://paddleocr.bj.bcebos.com/ch_models/ch_det_r50_vd_db.tar)|

|

|

||||||

|

|

||||||

* 注: 上述DB模型的训练和评估,需设置后处理参数box_thresh=0.6,unclip_ratio=1.5,使用不同数据集、不同模型训练,可调整这两个参数进行优化

|

|

||||||

|

|

||||||

PaddleOCR文本检测算法的训练和使用请参考文档教程中[模型训练/评估中的文本检测部分](./doc/doc_ch/detection.md)。

|

|

||||||

|

|

||||||

<a name="文本识别算法"></a>

|

|

||||||

### 2.文本识别算法

|

|

||||||

|

|

||||||

PaddleOCR开源的文本识别算法列表:

|

|

||||||

- [x] CRNN([paper](https://arxiv.org/abs/1507.05717))

|

|

||||||

- [x] Rosetta([paper](https://arxiv.org/abs/1910.05085))

|

|

||||||

- [x] STAR-Net([paper](http://www.bmva.org/bmvc/2016/papers/paper043/index.html))

|

|

||||||

- [x] RARE([paper](https://arxiv.org/abs/1603.03915v1))

|

|

||||||

- [ ] SRN([paper](https://arxiv.org/abs/2003.12294))(百度自研, comming soon)

|

|

||||||

|

|

||||||

参考[DTRB](https://arxiv.org/abs/1904.01906)文字识别训练和评估流程,使用MJSynth和SynthText两个文字识别数据集训练,在IIIT, SVT, IC03, IC13, IC15, SVTP, CUTE数据集上进行评估,算法效果如下:

|

|

||||||

|

|

||||||

|模型|骨干网络|Avg Accuracy|模型存储命名|下载链接|

|

|

||||||

|-|-|-|-|-|

|

|

||||||

|Rosetta|Resnet34_vd|80.24%|rec_r34_vd_none_none_ctc|[下载链接](https://paddleocr.bj.bcebos.com/rec_r34_vd_none_none_ctc.tar)|

|

|

||||||

|Rosetta|MobileNetV3|78.16%|rec_mv3_none_none_ctc|[下载链接](https://paddleocr.bj.bcebos.com/rec_mv3_none_none_ctc.tar)|

|

|

||||||

|CRNN|Resnet34_vd|82.20%|rec_r34_vd_none_bilstm_ctc|[下载链接](https://paddleocr.bj.bcebos.com/rec_r34_vd_none_bilstm_ctc.tar)|

|

|

||||||

|CRNN|MobileNetV3|79.37%|rec_mv3_none_bilstm_ctc|[下载链接](https://paddleocr.bj.bcebos.com/rec_mv3_none_bilstm_ctc.tar)|

|

|

||||||

|STAR-Net|Resnet34_vd|83.93%|rec_r34_vd_tps_bilstm_ctc|[下载链接](https://paddleocr.bj.bcebos.com/rec_r34_vd_tps_bilstm_ctc.tar)|

|

|

||||||

|STAR-Net|MobileNetV3|81.56%|rec_mv3_tps_bilstm_ctc|[下载链接](https://paddleocr.bj.bcebos.com/rec_mv3_tps_bilstm_ctc.tar)|

|

|

||||||

|RARE|Resnet34_vd|84.90%|rec_r34_vd_tps_bilstm_attn|[下载链接](https://paddleocr.bj.bcebos.com/rec_r34_vd_tps_bilstm_attn.tar)|

|

|

||||||

|RARE|MobileNetV3|83.32%|rec_mv3_tps_bilstm_attn|[下载链接](https://paddleocr.bj.bcebos.com/rec_mv3_tps_bilstm_attn.tar)|

|

|

||||||

|

|

||||||

使用[LSVT](https://github.com/PaddlePaddle/PaddleOCR/blob/develop/doc/doc_ch/datasets.md#1icdar2019-lsvt)街景数据集根据真值将图crop出来30w数据,进行位置校准。此外基于LSVT语料生成500w合成数据训练中文模型,相关配置和预训练文件如下:

|

|

||||||

|

|

||||||

|模型|骨干网络|配置文件|预训练模型|

|

|

||||||

|-|-|-|-|

|

|

||||||

|超轻量中文模型|MobileNetV3|rec_chinese_lite_train.yml|[下载链接](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_mv3_crnn.tar)|

|

|

||||||

|通用中文OCR模型|Resnet34_vd|rec_chinese_common_train.yml|[下载链接](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_r34_vd_crnn.tar)|

|

|

||||||

|

|

||||||

PaddleOCR文本识别算法的训练和使用请参考文档教程中[模型训练/评估中的文本识别部分](./doc/doc_ch/recognition.md)。

|

|

||||||

|

|

||||||

<a name="端到端OCR算法"></a>

|

|

||||||

### 3.端到端OCR算法

|

|

||||||

- [ ] [End2End-PSL](https://arxiv.org/abs/1909.07808)(百度自研, comming soon)

|

|

||||||

|

|

||||||

## 效果展示

|

|

||||||

|

|

||||||

<a name="超轻量级中文OCR效果展示"></a>

|

|

||||||

### 1.超轻量级中文OCR效果展示 [more](./doc/doc_ch/visualization.md)

|

|

||||||

|

|

||||||

<div align="center">

|

<div align="center">

|

||||||

<img src="doc/imgs_results/1.jpg" width="800">

|

<img src="./doc/ppocr_framework.png" width="800">

|

||||||

</div>

|

</div>

|

||||||

|

|

||||||

<a name="通用中文OCR效果展示"></a>

|

PP-OCR is a practical ultra-lightweight OCR system. It consists of three parts: DB text detection, detection box rectification, and CRNN text recognition. The system applies 19 effective strategies across 8 aspects, including backbone network selection and adjustment, prediction head design, data augmentation, learning rate schedule, regularization parameter selection, use of pre-trained models, and automatic model pruning and quantization, to optimize and slim down the model in each module. The final results are an ultra-lightweight Chinese and English OCR model with an overall size of 3.5M and a 2.8M English and digit OCR model. For more details, please refer to the PP-OCR technical article (https://arxiv.org/abs/2009.09941).
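
The three-stage flow described above (detection, then correction of each detected box, then recognition) can be sketched as a small driver function. This is a structural illustration only: the stage functions are toy stand-ins that work on a list of strings instead of an image, and none of the names below come from the PaddleOCR code base.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# Simplified axis-aligned box (x_min, y_min, x_max, y_max); real detectors return quadrilaterals.
Box = Tuple[int, int, int, int]
Image = Sequence[str]  # toy "image": one string per row


@dataclass
class OcrResult:
    box: Box
    text: str
    score: float


def run_pipeline(
    image: Image,
    detect: Callable[[Image], List[Box]],
    rectify: Callable[[Image, Box], Image],
    recognize: Callable[[Image], Tuple[str, float]],
) -> List[OcrResult]:
    """Run detection, per-box correction, and recognition in sequence."""
    results = []
    for box in detect(image):
        crop = rectify(image, box)      # stage 2: crop and correct the region
        text, score = recognize(crop)   # stage 3: read the text in the region
        results.append(OcrResult(box, text, score))
    return results


# Toy stand-ins for the three stages (hypothetical, for illustration only).
def toy_detect(image: Image) -> List[Box]:
    """Report one box per non-blank row."""
    return [(0, y, len(row), y + 1) for y, row in enumerate(image) if row.strip()]


def toy_rectify(image: Image, box: Box) -> Image:
    """Crop the rows and columns covered by the box."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in image[y0:y1]]


def toy_recognize(crop: Image) -> Tuple[str, float]:
    """Return the cropped text with a dummy confidence of 1.0."""
    return "".join(crop).strip(), 1.0


for item in run_pipeline(["hello", "", "world"], toy_detect, toy_rectify, toy_recognize):
    print(item)
```

Passing the stages in as callables keeps the driver independent of any particular detector or recognizer, which mirrors how the description treats the three parts as separately optimized modules.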

|

||||||

### 2.通用中文OCR效果展示 [more](./doc/doc_ch/visualization.md)

|

|

||||||

|

## Visualization [more](./doc/doc_en/visualization_en.md)

|

||||||

|

|

||||||

<div align="center">

|

<div align="center">

|

||||||

<img src="doc/imgs_results/chinese_db_crnn_server/11.jpg" width="800">

|

<img src="./doc/imgs_results/1102.jpg" width="800">

|

||||||

|

<img src="./doc/imgs_results/1104.jpg" width="800">

|

||||||

|

<img src="./doc/imgs_results/1106.jpg" width="800">

|

||||||

|

<img src="./doc/imgs_results/1105.jpg" width="800">

|

||||||

|

<img src="./doc/imgs_results/1110.jpg" width="800">

|

||||||

|

<img src="./doc/imgs_results/1112.jpg" width="800">

|

||||||

</div>

|

</div>

|

||||||

|

|

||||||

<a name="支持空格的中文OCR效果展示"></a>

|

<a name="Community"></a>

|

||||||

### 3.支持空格的中文OCR效果展示 [more](./doc/doc_ch/visualization.md)

|

## Community

|

||||||

|

Scan the QR code below with WeChat and complete the questionnaire to join the official technical exchange group.

|

||||||

|

|

||||||

<div align="center">

|

<div align="center">

|

||||||

<img src="doc/imgs_results/chinese_db_crnn_server/en_paper.jpg" width="800">

|

<img src="./doc/joinus.PNG" width = "200" height = "200" />

|

||||||

</div>

|

</div>

|

||||||

|

|

||||||

<a name="FAQ"></a>

|

<a name="LICENSE"></a>

|

||||||

## FAQ

|

## License

|

||||||

1. **转换attention识别模型时报错:KeyError: 'predict'**

|

This project is released under <a href="https://github.com/PaddlePaddle/PaddleOCR/blob/master/LICENSE">Apache 2.0 license</a>

|

||||||

问题已解,请更新到最新代码。

|

|

||||||

|

|

||||||

2. **关于推理速度**

|

<a name="CONTRIBUTION"></a>

|

||||||

图片中的文字较多时,预测时间会增,可以使用--rec_batch_num设置更小预测batch num,默认值为30,可以改为10或其他数值。

|

## Contribution

|

||||||

|

We welcome all contributions to PaddleOCR and greatly appreciate your feedback.

|

||||||

|

|

||||||

3. **服务部署与移动端部署**

|

- Many thanks to [Khanh Tran](https://github.com/xxxpsyduck) and [Karl Horky](https://github.com/karlhorky) for contributing and revising the English documentation.

|

||||||

预计6月中下旬会先后发布基于Serving的服务部署方案和基于Paddle Lite的移动端部署方案,欢迎持续关注。

|

- Many thanks to [zhangxin](https://github.com/ZhangXinNan) for contributing the new visualization function, adding .gitignore, and removing the need to set PYTHONPATH manually.

|

||||||

|

- Many thanks to [lyl120117](https://github.com/lyl120117) for contributing the code for printing the network structure.

|

||||||

4. **自研算法发布时间**

|

- Thanks to [xiangyubo](https://github.com/xiangyubo) for contributing the handwritten Chinese OCR datasets.

|

||||||

自研算法SAST、SRN、End2End-PSL都将在7-8月陆续发布,敬请期待。

|

- Thanks to [authorfu](https://github.com/authorfu) for contributing the Android demo and [xiadeye](https://github.com/xiadeye) for contributing the iOS demo.

|

||||||

|

- Thanks to [BeyondYourself](https://github.com/BeyondYourself) for contributing many great suggestions and simplifying part of the code style.

|

||||||

[more](./doc/doc_ch/FAQ.md)

|

- Thanks to [tangmq](https://gitee.com/tangmq) for contributing Dockerized deployment services to PaddleOCR and supporting the rapid release of callable RESTful API services.

|

||||||

|

|

||||||

<a name="欢迎加入PaddleOCR技术交流群"></a>

|

|

||||||

## 欢迎加入PaddleOCR技术交流群

|

|

||||||

请扫描下面二维码,完成问卷填写,获取加群二维码和OCR方向的炼丹秘籍

|

|

||||||

|

|

||||||

<div align="center">

|

|

||||||

<img src="./doc/joinus.jpg" width = "200" height = "200" />

|

|

||||||

</div>

|

|

||||||

|

|

||||||

<a name="许可证书"></a>

|

|

||||||

## 许可证书

|

|

||||||

本项目的发布受<a href="https://github.com/PaddlePaddle/PaddleOCR/blob/master/LICENSE">Apache 2.0 license</a>许可认证。

|

|

||||||

|

|

||||||

<a name="贡献代码"></a>

|

|

||||||

## 贡献代码

|

|

||||||

我们非常欢迎你为PaddleOCR贡献代码,也十分感谢你的反馈。

|

|

||||||

|

|

||||||

- 非常感谢 [Khanh Tran](https://github.com/xxxpsyduck) 贡献了英文文档。

|

|

||||||

- 非常感谢 [zhangxin](https://github.com/ZhangXinNan)([Blog](https://blog.csdn.net/sdlypyzq)) 贡献新的可视化方式、添加.gitgnore、处理手动设置PYTHONPATH环境变量的问题

|

|

||||||

- 非常感谢 [lyl120117](https://github.com/lyl120117) 贡献打印网络结构的代码

|

|

||||||

- 非常感谢 [xiangyubo](https://github.com/xiangyubo) 贡献手写中文OCR数据集

|

|

||||||

|

|

|

||||||

|

|

@ -0,0 +1,141 @@

|

||||||

|

[English](README.md) | 简体中文

|

||||||

|

|

||||||

|

## 简介

|

||||||

|

PaddleOCR旨在打造一套丰富、领先、且实用的OCR工具库,助力使用者训练出更好的模型,并应用落地。

|

||||||

|

|

||||||

|

**近期更新**

|

||||||

|

- 2020.9.22 更新PP-OCR技术文章,https://arxiv.org/abs/2009.09941

|

||||||

|

- 2020.9.19 更新超轻量压缩ppocr_mobile_slim系列模型,整体模型3.5M(详见[PP-OCR Pipline](#PP-OCR)),适合在移动端部署使用。[模型下载](#模型下载)

|

||||||

|

- 2020.9.17 更新超轻量ppocr_mobile系列和通用ppocr_server系列中英文ocr模型,媲美商业效果。[模型下载](#模型下载)

|

||||||

|

- 2020.8.26 更新OCR相关的84个常见问题及解答,具体参考[FAQ](./doc/doc_ch/FAQ.md)

|

||||||

|

- 2020.8.24 支持通过whl包安装使用PaddleOCR,具体参考[Paddleocr Package使用说明](./doc/doc_ch/whl.md)

|

||||||

|

- 2020.8.21 更新8月18日B站直播课回放和PPT,课节2,易学易用的OCR工具大礼包,[获取地址](https://aistudio.baidu.com/aistudio/education/group/info/1519)

|

||||||

|

- [More](./doc/doc_ch/update.md)

|

||||||

|

|

||||||

|

|

||||||

|

## 特性

|

||||||

|

|

||||||

|

- PPOCR系列高质量预训练模型,准确的识别效果

|

||||||

|

- 超轻量ppocr_mobile移动端系列:检测(2.6M)+方向分类器(0.9M)+ 识别(4.6M)= 8.1M

|

||||||

|

- 通用ppocr_server系列:检测(47.2M)+方向分类器(0.9M)+ 识别(107M)= 155.1M

|

||||||

|

- 超轻量压缩ppocr_mobile_slim系列:检测(1.4M)+方向分类器(0.5M)+ 识别(1.6M)= 3.5M

|

||||||

|

- 支持中英文数字组合识别、竖排文本识别、长文本识别

|

||||||

|

- 支持多语言识别:韩语、日语、德语、法语

|

||||||

|

- 支持用户自定义训练,提供丰富的预测推理部署方案

|

||||||

|

- 支持PIP快速安装使用

|

||||||

|

- 可运行于Linux、Windows、MacOS等多种系统

|

||||||

|

|

||||||

|

## 效果展示

|

||||||

|

|

||||||

|

<div align="center">

|

||||||

|

<img src="doc/imgs_results/1101.jpg" width="800">

|

||||||

|

<img src="doc/imgs_results/1103.jpg" width="800">

|

||||||

|

</div>

|

||||||

|

|

||||||

|

上图是通用ppocr_server模型效果展示,更多效果图请见[效果展示页面](./doc/doc_ch/visualization.md)。

|

||||||

|

|

||||||

|

## 快速体验

|

||||||

|

- PC端:超轻量级中文OCR在线体验地址:https://www.paddlepaddle.org.cn/hub/scene/ocr

|

||||||

|

|

||||||

|

- 移动端:[安装包DEMO下载地址](https://ai.baidu.com/easyedge/app/openSource?from=paddlelite)(基于EasyEdge和Paddle-Lite, 支持iOS和Android系统),Android手机也可以直接扫描下面二维码安装体验。

|

||||||

|

|

||||||

|

|

||||||

|

<div align="center">

|

||||||

|

<img src="./doc/ocr-android-easyedge.png" width = "200" height = "200" />

|

||||||

|

</div>

|

||||||

|

|

||||||

|

- 代码体验:从[快速安装](./doc/doc_ch/installation.md) 开始

|

||||||

|

|

||||||

|

<a name="模型下载"></a>

|

||||||

|

## PP-OCR 1.1系列模型列表(9月17日更新)

|

||||||

|

|

||||||

|

| 模型简介 | 模型名称 |推荐场景 | 检测模型 | 方向分类器 | 识别模型 |

|

||||||

|

| ------------ | --------------- | ----------------|---- | ---------- | -------- |

|

||||||

|

| 中英文超轻量OCR模型(8.1M) | ch_ppocr_mobile_v1.1_xx |移动端&服务器端|[推理模型](https://paddleocr.bj.bcebos.com/20-09-22/mobile/det/ch_ppocr_mobile_v1.1_det_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/20-09-22/mobile/det/ch_ppocr_mobile_v1.1_det_train.tar)|[推理模型](https://paddleocr.bj.bcebos.com/20-09-22/cls/ch_ppocr_mobile_v1.1_cls_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/20-09-22/cls/ch_ppocr_mobile_v1.1_cls_train.tar) |[推理模型](https://paddleocr.bj.bcebos.com/20-09-22/mobile/rec/ch_ppocr_mobile_v1.1_rec_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/20-09-22/mobile/rec/ch_ppocr_mobile_v1.1_rec_pre.tar) |

|

||||||

|

| 中英文通用OCR模型(155.1M) |ch_ppocr_server_v1.1_xx|服务器端 |[推理模型](https://paddleocr.bj.bcebos.com/20-09-22/server/det/ch_ppocr_server_v1.1_det_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/20-09-22/server/det/ch_ppocr_server_v1.1_det_train.tar) |[推理模型](https://paddleocr.bj.bcebos.com/20-09-22/cls/ch_ppocr_mobile_v1.1_cls_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/20-09-22/cls/ch_ppocr_mobile_v1.1_cls_train.tar) |[推理模型](https://paddleocr.bj.bcebos.com/20-09-22/server/rec/ch_ppocr_server_v1.1_rec_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/20-09-22/server/rec/ch_ppocr_server_v1.1_rec_pre.tar) |

|

||||||

|

| 中英文超轻量压缩OCR模型(3.5M) | ch_ppocr_mobile_slim_v1.1_xx| 移动端 |[推理模型](https://paddleocr.bj.bcebos.com/20-09-22/mobile-slim/det/ch_ppocr_mobile_v1.1_det_prune_infer.tar) / [slim模型](https://paddleocr.bj.bcebos.com/20-09-22/mobile-slim/det/ch_ppocr_mobile_v1.1_det_prune_opt.nb) |[推理模型](https://paddleocr.bj.bcebos.com/20-09-22/cls/ch_ppocr_mobile_v1.1_cls_quant_infer.tar) / [slim模型](https://paddleocr.bj.bcebos.com/20-09-22/cls/ch_ppocr_mobile_cls_quant_opt.nb)| [推理模型](https://paddleocr.bj.bcebos.com/20-09-22/mobile-slim/rec/ch_ppocr_mobile_v1.1_rec_quant_infer.tar) / [slim模型](https://paddleocr.bj.bcebos.com/20-09-22/mobile-slim/rec/ch_ppocr_mobile_v1.1_rec_quant_opt.nb)|

|

||||||

|

|

||||||

|

更多模型下载(包括多语言),可以参考[PP-OCR v1.1 系列模型下载](./doc/doc_ch/models_list.md)

|

||||||

|

|

||||||

|

## 文档教程

|

||||||

|

- [快速安装](./doc/doc_ch/installation.md)

|

||||||

|

- [中文OCR模型快速使用](./doc/doc_ch/quickstart.md)

|

||||||

|

- [代码组织结构](./doc/doc_ch/tree.md)

|

||||||

|

- 算法介绍

|

||||||

|

- [文本检测](./doc/doc_ch/algorithm_overview.md)

|

||||||

|

- [文本识别](./doc/doc_ch/algorithm_overview.md)

|

||||||

|

- [PP-OCR Pipeline](#PP-OCR)

|

||||||

|

- 模型训练/评估

|

||||||

|

- [文本检测](./doc/doc_ch/detection.md)

|

||||||

|

- [文本识别](./doc/doc_ch/recognition.md)

|

||||||

|

- [方向分类器](./doc/doc_ch/angle_class.md)

|

||||||

|

- [yml参数配置文件介绍](./doc/doc_ch/config.md)

|

||||||

|

- 预测部署

|

||||||

|

- [基于pip安装whl包快速推理](./doc/doc_ch/whl.md)

|

||||||

|

- [基于Python脚本预测引擎推理](./doc/doc_ch/inference.md)

|

||||||

|

- [基于C++预测引擎推理](./deploy/cpp_infer/readme.md)

|

||||||

|

- [服务化部署](./deploy/hubserving/readme.md)

|

||||||

|

- [端侧部署](./deploy/lite/readme.md)

|

||||||

|

- [模型量化](./deploy/slim/quantization/README.md)

|

||||||

|

- [模型裁剪](./deploy/slim/prune/README.md)

|

||||||

|

- [Benchmark](./doc/doc_ch/benchmark.md)

|

||||||

|

- 数据集

|

||||||

|

- [通用中英文OCR数据集](./doc/doc_ch/datasets.md)

|

||||||

|

- [手写中文OCR数据集](./doc/doc_ch/handwritten_datasets.md)

|

||||||

|

- [垂类多语言OCR数据集](./doc/doc_ch/vertical_and_multilingual_datasets.md)

|

||||||

|

- [常用数据标注工具](./doc/doc_ch/data_annotation.md)

|

||||||

|

- [常用数据合成工具](./doc/doc_ch/data_synthesis.md)

|

||||||

|

- [效果展示](#效果展示)

|

||||||

|

- FAQ

|

||||||

|

- [【精选】OCR精选10个问题](./doc/doc_ch/FAQ.md)

|

||||||

|

- [【理论篇】OCR通用21个问题](./doc/doc_ch/FAQ.md)

|

||||||

|

- [【实战篇】PaddleOCR实战53个问题](./doc/doc_ch/FAQ.md)

|

||||||

|

- [技术交流群](#欢迎加入PaddleOCR技术交流群)

|

||||||

|

- [参考文献](./doc/doc_ch/reference.md)

|

||||||

|

- [许可证书](#许可证书)

|

||||||

|

- [贡献代码](#贡献代码)

|

||||||

|

|

||||||

|

<a name="PP-OCR"></a>

|

||||||

|

## PP-OCR Pipeline

|

||||||

|

<div align="center">

|

||||||

|

<img src="./doc/ppocr_framework.png" width="800">

|

||||||

|

</div>

|

||||||

|

|

||||||

|

PP-OCR是一个实用的超轻量OCR系统。主要由DB文本检测、检测框矫正和CRNN文本识别三部分组成。该系统从骨干网络选择和调整、预测头部的设计、数据增强、学习率变换策略、正则化参数选择、预训练模型使用以及模型自动裁剪量化8个方面,采用19个有效策略,对各个模块的模型进行效果调优和瘦身,最终得到整体大小为3.5M的超轻量中英文OCR和2.8M的英文数字OCR。更多细节请参考PP-OCR技术方案 https://arxiv.org/abs/2009.09941 。

|

||||||

|

|

||||||

|

<a name="效果展示"></a>

|

||||||

|

## 效果展示 [more](./doc/doc_ch/visualization.md)

|

||||||

|

|

||||||

|

<div align="center">

|

||||||

|

<img src="./doc/imgs_results/1102.jpg" width="800">

|

||||||

|

<img src="./doc/imgs_results/1104.jpg" width="800">

|

||||||

|

<img src="./doc/imgs_results/1106.jpg" width="800">

|

||||||

|

<img src="./doc/imgs_results/1105.jpg" width="800">

|

||||||

|

<img src="./doc/imgs_results/1110.jpg" width="800">

|

||||||

|

<img src="./doc/imgs_results/1112.jpg" width="800">

|

||||||

|

</div>

|

||||||

|

|

||||||

|

|

||||||

|

<a name="欢迎加入PaddleOCR技术交流群"></a>

|

||||||

|

## 欢迎加入PaddleOCR技术交流群

|

||||||

|

请扫描下面二维码,完成问卷填写,获取加群二维码和OCR方向的炼丹秘籍

|

||||||

|

|

||||||

|

<div align="center">

|

||||||

|

<img src="./doc/joinus.PNG" width = "200" height = "200" />

|

||||||

|

</div>

|

||||||

|

|

||||||

|

<a name="许可证书"></a>

|

||||||

|

## 许可证书

|

||||||

|

本项目的发布受<a href="https://github.com/PaddlePaddle/PaddleOCR/blob/master/LICENSE">Apache 2.0 license</a>许可认证。

|

||||||

|

|

||||||

|

<a name="贡献代码"></a>

|

||||||

|

## 贡献代码

|

||||||

|

我们非常欢迎你为PaddleOCR贡献代码,也十分感谢你的反馈。

|

||||||

|

|

||||||

|

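- Many thanks to [Khanh Tran](https://github.com/xxxpsyduck) and [Karl Horky](https://github.com/karlhorky) for contributing revisions to the English documentation.
- Many thanks to [zhangxin](https://github.com/ZhangXinNan) ([Blog](https://blog.csdn.net/sdlypyzq)) for contributing a new visualization method, adding .gitignore, and removing the need to set the PYTHONPATH environment variable manually.
- Many thanks to [lyl120117](https://github.com/lyl120117) for contributing the code for printing the network structure.
- Many thanks to [xiangyubo](https://github.com/xiangyubo) for contributing the handwritten Chinese OCR dataset.
- Many thanks to [authorfu](https://github.com/authorfu) for contributing the Android demo code and to [xiadeye](https://github.com/xiadeye) for contributing the iOS demo code.
- Many thanks to [BeyondYourself](https://github.com/BeyondYourself) for many excellent suggestions for PaddleOCR and for simplifying part of PaddleOCR's code style.
- Many thanks to [tangmq](https://gitee.com/tangmq) for adding a Dockerized deployment service to PaddleOCR, which supports quickly publishing a callable RESTful API service.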

|

||||||

302

README_en.md


|

|

@ -1,302 +0,0 @@

|

||||||

English | [简体中文](README.md)

|

|

||||||

|

|

||||||

## INTRODUCTION

|

|

||||||

PaddleOCR aims to create rich, leading, and practical OCR tools that help users train better models and put them into practice.

|

|

||||||

|

|

||||||

**Recent updates**

|

|

||||||

- 2020.7.9 Add a recognition model that supports spaces; see the [recognition results](#Space-Chinese-OCR-results). For more information, see [Recognition](./doc/doc_ch/recognition.md) and [quickstart](./doc/doc_ch/quickstart.md)

|

|

||||||

- 2020.7.9 Add data augmentation and learning rate decay strategies; see [config](./doc/doc_en/config_en.md)

|

|

||||||

- 2020.6.8 Add [dataset](./doc/doc_en/datasets_en.md) and keep updating

|

|

||||||

- 2020.6.5 Support exporting `attention` model to `inference_model`

|

|

||||||

- 2020.6.5 Support separate prediction and recognition, output result score

|

|

||||||

- [more](./doc/doc_en/update_en.md)

|

|

||||||

|

|

||||||

## FEATURES

|

|

||||||

- Lightweight Chinese OCR model, total model size is only 8.6M

|

|

||||||

- A single model supports recognition of mixed Chinese, English, and digits, as well as vertical text and long text

|

|

||||||

- Detection model DB (4.1M) + recognition model CRNN (4.5M)

|

|

||||||

- Various text detection algorithms: EAST, DB

|

|

||||||

- Various text recognition algorithms: Rosetta, CRNN, STAR-Net, RARE

|

|

||||||

|

|

||||||

<a name="Supported-Chinese-model-list"></a>

|

|

||||||

### Supported Chinese models list:

|

|

||||||

|

|

||||||

|Model Name|Description|Detection Model link|Recognition Model link|Support for space Recognition Model link|
|-|-|-|-|-|
|chinese_db_crnn_mobile|lightweight Chinese OCR model|[inference model](https://paddleocr.bj.bcebos.com/ch_models/ch_det_mv3_db_infer.tar) / [pre-trained model](https://paddleocr.bj.bcebos.com/ch_models/ch_det_mv3_db.tar)|[inference model](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_mv3_crnn_infer.tar) / [pre-trained model](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_mv3_crnn.tar)|[inference model](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_mv3_crnn_enhance_infer.tar) / [pre-trained model](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_mv3_crnn_enhance.tar)|
|chinese_db_crnn_server|General Chinese OCR model|[inference model](https://paddleocr.bj.bcebos.com/ch_models/ch_det_r50_vd_db_infer.tar) / [pre-trained model](https://paddleocr.bj.bcebos.com/ch_models/ch_det_r50_vd_db.tar)|[inference model](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_r34_vd_crnn_infer.tar) / [pre-trained model](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_r34_vd_crnn.tar)|[inference model](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_r34_vd_crnn_enhance_infer.tar) / [pre-trained model](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_r34_vd_crnn_enhance.tar)|

|

|

||||||

|

|

||||||

|

|

||||||

To test our Chinese OCR online: https://www.paddlepaddle.org.cn/hub/scene/ocr

|

|

||||||

|

|

||||||

**You can also quickly experience the lightweight Chinese OCR and General Chinese OCR models as follows:**

|

|

||||||

|

|

||||||

## **LIGHTWEIGHT CHINESE OCR AND GENERAL CHINESE OCR INFERENCE**

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

The picture above is the result of our lightweight Chinese OCR model. For more results, see the sections [lightweight Chinese OCR results](#lightweight-Chinese-OCR-results), [General Chinese OCR results](#General-Chinese-OCR-results), and [Support for space Recognition Model](#Space-Chinese-OCR-results) at the end of this document.

|

|

||||||

|

|

||||||

#### 1. ENVIRONMENT CONFIGURATION

|

|

||||||

|

|

||||||

Please see [Quick installation](./doc/doc_en/installation_en.md)

|

|

||||||

|

|

||||||

#### 2. DOWNLOAD INFERENCE MODELS

|

|

||||||

|

|

||||||

#### (1) Download lightweight Chinese OCR models

|

|

||||||

*If wget is not installed on Windows, you can copy the link into a browser to download the model. After the model is downloaded, unpack it and place it in the corresponding directory.*

|

|

||||||

|

|

||||||

Copy the detection and recognition `inference model` URLs from the [Chinese model list](#Supported-Chinese-model-list), then download and unpack them:

|

|

||||||

|

|

||||||

```
mkdir inference && cd inference
# Download the detection part of the Chinese OCR and decompress it
wget {url/of/detection/inference_model} && tar xf {name/of/detection/inference_model/package}
# Download the recognition part of the Chinese OCR and decompress it
wget {url/of/recognition/inference_model} && tar xf {name/of/recognition/inference_model/package}
cd ..
```

|

|

||||||

|

|

||||||

Taking the lightweight Chinese OCR model as an example:

|

|

||||||

|

|

||||||

```
mkdir inference && cd inference
# Download the detection part of the lightweight Chinese OCR and decompress it
wget https://paddleocr.bj.bcebos.com/ch_models/ch_det_mv3_db_infer.tar && tar xf ch_det_mv3_db_infer.tar
# Download the recognition part of the lightweight Chinese OCR and decompress it
wget https://paddleocr.bj.bcebos.com/ch_models/ch_rec_mv3_crnn_infer.tar && tar xf ch_rec_mv3_crnn_infer.tar
# Download the space-recognized part of the lightweight Chinese OCR and decompress it
wget https://paddleocr.bj.bcebos.com/ch_models/ch_rec_mv3_crnn_enhance_infer.tar && tar xf ch_rec_mv3_crnn_enhance_infer.tar

cd ..
```

|

|

||||||

|

|

||||||

After the decompression is completed, the file structure should be as follows:

|

|

||||||

|

|

||||||

```
|-inference
    |-ch_rec_mv3_crnn
        |- model
        |- params
    |-ch_det_mv3_db
        |- model
        |- params
    ...
```

|

|

||||||

|

|

||||||

#### 3. SINGLE IMAGE AND BATCH PREDICTION

|

|

||||||

|

|

||||||

The following commands run text detection and recognition in sequence. When running prediction, specify the path of a single image or an image folder with the `image_dir` parameter, the path to the detection model with `det_model_dir`, and the path to the recognition model with `rec_model_dir`. The visualized prediction results are saved to the `./inference_results` folder by default.

|

|

||||||

|

|

||||||

```bash

# Prediction on a single image by specifying image path to image_dir
python3 tools/infer/predict_system.py --image_dir="./doc/imgs/11.jpg" --det_model_dir="./inference/ch_det_mv3_db/" --rec_model_dir="./inference/ch_rec_mv3_crnn/"

# Prediction on a batch of images by specifying image folder path to image_dir
python3 tools/infer/predict_system.py --image_dir="./doc/imgs/" --det_model_dir="./inference/ch_det_mv3_db/" --rec_model_dir="./inference/ch_rec_mv3_crnn/"

# If you want to use CPU for prediction, you need to set the use_gpu parameter to False
python3 tools/infer/predict_system.py --image_dir="./doc/imgs/11.jpg" --det_model_dir="./inference/ch_det_mv3_db/" --rec_model_dir="./inference/ch_rec_mv3_crnn/" --use_gpu=False
```
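Since `image_dir` accepts either a single image or a folder, the sketch below shows one way such a parameter could be resolved into a list of files. It is only an illustration: the helper name `list_images` and the extension set are assumptions, not the code used by `predict_system.py`.

```python
import os
from typing import List

# Assumed set of image extensions; the real tool may accept a different set.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}


def list_images(image_dir: str) -> List[str]:
    """Return image paths for a single file or for every image in a folder.

    Hypothetical helper: a file path is returned as a one-element list,
    a folder is scanned (non-recursively) for known image extensions.
    """
    if os.path.isfile(image_dir):
        return [image_dir]
    if os.path.isdir(image_dir):
        return sorted(
            os.path.join(image_dir, name)
            for name in os.listdir(image_dir)
            if os.path.splitext(name)[1].lower() in IMAGE_EXTENSIONS
        )
    raise FileNotFoundError(f"no image file or folder at {image_dir!r}")
```

With a helper like this, the single-image and batch commands above differ only in what is passed as `image_dir`.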

|

|

||||||

|

|

||||||

To run inference with the General Chinese OCR model, follow the steps above to download the corresponding models and update the relevant parameters. For example:

|

|

||||||

```
# Prediction on a single image by specifying image path to image_dir
python3 tools/infer/predict_system.py --image_dir="./doc/imgs/11.jpg" --det_model_dir="./inference/ch_det_r50_vd_db/" --rec_model_dir="./inference/ch_rec_r34_vd_crnn/"
```

|

|

||||||

|

|

||||||

To run inference with the General Chinese OCR model that supports space recognition, follow the steps above to download the corresponding models and update the relevant parameters. For example:

|

|

||||||

|

|

||||||

```
# Prediction on a single image by specifying image path to image_dir
python3 tools/infer/predict_system.py --image_dir="./doc/imgs_en/img_12.jpg" --det_model_dir="./inference/ch_det_r50_vd_db/" --rec_model_dir="./inference/ch_rec_r34_vd_crnn_enhance/"
```

|

|

||||||

|

|

||||||

For more text detection and recognition models, please refer to the document [Inference](./doc/doc_en/inference_en.md)

|

|

||||||

|

|

||||||

## DOCUMENTATION

|

|

||||||

- [Quick installation](./doc/doc_en/installation_en.md)

|

|

||||||

- [Text detection model training/evaluation/prediction](./doc/doc_en/detection_en.md)

|

|

||||||

- [Text recognition model training/evaluation/prediction](./doc/doc_en/recognition_en.md)

|

|

||||||

- [Inference](./doc/doc_en/inference_en.md)

|

|

||||||

- [Introduction of yml file](./doc/doc_en/config_en.md)

|

|

||||||

- [Dataset](./doc/doc_en/datasets_en.md)

|

|

||||||

- [FAQ](#FAQ)

|

|

||||||

|

|

||||||

## TEXT DETECTION ALGORITHM

|

|

||||||

|

|

||||||

PaddleOCR open source text detection algorithms list:

|

|

||||||

- [x] EAST([paper](https://arxiv.org/abs/1704.03155))

|

|

||||||

- [x] DB([paper](https://arxiv.org/abs/1911.08947))

|

|

||||||

- [ ] SAST([paper](https://arxiv.org/abs/1908.05498))(Baidu Self-Research, coming soon)

|

|

||||||

|

|

||||||

On the ICDAR2015 dataset, the text detection result is as follows:

|

|

||||||

|

|

||||||

|Model|Backbone|Precision|Recall|Hmean|Download link|
|-|-|-|-|-|-|
|EAST|ResNet50_vd|88.18%|85.51%|86.82%|[Download link](https://paddleocr.bj.bcebos.com/det_r50_vd_east.tar)|
|EAST|MobileNetV3|81.67%|79.83%|80.74%|[Download link](https://paddleocr.bj.bcebos.com/det_mv3_east.tar)|
|DB|ResNet50_vd|83.79%|80.65%|82.19%|[Download link](https://paddleocr.bj.bcebos.com/det_r50_vd_db.tar)|
|DB|MobileNetV3|75.92%|73.18%|74.53%|[Download link](https://paddleocr.bj.bcebos.com/det_mv3_db.tar)|

|

|

||||||

|

|

||||||

Using the [LSVT](https://github.com/PaddlePaddle/PaddleOCR/blob/develop/doc/doc_en/datasets_en.md#1-icdar2019-lsvt) street view dataset, with a total of 30,000 training samples, the related configuration and pre-trained models for the Chinese detection task are as follows:

|

|

||||||

|Model|Backbone|Configuration file|Pre-trained model|
|-|-|-|-|
|lightweight Chinese model|MobileNetV3|det_mv3_db.yml|[Download link](https://paddleocr.bj.bcebos.com/ch_models/ch_det_mv3_db.tar)|
|General Chinese OCR model|ResNet50_vd|det_r50_vd_db.yml|[Download link](https://paddleocr.bj.bcebos.com/ch_models/ch_det_r50_vd_db.tar)|

|

|

||||||

|

|

||||||

* Note: For training and evaluation of the DB models above, the post-processing parameters `box_thresh=0.6` and `unclip_ratio=1.5` need to be set. When training with different datasets or different models, these two parameters can be adjusted for better results.
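To make the role of these two parameters more concrete, here is a minimal sketch. It assumes the expansion offset follows the rule from the DB paper, D = A × r / L (region area times `unclip_ratio`, divided by region perimeter), and that a candidate box is kept when its score reaches `box_thresh`. The function names are hypothetical and are not PaddleOCR's actual post-processing code.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def polygon_area(points: List[Point]) -> float:
    """Area of a simple polygon via the shoelace formula."""
    total = 0.0
    for i, (x1, y1) in enumerate(points):
        x2, y2 = points[(i + 1) % len(points)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def polygon_perimeter(points: List[Point]) -> float:
    """Sum of the edge lengths of the polygon."""
    total = 0.0
    for i, (x1, y1) in enumerate(points):
        x2, y2 = points[(i + 1) % len(points)]
        total += ((x2 - x1) ** 2 + (y2 - y1) ** 2) ** 0.5
    return total


def unclip_distance(points: List[Point], unclip_ratio: float = 1.5) -> float:
    """Offset used to expand a shrunk text region: D = A * r / L (assumed rule)."""
    return polygon_area(points) * unclip_ratio / polygon_perimeter(points)


def keep_box(score: float, box_thresh: float = 0.6) -> bool:
    """Keep a candidate box only if its score reaches box_thresh (assumed rule)."""
    return score >= box_thresh


# A 100 x 20 text region: area 2000, perimeter 240.
box = [(0.0, 0.0), (100.0, 0.0), (100.0, 20.0), (0.0, 20.0)]
print(unclip_distance(box, unclip_ratio=1.5))  # 12.5
print(keep_box(0.65), keep_box(0.55))          # True False
```

Under these assumptions, a larger `unclip_ratio` expands each detected region further, while a higher `box_thresh` discards more low-confidence boxes.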

|

|

||||||

|

|

||||||

For the training guide and use of PaddleOCR text detection algorithms, please refer to the document [Text detection model training/evaluation/prediction](./doc/doc_en/detection_en.md)

|

|

||||||

|

|

||||||

## TEXT RECOGNITION ALGORITHM

|

|

||||||

|

|

||||||

PaddleOCR open-source text recognition algorithms list:

|

|

||||||

- [x] CRNN([paper](https://arxiv.org/abs/1507.05717))

|

|

||||||

- [x] Rosetta([paper](https://arxiv.org/abs/1910.05085))

|

|

||||||

- [x] STAR-Net([paper](http://www.bmva.org/bmvc/2016/papers/paper043/index.html))

|

|

||||||

- [x] RARE([paper](https://arxiv.org/abs/1603.03915v1))

|

|

||||||

- [ ] SRN([paper](https://arxiv.org/abs/2003.12294))(Baidu Self-Research, coming soon)

|

|

||||||

|

|

||||||

Following [DTRB](https://arxiv.org/abs/1904.01906), the training and evaluation results of the text recognition algorithms above (trained on MJSynth and SynthText, evaluated on IIIT, SVT, IC03, IC13, IC15, SVTP, and CUTE) are as follows:

|

|

||||||

|

|

||||||

|Model|Backbone|Avg Accuracy|Module combination|Download link|
|-|-|-|-|-|
|Rosetta|Resnet34_vd|80.24%|rec_r34_vd_none_none_ctc|[Download link](https://paddleocr.bj.bcebos.com/rec_r34_vd_none_none_ctc.tar)|
|Rosetta|MobileNetV3|78.16%|rec_mv3_none_none_ctc|[Download link](https://paddleocr.bj.bcebos.com/rec_mv3_none_none_ctc.tar)|
|CRNN|Resnet34_vd|82.20%|rec_r34_vd_none_bilstm_ctc|[Download link](https://paddleocr.bj.bcebos.com/rec_r34_vd_none_bilstm_ctc.tar)|
|CRNN|MobileNetV3|79.37%|rec_mv3_none_bilstm_ctc|[Download link](https://paddleocr.bj.bcebos.com/rec_mv3_none_bilstm_ctc.tar)|
|STAR-Net|Resnet34_vd|83.93%|rec_r34_vd_tps_bilstm_ctc|[Download link](https://paddleocr.bj.bcebos.com/rec_r34_vd_tps_bilstm_ctc.tar)|
|STAR-Net|MobileNetV3|81.56%|rec_mv3_tps_bilstm_ctc|[Download link](https://paddleocr.bj.bcebos.com/rec_mv3_tps_bilstm_ctc.tar)|
|RARE|Resnet34_vd|84.90%|rec_r34_vd_tps_bilstm_attn|[Download link](https://paddleocr.bj.bcebos.com/rec_r34_vd_tps_bilstm_attn.tar)|
|RARE|MobileNetV3|83.32%|rec_mv3_tps_bilstm_attn|[Download link](https://paddleocr.bj.bcebos.com/rec_mv3_tps_bilstm_attn.tar)|

|

|

||||||

|

|

||||||

We use the [LSVT](https://github.com/PaddlePaddle/PaddleOCR/blob/develop/doc/doc_en/datasets_en.md#1-icdar2019-lsvt) dataset and crop 300,000 training samples from the original photos using the position ground truth, with calibration where needed. In addition, 5,000,000 synthetic samples are generated from the LSVT corpus to train the Chinese model. The related configuration and pre-trained models are as follows:

|

|

||||||

|Model|Backbone|Configuration file|Pre-trained model|Support for space recognition model|
|-|-|-|-|-|
|lightweight Chinese model|MobileNetV3|rec_chinese_lite_train.yml|[Download link](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_mv3_crnn.tar)|[inference model](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_mv3_crnn_enhance_infer.tar) & [pre-trained model](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_mv3_crnn_enhance.tar)|
|General Chinese OCR model|Resnet34_vd|rec_chinese_common_train.yml|[Download link](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_r34_vd_crnn.tar)|[inference model](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_r34_vd_crnn_enhance_infer.tar) & [pre-trained model](https://paddleocr.bj.bcebos.com/ch_models/ch_rec_r34_vd_crnn_enhance.tar)|

|

|

||||||

|

|

||||||

For the training guide and use of PaddleOCR text recognition algorithms, please refer to the document [Text recognition model training/evaluation/prediction](./doc/doc_en/recognition_en.md)

|

|

||||||

|

|

||||||

## END-TO-END OCR ALGORITHM

|

|

||||||

- [ ] [End2End-PSL](https://arxiv.org/abs/1909.07808)(Baidu Self-Research, coming soon)

|

|

||||||

|

|

||||||

<a name="lightweight-Chinese-OCR-results"></a>

|

|

||||||

## LIGHTWEIGHT CHINESE OCR RESULTS

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

<a name="General-Chinese-OCR-results"></a>

|

|

||||||

## General Chinese OCR results

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

<a name="Space-Chinese-OCR-results"></a>

|

|

||||||

|

|

||||||

## space Chinese OCR results

|

|

||||||

|

|

||||||

### LIGHTWEIGHT CHINESE OCR RESULTS

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

### General Chinese OCR results

|

|

||||||

|

|

||||||

|

|

||||||

<a name="FAQ"></a>

|

|

||||||

## FAQ

|

|

||||||

1. Error when using attention-based recognition model: KeyError: 'predict'

|

|

||||||

|

|

||||||

The inference of recognition model based on attention loss is still being debugged. For Chinese text recognition, it is recommended to choose the recognition model based on CTC loss first. In practice, it is also found that the recognition model based on attention loss is not as effective as the one based on CTC loss.

|

|

||||||

|

|

||||||

2. About inference speed

|

|

||||||

|

|

||||||

When there are a lot of texts in the picture, the prediction time will increase. You can use `--rec_batch_num` to set a smaller prediction batch size. The default value is 30, which can be changed to 10 or other values.

|

|

||||||

|

|

||||||

3. Service deployment and mobile deployment

|

|

||||||

|

|

||||||

It is expected that the service deployment based on Serving and the mobile deployment based on Paddle Lite will be released successively in mid-to-late June. Stay tuned for more updates.

|

|

||||||

|

|

||||||

4. Release time of self-developed algorithm

|

|

||||||

|

|

||||||

Baidu Self-developed algorithms such as SAST, SRN and end2end PSL will be released in June or July. Please be patient.

|

|

||||||

|

|

||||||

[more](./doc/doc_en/FAQ_en.md)
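As an illustration of the `--rec_batch_num` option from FAQ item 2, the sketch below splits a list of detected text crops into fixed-size batches before recognition. It is a simplified assumption about how such batching can work, with the hypothetical helper `iter_batches`; it is not the actual PaddleOCR inference code.

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def iter_batches(items: Sequence[T], batch_num: int = 30) -> Iterator[List[T]]:
    """Yield consecutive batches of at most batch_num items."""
    if batch_num <= 0:
        raise ValueError("batch_num must be positive")
    for start in range(0, len(items), batch_num):
        yield list(items[start:start + batch_num])


# 25 hypothetical text crops, recognized 10 at a time.
crops = [f"crop_{i}" for i in range(25)]
sizes = [len(batch) for batch in iter_batches(crops, batch_num=10)]
print(sizes)  # [10, 10, 5]
```

A smaller batch size means more recognition passes per image, each working on fewer crops at a time.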

|

|

||||||

|

|

||||||

## WELCOME TO THE PaddleOCR TECHNICAL EXCHANGE GROUP

|

|

||||||

Add the WeChat ID paddlehelp with the note "OCR", and our assistant will add you to the group.

|

|

||||||

|

|

||||||

<img src="./doc/paddlehelp.jpg" width = "200" height = "200" />

|

|

||||||

|

|

||||||

## REFERENCES

|

|

||||||

```
1. EAST:
@inproceedings{zhou2017east,
  title={EAST: an efficient and accurate scene text detector},
  author={Zhou, Xinyu and Yao, Cong and Wen, He and Wang, Yuzhi and Zhou, Shuchang and He, Weiran and Liang, Jiajun},
  booktitle={Proceedings of the IEEE conference on Computer Vision and Pattern Recognition},
  pages={5551--5560},
  year={2017}
}

2. DB:
@article{liao2019real,
  title={Real-time Scene Text Detection with Differentiable Binarization},
  author={Liao, Minghui and Wan, Zhaoyi and Yao, Cong and Chen, Kai and Bai, Xiang},
  journal={arXiv preprint arXiv:1911.08947},
  year={2019}
}

3. DTRB:
@inproceedings{baek2019wrong,
  title={What is wrong with scene text recognition model comparisons? dataset and model analysis},
  author={Baek, Jeonghun and Kim, Geewook and Lee, Junyeop and Park, Sungrae and Han, Dongyoon and Yun, Sangdoo and Oh, Seong Joon and Lee, Hwalsuk},
  booktitle={Proceedings of the IEEE International Conference on Computer Vision},
  pages={4715--4723},
  year={2019}
}

4. SAST:
@inproceedings{wang2019single,
  title={A Single-Shot Arbitrarily-Shaped Text Detector based on Context Attended Multi-Task Learning},
  author={Wang, Pengfei and Zhang, Chengquan and Qi, Fei and Huang, Zuming and En, Mengyi and Han, Junyu and Liu, Jingtuo and Ding, Errui and Shi, Guangming},
  booktitle={Proceedings of the 27th ACM International Conference on Multimedia},
  pages={1277--1285},
  year={2019}
}

5. SRN:
@article{yu2020towards,
  title={Towards Accurate Scene Text Recognition with Semantic Reasoning Networks},
  author={Yu, Deli and Li, Xuan and Zhang, Chengquan and Han, Junyu and Liu, Jingtuo and Ding, Errui},
  journal={arXiv preprint arXiv:2003.12294},
  year={2020}
}

6. end2end-psl:
@inproceedings{sun2019chinese,
  title={Chinese Street View Text: Large-scale Chinese Text Reading with Partially Supervised Learning},
  author={Sun, Yipeng and Liu, Jiaming and Liu, Wei and Han, Junyu and Ding, Errui and Liu, Jingtuo},
  booktitle={Proceedings of the IEEE International Conference on Computer Vision},
  pages={9086--9095},
  year={2019}
}
```

|

|

||||||

|

|

||||||

## LICENSE

|

|

||||||

This project is released under the <a href="https://github.com/PaddlePaddle/PaddleOCR/blob/master/LICENSE">Apache 2.0 license</a>.

|

|

||||||

|

|

||||||

## CONTRIBUTION

|

|

||||||

We welcome all contributions to PaddleOCR and greatly appreciate your feedback.

|

|

||||||

|

|

||||||

- Many thanks to [Khanh Tran](https://github.com/xxxpsyduck) for contributing the English documentation.

|

|

||||||

- Many thanks to [zhangxin](https://github.com/ZhangXinNan) for contributing the new visualization function, adding .gitignore, and removing the need to set PYTHONPATH manually.

|

|

||||||

- Many thanks to [lyl120117](https://github.com/lyl120117) for contributing the code for printing the network structure.

|

|

||||||

|

|

@ -0,0 +1,17 @@

|

||||||

|

# Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.

|

||||||

|

#

|

||||||

|

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||||

|

# you may not use this file except in compliance with the License.

|

||||||

|

# You may obtain a copy of the License at

|

||||||

|

#

|

||||||

|

# http://www.apache.org/licenses/LICENSE-2.0

|

||||||

|

#

|

||||||

|

# Unless required by applicable law or agreed to in writing, software

|

||||||

|

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||||

|

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||||

|

# See the License for the specific language governing permissions and

|

||||||

|

# limitations under the License.

|

||||||

|

|

||||||

|

__all__ = ['PaddleOCR', 'draw_ocr']

|

||||||

|

from .paddleocr import PaddleOCR

|

||||||

|

from .tools.infer.utility import draw_ocr

|

||||||

|

|

@ -0,0 +1,44 @@

|

||||||

|

Global:
  algorithm: CLS
  use_gpu: False
  epoch_num: 100
  log_smooth_window: 20
  print_batch_step: 100
  save_model_dir: output/cls_mv3
  save_epoch_step: 3
  eval_batch_step: 500
  train_batch_size_per_card: 512
  test_batch_size_per_card: 512
  image_shape: [3, 48, 192]
  label_list: ['0','180']
  distort: True
  reader_yml: ./configs/cls/cls_reader.yml
  pretrain_weights:
  checkpoints:
  save_inference_dir:
  infer_img:

Architecture:
  function: ppocr.modeling.architectures.cls_model,ClsModel

Backbone:
  function: ppocr.modeling.backbones.rec_mobilenet_v3,MobileNetV3
  scale: 0.35
  model_name: small

Head:
  function: ppocr.modeling.heads.cls_head,ClsHead
  class_dim: 2

Loss:
  function: ppocr.modeling.losses.cls_loss,ClsLoss

Optimizer:
  function: ppocr.optimizer,AdamDecay
  base_lr: 0.001
  beta1: 0.9
  beta2: 0.999
  decay:
    function: cosine_decay
    step_each_epoch: 1169
    total_epoch: 100

|

||||||

|

|

@ -0,0 +1,13 @@

|

||||||

|

TrainReader:
  reader_function: ppocr.data.cls.dataset_traversal,SimpleReader
  num_workers: 8
  img_set_dir: ./train_data/cls
  label_file_path: ./train_data/cls/train.txt

EvalReader:
  reader_function: ppocr.data.cls.dataset_traversal,SimpleReader
  img_set_dir: ./train_data/cls
  label_file_path: ./train_data/cls/test.txt

TestReader:
  reader_function: ppocr.data.cls.dataset_traversal,SimpleReader

|

||||||

|

|

@ -49,6 +49,6 @@ Optimizer:

|

||||||

PostProcess:

|

PostProcess:

|

||||||

function: ppocr.postprocess.db_postprocess,DBPostProcess

|

function: ppocr.postprocess.db_postprocess,DBPostProcess

|

||||||

thresh: 0.3

|

thresh: 0.3

|

||||||

box_thresh: 0.7

|

box_thresh: 0.6

|

||||||

max_candidates: 1000

|

max_candidates: 1000

|

||||||

unclip_ratio: 2.0

|

unclip_ratio: 1.5

|

||||||

|

|

|

||||||

|

|

@ -0,0 +1,59 @@

|

||||||

|

Global:
  algorithm: DB
  use_gpu: true
  epoch_num: 1200
  log_smooth_window: 20
  print_batch_step: 2
  save_model_dir: ./output/det_db/
  save_epoch_step: 200
  # evaluation is run every 5000 iterations after the 4000th iteration
  eval_batch_step: [4000, 5000]
  train_batch_size_per_card: 16
  test_batch_size_per_card: 16
  image_shape: [3, 640, 640]
  reader_yml: ./configs/det/det_db_icdar15_reader.yml
  pretrain_weights: ./pretrain_models/MobileNetV3_large_x0_5_pretrained/
  checkpoints:
  save_res_path: ./output/det_db/predicts_db.txt
  save_inference_dir:

Architecture:
  function: ppocr.modeling.architectures.det_model,DetModel

Backbone:
  function: ppocr.modeling.backbones.det_mobilenet_v3,MobileNetV3
  scale: 0.5
  model_name: large
  disable_se: true

Head:
  function: ppocr.modeling.heads.det_db_head,DBHead
  model_name: large
  k: 50
  inner_channels: 96
  out_channels: 2

Loss:
  function: ppocr.modeling.losses.det_db_loss,DBLoss
  balance_loss: true
  main_loss_type: DiceLoss
  alpha: 5
  beta: 10
  ohem_ratio: 3

Optimizer:
  function: ppocr.optimizer,AdamDecay
  base_lr: 0.001
  beta1: 0.9
  beta2: 0.999
  decay:
    function: cosine_decay_warmup
    step_each_epoch: 16
    total_epoch: 1200

PostProcess:
  function: ppocr.postprocess.db_postprocess,DBPostProcess
  thresh: 0.3
  box_thresh: 0.6
  max_candidates: 1000
  unclip_ratio: 1.5

|

||||||

|

|

@ -0,0 +1,57 @@

|

||||||

|

Global:
  algorithm: DB
  use_gpu: true
  epoch_num: 1200
  log_smooth_window: 20
  print_batch_step: 2
  save_model_dir: ./output/det_r_18_vd_db/
  save_epoch_step: 200
  eval_batch_step: [3000, 2000]
  train_batch_size_per_card: 8
  test_batch_size_per_card: 1
  image_shape: [3, 640, 640]
  reader_yml: ./configs/det/det_db_icdar15_reader.yml
  pretrain_weights: ./pretrain_models/ResNet18_vd_pretrained/
  save_res_path: ./output/det_r18_vd_db/predicts_db.txt
  checkpoints:
  save_inference_dir:

Architecture:

|