
Introduction

Style-Text is a data synthesis tool based on Baidu's self-developed text editing algorithm, "Editing Text in the Wild" (https://arxiv.org/abs/1908.03047).

Unlike the commonly used GAN-based data synthesis tools, the main framework of Style-Text consists of three modules:

- (1) Text foreground style transfer module.

- (2) Background extraction module.

- (3) Fusion module.

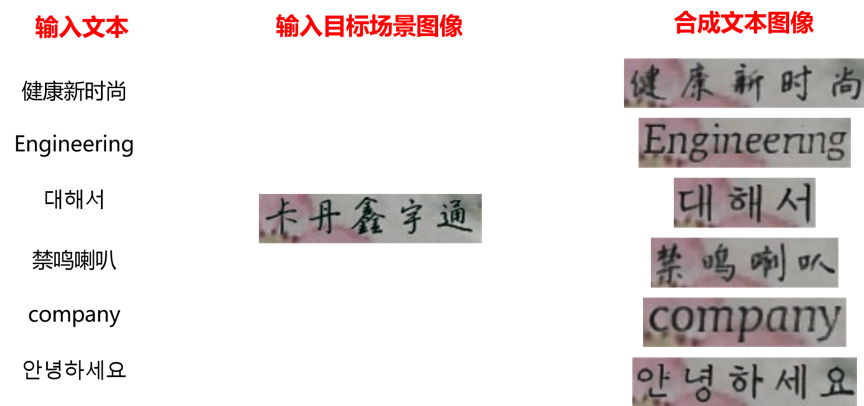

Together, these three steps quickly transfer the text style of an image. The following figure shows some results of the data synthesis tool.

Preparation

- Please refer to the QUICK INSTALLATION guide to install PaddlePaddle. A Python3 environment is strongly recommended.

- Download the pretrained models and unzip:

      cd StyleText
      wget https://paddleocr.bj.bcebos.com/dygraph_v2.0/style_text/style_text_models.zip
      unzip style_text_models.zip

If you save the models in another location, update the model paths in `configs/config.yml`. All three of the following settings must be changed together:

    bg_generator:
      pretrain: style_text_rec/bg_generator
    ...
    text_generator:
      pretrain: style_text_models/text_generator
    ...
    fusion_generator:
      pretrain: style_text_models/fusion_generator
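As a minimal sketch of changing the three paths together, the helper below rewrites every `pretrain` entry to point at a new model directory. It assumes the configuration has already been loaded into a nested `dict` whose top-level keys are the three generator sections; `relocate_pretrain` and the `/data/models` directory are hypothetical names used only for illustration.

```python
import copy

# The three sections that must be kept in sync, as listed in the README.
GENERATOR_KEYS = ("bg_generator", "text_generator", "fusion_generator")


def relocate_pretrain(config: dict, model_root: str) -> dict:
    """Return a copy of config whose three pretrain paths live under model_root."""
    updated = copy.deepcopy(config)
    root = model_root.rstrip("/")
    for key in GENERATOR_KEYS:
        # Keep the last path component (e.g. "bg_generator"), swap the directory.
        name = updated[key]["pretrain"].rstrip("/").split("/")[-1]
        updated[key]["pretrain"] = f"{root}/{name}"
    return updated


# Assumed in-memory form of the relevant part of configs/config.yml.
config = {
    "bg_generator": {"pretrain": "style_text_rec/bg_generator"},
    "text_generator": {"pretrain": "style_text_models/text_generator"},
    "fusion_generator": {"pretrain": "style_text_models/fusion_generator"},
}

for section, values in relocate_pretrain(config, "/data/models").items():
    print(section, "->", values["pretrain"])
```

Working on a copy keeps the original settings available in case only some of the models were moved.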

Demo

Synthesize a single image

- Run `tools/synth_image` to generate the demo image:

      python3 -m tools.synth_image -c configs/config.yml

- The results are `fake_bg.jpg`, `fake_text.jpg` and `fake_fusion.jpg`, as shown in the figure above. Among them:
  - `fake_text.jpg` is the generated image with the same font style as `Style Input`;
  - `fake_bg.jpg` is the image generated from `Style Input` after removing the foreground;
  - `fake_fusion.jpg` is the final result, synthesized from `fake_text.jpg` and `fake_bg.jpg`.

- To generate an image from a different `Style Input` or `Text Input`, modify `tools/synth_image.py`:
  - `img = cv2.imread("examples/style_images/1.jpg")`: the path of `Style Input`;
  - `corpus = "PaddleOCR"`: the `Text Input`;
  - Notice: set the language option (`language = "en"`) to match `Text Input`. The supported values are `en`, `ch` and `ko`.

- We also provide the `batch_synth_images` method, which combines corpora and pictures in pairs to generate a batch of data.
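One way to read "combine corpus and pictures in pairs" is as every combination of a style image with a corpus string. The sketch below builds such a job list with `itertools.product`. `pair_inputs` is a hypothetical helper, not the repository's `batch_synth_images`, and the second corpus string is illustrative. If one-to-one pairing is what is intended, `zip` would take the place of `product`.

```python
from itertools import product
from typing import Iterator, List, Tuple


def pair_inputs(style_images: List[str], corpora: List[str]) -> Iterator[Tuple[str, str]]:
    """Yield every (style image path, corpus string) combination."""
    return product(style_images, corpora)


style_images = ["examples/style_images/1.jpg", "examples/style_images/2.jpg"]
corpora = ["PaddleOCR", "StyleText"]  # second entry is an illustrative value

jobs = list(pair_inputs(style_images, corpora))
for image_path, corpus in jobs:
    # Each job would be handed to the synthesiser as one Style Input / Text Input pair.
    print(image_path, corpus)
```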

Batch synthesis

Before you start, prepare some source material. First, you need style reference images for the synthesis task; these are usually taken from datasets used for OCR recognition.

- The reference dataset can be specified in `configs/dataset_config.yml`:

  - `StyleSampler`:
    - `method`: The method of `StyleSampler`.
    - `image_home`: The directory of the pictures.
    - `label_file`: The file listing picture paths if `with_label` is `false`; otherwise, the label file path.
    - `with_label`: Whether `label_file` is a label file.

  - `CorpusGenerator`:
    - `method`: The method of `CorpusGenerator`. If `FileCorpus` is used, set `corpus_file` and `language` accordingly; if `EnNumCorpus` is used, no other configuration is needed.
    - `language`: The language of the corpus. Required if `method` is not `EnNumCorpus`.
    - `corpus_file`: The corpus file path. Required if `method` is not `EnNumCorpus`.

- We provide a general dataset containing Chinese, English and Korean text (50,000 images in all) for you to try (download link). Some examples are shown below:

- Run the following command to start the synthesis task:

      python3 -m tools.synth_dataset -c configs/dataset_config.yml

- To run several synthesis tasks in parallel, start one command per task and give each a distinct tag with `-t`:

      python3 -m tools.synth_dataset -t 0 -c configs/dataset_config.yml
      python3 -m tools.synth_dataset -t 1 -c configs/dataset_config.yml
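The configuration rules and the tagged commands above can be sketched in a few lines of Python. The block below checks a dataset configuration against the stated rules (`language` and `corpus_file` are needed unless `method` is `EnNumCorpus`) and builds one tagged command per task. It assumes the YAML file has already been loaded into a nested `dict`. `check_dataset_config` and `build_commands` are hypothetical helpers, and the example values are illustrative. Note that Python's `-m` option takes a module name, so the module is written as `tools.synth_dataset` without the `.py` suffix.

```python
import sys
from typing import Dict, List


def check_dataset_config(config: Dict) -> List[str]:
    """Return a list of problems; an empty list means the config looks consistent."""
    problems = []

    sampler = config.get("StyleSampler", {})
    for key in ("method", "image_home", "label_file", "with_label"):
        if key not in sampler:
            problems.append(f"StyleSampler.{key} is missing")

    corpus = config.get("CorpusGenerator", {})
    method = corpus.get("method")
    if method is None:
        problems.append("CorpusGenerator.method is missing")
    elif method != "EnNumCorpus":
        # Every method other than EnNumCorpus needs a language and a corpus file.
        for key in ("language", "corpus_file"):
            if not corpus.get(key):
                problems.append(
                    f"CorpusGenerator.{key} is required when method is {method}"
                )
    return problems


def build_commands(num_tasks: int,
                   config_path: str = "configs/dataset_config.yml") -> List[List[str]]:
    """Build one tagged command per task (tags 0 .. num_tasks - 1)."""
    return [
        [sys.executable, "-m", "tools.synth_dataset", "-t", str(tag), "-c", config_path]
        for tag in range(num_tasks)
    ]


# Illustrative values only; the sampler method name is a placeholder.
example_config = {
    "StyleSampler": {
        "method": "SamplerMethodName",
        "image_home": "examples",
        "label_file": "examples/image_list.txt",
        "with_label": False,
    },
    "CorpusGenerator": {
        "method": "FileCorpus",
        "language": "en",
        "corpus_file": "examples/corpus/example.txt",
    },
}

print(check_dataset_config(example_config))
for command in build_commands(2):
    print(" ".join(command))
```

Each command list can be passed to `subprocess.Popen` from the StyleText directory to start the tasks side by side.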

Advanced Usage

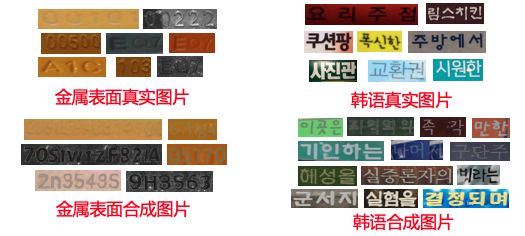

We take two scenarios, English and number recognition on metal surfaces and general Korean recognition, as practical examples of using StyleText to synthesize data that improves text recognition. The following figure shows some examples of real scene images and synthesized images:

After the synthetic data is added to training, the accuracy of the recognition model improves, as shown in the following table:

| Scenario | Characters | Raw Data | Test Data | Recognition Accuracy (Raw Data Only) | New Synthetic Data | Recognition Accuracy (Raw + Synthetic Data) | Accuracy Improvement |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Metal surface | English and numbers | 2203 | 650 | 0.5938 | 20000 | 0.7546 | 16% |
| Random background | Korean | 5631 | 1230 | 0.3012 | 100000 | 0.5057 | 20% |
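The last column of the table is the absolute gain in accuracy, measured in percentage points and rounded down to a whole number. The short calculation below reproduces it from the two accuracy columns and also prints the relative gain for comparison; `gains` is a helper introduced here for illustration.

```python
# Accuracy before and after adding synthetic data, copied from the table.
rows = {
    "Metal surface": (0.5938, 0.7546),
    "Random background": (0.3012, 0.5057),
}


def gains(before: float, after: float):
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute_points = (after - before) * 100
    relative_percent = (after - before) / before * 100
    return absolute_points, relative_percent


for name, (before, after) in rows.items():
    absolute_points, relative_percent = gains(before, after)
    print(f"{name}: +{absolute_points:.2f} points absolute, "
          f"+{relative_percent:.1f}% relative")
```

The absolute gains come to roughly 16 and 20 points, matching the table, while the relative gains are larger because both baselines are well below 1.0.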

Code Structure

    style_text_rec
    |-- arch
    |   |-- base_module.py
    |   |-- decoder.py
    |   |-- encoder.py
    |   |-- spectral_norm.py
    |   `-- style_text_rec.py
    |-- configs
    |   |-- config.yml
    |   `-- dataset_config.yml
    |-- engine
    |   |-- corpus_generators.py
    |   |-- predictors.py
    |   |-- style_samplers.py
    |   |-- synthesisers.py
    |   |-- text_drawers.py
    |   `-- writers.py
    |-- examples
    |   |-- corpus
    |   |   `-- example.txt
    |   |-- image_list.txt
    |   `-- style_images
    |       |-- 1.jpg
    |       `-- 2.jpg
    |-- fonts
    |   |-- ch_standard.ttf
    |   |-- en_standard.ttf
    |   `-- ko_standard.ttf
    |-- tools
    |   |-- __init__.py
    |   |-- synth_dataset.py
    |   `-- synth_image.py
    `-- utils
        |-- config.py
        |-- load_params.py
        |-- logging.py
        |-- math_functions.py
        `-- sys_funcs.py